Research: Eye Tracking Analysis Using Convolutional Neural Network

Eye tracking plays a pivotal role in improving user interfaces because it reveals what a person is actually looking at, whether the gaze is captured with a webcam, an external camera, or an infrared eye tracker, depending on needs and conditions. With eye tracking, one can test video material, advertisement performance, package design concepts, product shelf placement, websites, mobile websites, and apps. It provides insight into processes that matter across academia, science and technology, marketing, and other research areas. The goal of eye tracking is to detect and measure the point of gaze (where one is looking) or the motion of the eye(s) relative to the head. This study examines the current state of the art in deep learning-based gaze estimation algorithms, with a particular focus on Convolutional Neural Networks (CNNs). Several studies focus on approaches for handling different head poses in gaze estimation, and large-scale gaze estimation datasets with varied head poses and illumination conditions are covered in the current study. We build a model that detects whether a captured eye is the right or the left eye and a model that predicts the gaze point, and we evaluate how accurate these predictions are. This problem requires a method with high learning capacity that can manage the complexity of the given dataset. In the present study, a CNN proved effective for this problem.

I. INTRODUCTION

Eye tracking is the method of tracking a person’s eye movement in order to determine where they are looking and how long they are looking. As eye tracking techniques necessitate a precise mechanism for detecting the eye gaze, new methodologies must be investigated in order to expand the usage of eye tracking on a larger scale. The recent success of deep learning in computer vision has been seen in various fields, but its impact on enhancing eye tracking performance has been limited [7]. Eye tracking is a very useful tool for any form of human behaviour research. It can be applied in a multitude of sectors, including transportation, education, visual systems, the economy, clinical research and education, psychology and neuroscience, psycholinguistics and healthcare, user experience and interaction, marketing, sports performance and research, virtual reality (VR), product design, and software engineering [43].

II. RELATED WORKS – LITERATURE SURVEY

Eyes and their movements are important in expressing a person’s desires, needs, and emotional states. The motion and geometric characteristics of the eyes are unique, which makes pupil localization, gaze estimation, and eye tracking important for applications such as biometric security and human attention analysis. Eye tracking systems detect regions of interest to the researcher by measuring the user’s eye position, movement, and pupil size at a given time. The first approaches to measuring a person’s gaze direction date back to the early 1900s. Eye tracking is a useful means for any form of human behaviour research and has also been applied to driving fatigue alert systems, mental health screening, and an eye-tracking powered wheelchair [42]. It can be used to develop applications in a variety of fields, including healthcare and medicine, psychology, marketing, engineering, education, and gaming, as well as to enhance human–computer interaction by allowing users to navigate and control their devices with their eyes [17]. Eye tracking has proven advantageous in a variety of domains, particularly in the medical and diagnostic fields.

Marquez et al. presented a systematic review of the integration of eye tracking with other technologies, such as simulators, motion capture devices, and augmented reality, to better understand individuals’ gaze patterns in different scenarios [15]. They highlighted that eye tracking can be used in relation to different human aspects for a variety of applications and industries. Eye tracking research has an exciting future, particularly in light of potential integrations with technologies such as NIBS, VR, and AR. R. Parte et al. [31] explored distinct eye tracking and eye detection methods together with examples of applications that use these techniques. In fields from scientific research to commercial applications, eye tracking is gaining popularity as an innovative tool. Accurately estimating human gaze direction has many applications in assistive technologies for users with motor disabilities, gaze-based human-computer interaction, visual attention analysis, consumer behaviour research, AR, VR, and more. Researchers have developed different algorithms and techniques for eye tracking and gaze estimation that can be valuable in many types of applications.

A. Eye tracking methods and techniques

Eye tracking concepts, methodologies, and strategies were reviewed by A.F. Klaib et al. [34], who elaborated on recent methods such as machine learning (ML), the Internet of Things (IoT), and cloud computing. These methods have been around for over two decades and have been actively employed in the improvement of modern eye tracking applications. Dario Cazzato et al. [7] gave a detailed analysis of the literature, discussing how the great advancements in computer vision and machine learning have impacted gaze tracking in the last decade. The benefit of deep learning in a variety of computer vision areas is now clear, but its effect on the performance of eye tracking has been quite limited.

B. Algorithms and Models

Ildar Rakhmatulin and Andrew T. Duchowski [3] examined a number of different deep neural network models that can be employed in the gaze monitoring process. They suggested a novel eye-tracking system that significantly increases the efficiency of deep learning methods. Horng and Kung[13] investigated the impact of employing different convolutional layers, batch normalisation, and the global average pooling layer on a CNN-based gaze tracking system. A novel method was developed for labelling the participant’s facial images as gaze points received from an eye tracker while watching videos in order to create a training dataset that is closer to human visual behaviour. C. Meng and X. Zhao described Webcam-Based Eye Movement Analysis Using CNN [23].

C. CNN for eye tracking

Fuhl et al. [6] showed that a naturally motivated pipeline of specifically designed CNNs for robust pupil detection outperforms state-of-the-art techniques. In three office activity recognition tasks, the innovative CNN-based model outperforms other state-of-the-art approaches. C. Meng and X. Zhao suggested a new CNN-based model for eye movement analysis [23]. By detecting the feature point and classifying the original time-varying eye movement signals using a CNN, this model introduces a new method for eye movement analysis. Jonathan Griffin and Andrea Ramirez [4] concluded from their CNN-based eye tracking model that CNNs are a good tool for developing eye tracking systems for VR (Virtual Reality) and AR (Augmented Reality) systems. There is still a lot of work to be done in this area in order to fully utilise the benefits of CNNs.

Braiden Brousseau et al. [5] demonstrated hybrid eye tracking on a smartphone using CNN feature extraction and an infrared 3D model. Herman [32] described a new tool for eye tracking data and their analysis with the use of interactive 3D models. Chaudhary et al. [25] designed a computationally efficient model for the segmentation of eye images. They proposed the RITnet model, which is a deep neural network that combines U-Net and DenseNet. They also presented methods for implementing multiple loss functions that can tackle class imbalance and ensure crisp semantic boundaries.

Michael Barz and Daniel Sonntag [44] implemented two methods for detecting visual attention using pre-trained deep learning models from computer vision for mobile eye tracking. Wei-Liang Ou et al. developed pupil center detection and tracking technology for visible-light wearable gaze tracking devices using deep-learning object detection based on the You Only Look Once (YOLO) concept [12]. S. Park et al. were the first to attempt an explicit prior designed for the task of gaze estimation within a neural network architecture. They introduced a novel pictorial representation which they called gazemaps [11].

S. Park et al. [11] introduced several effective steps towards increasing screen-based eye-tracking performance even in the absence of labelled samples or eye tracker calibration from the final target user. They identified that eye movements and the change in visual stimulus have a complex interplay.

Păsărică et al. [1] implemented and compared two pupil detection algorithms: the circular Hough transform (CHT), a parameter-based algorithm, and Starburst, a model- and feature-based algorithm. Joni Salminen et al. [39] gave a detailed account of confusion prediction from eye tracking data, using fixation information from a user study as features for machine learning.

Hui Hui Chen et al. [24] investigated the impact of different convolutional layers, batch normalisation, and the global average pooling layer on a CNN-based gaze tracking system. They suggested a unique approach for labelling participant face images as gaze points acquired from an eye tracker while watching videos in order to provide a training dataset that is more representative of human visual behaviour. Luca Antonioli, et al. [22] implemented a fully automatic approach based on two stages convolutional neural networks (CNNs) for automatic pupil and iris detection in ocular proton therapy (OPT).

D. Machine learning for eye tracking

Dr. M. Amanullah and R. Lavanya used the Haar Cascade machine learning object detection algorithm to identify objects. The direction in which the driver is looking is estimated from the location of the driver’s eye gaze. The developed algorithm was implemented using OpenCV to create a portable system for accident prevention [35].

EllSeg, a basic 3-class complete elliptical segmentation framework introduced by Rakshit Kothari et al. [28], demonstrated improved ellipse shape and centre estimation when compared to the usual 4-class eye components segmentation for robust gaze tracking.

More recently, eye tracking has also been introduced into the field of survey methodology to study cognitive processes during survey responding. Cornelia Eva Neuert and Timo Lenzner examined whether eye tracking is an effective supplement to cognitive interviewing in evaluating and improving survey questions [38]. Consuelo and Saiz-Manzanares presented an examination of the learning process based on the findings of eye tracking methods using statistical tests and supervised and unsupervised machine learning approaches, and compared the performance of each [10]. Zehui Zhan et al. [36] proposed a multi-feature regularization machine learning mechanism to detect online learners’ reading ability based on eye-tracking sensors.

Bozomitu et al. [9] described the development of a pupil detection algorithm for eye tracking-based human–computer interaction in real-time applications. Monika Kaczorowska et al. assessed cognitive workload level using selected three-class machine learning models on eye tracking features [47]. Alhamzawi Hussein Ali Mezher studied the distribution and analysis of gaze, using the gaze direction across parts of the face for emotion detection. To achieve this goal, a gaze detection system was developed and tested on the pupils included in the study [27]. Erik Lind presented SPAZE, a method for calibrated appearance-based gaze tracking. SPAZE achieves state-of-the-art results on MPIIGaze and is as accurate as model-based gaze tracking on high-resolution, near-infrared images [26]. Al-Hameed and Guirguis reviewed the latest developments in non-contact video-based gaze tracking [17]. K. Raju proposed a model for gaze tracking using a web camera in a desktop environment by integrating the eye vector and head movement information [16]. Clay and König described and discussed a method to easily track eye movements inside a virtual environment [2]. Sharma et al. [45] investigated stimuli-based gaze analytics to enhance motivation and learning through eye tracking and artificial intelligence.

E. Applications of eye tracking

Khan and Lee highlighted the uses of eye and gaze tracking systems in advanced driver assistance systems (ADAS), as well as the techniques for acquiring driver eye and gaze data and the algorithms used to interpret this data. They discuss how data on a driver’s eyes and gaze may be used in ADAS to decrease losses associated with road accidents caused by the driver’s visual distraction [29]. Nguyen and Chew [37] used an eye tracking system to identify driver drowsiness using a combination of the Viola-Jones algorithm and the PERCLOS technique. Moreno-Esteva et al. [20] presented a study on the application of mathematical and machine learning techniques to analyse eye tracking data, enabling a better understanding of children’s visual cognitive behaviours [40].

Hu et al. [18] presented a detailed review of recent techniques for eye detection and gaze estimation along with the advantages and disadvantages of these systems. Aurélie Vasseur et al. provided a broad literature review of eye tracking for Information Systems (IS) research [46]. Eraslan and Yesilada investigated the effect of the number of users on scan path analysis, using their algorithm for identifying the path most followed by multiple users [14]. Their experimental results suggest that it is possible to approximate the same results with a smaller number of users. The results also suggest that more users are required when they browse web pages serendipitously than when they search for specific information or items [19].

Eye detection and tracking has been an active research field in recent years, as it adds convenience to a variety of applications. Eye-gaze tracking is considered a non-traditional method of human-computer interaction, and eye tracking is considered the easiest alternative interface method. Several different approaches have been proposed for eye tracking and eye detection, and different algorithms have been implemented for these technologies. The latest literature supports the need for advancement in this area. We follow the same principle by choosing eye tracking technologies and narrowing the topic to benefit a wide spectrum of applications. More research is needed to make eye tracking reliable for many real-world applications.

III. PROPOSED WORK

IV. IMPLEMENTATION

A. Materials and methods used

Researchers have proposed many algorithms and techniques to automatically track gaze position and direction, which can be useful in a variety of applications [42]. The algorithms considered in this study range from classic edge detection, ellipse fitting, and low-level vision to modern deep learning methods such as CNNs and DNNs, supported by libraries such as OpenCV. OpenCV is an open-source computer vision and machine learning software library that provides a common infrastructure for computer vision applications.

Deep neural network (DNN) algorithms can, in general, be a powerful tool for predicting key points in eye tracking [13]. For the GazeCapture dataset, and as part of our research, a DNN is helpful for classifying images as valid or invalid. It is combined with OpenCV, which helps in preprocessing images, for example edge detection [21], ellipse fitting, rotation, blur, exposure, reflection, and compression handling, and also in predicting the x and y points.

The bounding-box fields in the dataset are defined as follows. X, Y: position of the top-left corner of the bounding box (in pixels). In appleFace.json, this value is relative to the top-left corner of the full frame; in appleLeftEye.json and appleRightEye.json, it is relative to the top-left corner of the face crop. W, H: width and height of the bounding box (in pixels). IsValid: whether or not there was actually a detection (1 = detection; 0 = no detection).

CNNs are widely used machine learning models for image analysis. They have a high learning capacity and can manage the complexity of large problems that are fully specified by a dataset [41]. We used convolutional neural networks (CNNs) [30] to address the challenge of eye tracking because of their recent success in computer vision. For the GazeCapture data, the CNN is used to extract key features from the images, such as details of the eyelid, the pupil, and the area of the eye. Our review of previous work in image classification showed that CNNs have consistently produced good results over time [42], so we decided to employ a CNN for our image classification task.
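The bounding-box convention described above, where eye boxes are given relative to the face crop, can be illustrated with a minimal sketch. It assumes that each JSON file stores parallel per-frame lists under the keys X, Y, W, H, and IsValid; that layout, the function name `eye_box_in_frame`, and the sample values are assumptions made for illustration.

```python
import json

def eye_box_in_frame(face, eye, i):
    """Return (x, y, w, h) of an eye box in full-frame pixels for frame i, or None."""
    # Skip frames where either detection is missing (IsValid == 0).
    if not (face["IsValid"][i] and eye["IsValid"][i]):
        return None
    # Eye X/Y are relative to the face crop, so offset them by the face's top-left corner.
    x = face["X"][i] + eye["X"][i]
    y = face["Y"][i] + eye["Y"][i]
    return (x, y, eye["W"][i], eye["H"][i])

# Hypothetical two-frame records (assumed layout); real files would be read with json.load.
face = json.loads('{"X": [100, 0], "Y": [200, 0], "W": [300, 0], "H": [300, 0], "IsValid": [1, 0]}')
left_eye = json.loads('{"X": [40, 0], "Y": [60, 0], "W": [80, 0], "H": [80, 0], "IsValid": [1, 0]}')

print(eye_box_in_frame(face, left_eye, 0))  # (140, 260, 80, 80)
print(eye_box_in_frame(face, left_eye, 1))  # None
```

Returning None for invalid frames keeps the validity check and the coordinate conversion in one place, so downstream cropping code does not need to inspect IsValid again.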

A. Dataset, data collection, data pre-processing

The dataset we chose is GazeCapture (2016), which was crowdsourced with an iPad app. The app did not just rely on people’s honesty but had a built-in mechanism to ensure people were gazing in the right direction. To obtain the data, one needs to register on the portal; a corporate email ID is mandatory for registration. Once registration is done, the dataset can be downloaded through the link provided, which is valid for a limited period. The dataset link is as follows: https://gazecapture.csail.mit.edu/index.php [44].

The challenge we faced is that it is a huge dataset, both in number of frames and in the size of the compressed file. There are 2,445,504 total frames, of which 1,490,959 have complete Apple detections. Downloading the file requires a stable internet connection, and extraction takes several hours. The way the dataset is distributed was also an obstacle for us. As a sample, we took approximately 50,000 images for training. The downloaded data is organised into folders, and each folder contains numbered frames.

As data is huge, in our training model we have only made use of frames where the subject’s device was able to detect both the user’s face and eyes using Apple’s built-in libraries. Some subjects had no frames with face and eye detections at all.
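The frame selection described above can be sketched as a simple filter over the detection flags. This is a minimal illustration that assumes the face and eye records each carry a per-frame IsValid list; the function name `usable_frames` and the sample flags are hypothetical.

```python
def usable_frames(face, left_eye, right_eye):
    """Indices of frames where the face and both eyes were detected."""
    flags = zip(face["IsValid"], left_eye["IsValid"], right_eye["IsValid"])
    return [i for i, (f, l, r) in enumerate(flags) if f and l and r]

# Hypothetical flags for one subject with four frames.
face_rec = {"IsValid": [1, 1, 0, 1]}
left_rec = {"IsValid": [1, 0, 0, 1]}
right_rec = {"IsValid": [1, 1, 0, 1]}

kept = usable_frames(face_rec, left_rec, right_rec)
print(kept)  # [0, 3]
```

A subject with no complete detections simply yields an empty list and drops out of the training sample.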

B. Data Set Results Summary

For training, we took the Euclidean distance as our loss function. After training the model on our clean dataset, we obtained a training loss of 0.4758 and a validation loss of 1.2335. The table below provides details about the dataset used in the analysis.
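As a minimal sketch of a Euclidean distance loss over gaze points, the example below averages the straight-line distance between actual and predicted (x, y) pairs. The function name and the sample points are illustrative assumptions, and the actual training code may compute the loss differently (for example, per batch inside a deep learning framework).

```python
import math

def mean_euclidean_error(y_true, y_pred):
    """Average Euclidean distance between paired (x, y) gaze points."""
    total = 0.0
    for (tx, ty), (px, py) in zip(y_true, y_pred):
        total += math.hypot(px - tx, py - ty)
    return total / len(y_true)

# Hypothetical gaze points: one prediction is off by a 3-4-5 triangle, one is exact.
actual = [(0.0, 0.0), (1.0, 1.0)]
predicted = [(3.0, 4.0), (1.0, 1.0)]
print(mean_euclidean_error(actual, predicted))  # 2.5
```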

TABLE I: DETAILS OF THE DATASET USED IN THE ANALYSIS.

| Analysis | Collection | Number of Images | Characteristics |
|---|---|---|---|
| 1 | GazeCapture | 2327 images are considered for EDA, of which 37 images are not valid | The dataset contains 50000 samples |
| 2 | GazeCapture | 48000 samples for training and 5000 images for evaluation | From the original GazeCapture dataset |

C. EDA - Face, face grid, left and right eye detection

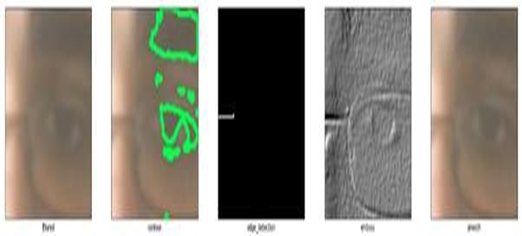

1) Analysis 1: The extracted images were transformed using blurring, contour, edge detection, emboss, and smooth methods.

For our initial model, we extracted both eye regions using the eye areas given in the dataset. Colour distribution graph of left and right eye:

Inference: In both images the intensity of blue is higher, which suggests that in later steps we can mask the eyes by keeping only pixels within a specific colour range. Scaling was performed to make computation more efficient.
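The colour-distribution inference can be illustrated with a minimal sketch that computes the mean intensity per channel and scales pixel values to the [0, 1] range. The pixel values, the RGB channel order, and the function names are assumptions for illustration and are not taken from the dataset.

```python
def channel_means(pixels):
    """Return the mean (red, green, blue) intensity of a list of RGB tuples."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))

def scale(pixels):
    """Scale 8-bit intensities to the [0, 1] range."""
    return [tuple(v / 255 for v in p) for p in pixels]

# Hypothetical eye-crop pixels in RGB order.
eye_pixels = [(10, 20, 200), (30, 40, 100)]
means = channel_means(eye_pixels)
dominant = ("red", "green", "blue")[means.index(max(means))]
print(means, dominant)  # (20.0, 30.0, 150.0) blue
```

Note that OpenCV loads images in BGR order, so the channel indices would need to be swapped if the pixels came from cv2.imread.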

2) Analysis 2: The images were cleaned using the OpenCV library. To detect blurry images, we used the cv2.Laplacian method.
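A common way to turn a Laplacian response into a blur score is to take its variance: sharp images have strong edges and therefore a high variance. The sketch below implements that idea in plain Python on a 2D list of grayscale values so that it runs without dependencies. Using the variance as the score, the threshold of 100, and the function names are assumptions; with OpenCV the same idea is usually written as `cv2.Laplacian(gray, cv2.CV_64F).var()`.

```python
def laplacian_variance(gray):
    """Variance of the 4-neighbour Laplacian over the interior pixels of a 2D list."""
    h, w = len(gray), len(gray[0])
    responses = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            lap = (gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1]
                   - 4 * gray[y][x])
            responses.append(lap)
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)

def is_blurry(gray, threshold=100.0):
    """Flag an image as blurry when its Laplacian variance is below the threshold."""
    return laplacian_variance(gray) < threshold  # threshold is a hypothetical value

flat = [[128] * 5 for _ in range(5)]                                    # no edges at all
checker = [[255 * ((x + y) % 2) for x in range(5)] for y in range(5)]   # strong edges
print(is_blurry(flat))     # True
print(is_blurry(checker))  # False
```

In practice the threshold would be tuned on a few hand-labelled sharp and blurry frames from the sample.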

To detect the dark images, we have used below formula
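One simple criterion of this kind, given here as an assumption rather than as the formula used in the study, is to threshold the mean grayscale intensity of the image. The threshold of 40 and the function names are hypothetical.

```python
def mean_brightness(gray):
    """Mean intensity of a 2D list of grayscale values in the 0-255 range."""
    total = sum(sum(row) for row in gray)
    return total / (len(gray) * len(gray[0]))

def is_dark(gray, threshold=40.0):
    """Flag an image as dark when its mean intensity is below the threshold."""
    return mean_brightness(gray) < threshold  # threshold is a hypothetical value

dim = [[10, 20], [30, 20]]
bright = [[200, 180], [220, 200]]
print(mean_brightness(dim), is_dark(dim))        # 20.0 True
print(mean_brightness(bright), is_dark(bright))  # 200.0 False
```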

D. Gaze Prediction and Evaluation of the Models

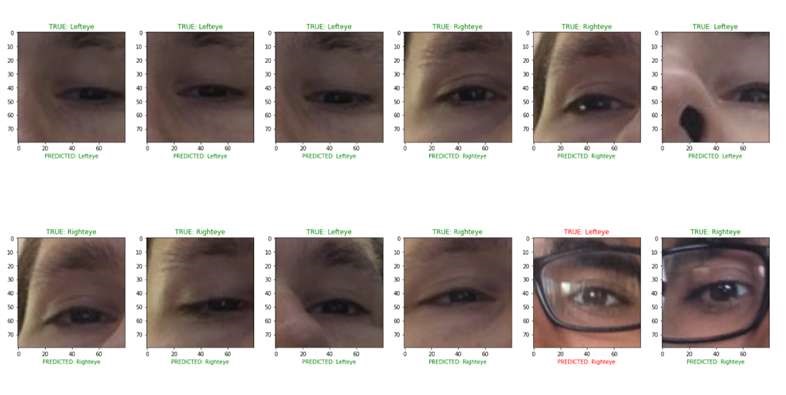

As part of our EDA [8], we created a CNN-based prediction model that checks image quality and detects whether an eye image shows the left or the right eye. This eye detection analysis is performed mainly because it can help in better prediction of the gaze point. Our final model is built to predict the gaze point, and its accuracy is measured as the Euclidean distance between the actual and predicted gaze points.

1) Analysis 1: Our EDA model was built using the dataset to identify the left eye and the right eye. The model achieved an accuracy of 98%, except in extreme cases where the image is dark, too hazy, or only partially visible; in those cases the EDA model is not able to separate the two classes.

Fig. 8: Training and Validation Loss

2) Analysis 2: To prepare the data, we extracted the face, left eye, and right eye from the full image. We also created the face mask from the face coordinates.
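Building a face mask from the face coordinates can be sketched as marking the grid cells that the face bounding box covers. The function below is an illustrative assumption about how such a mask might be computed; the name `face_grid`, the frame size, and the box values are hypothetical, and the study's own preprocessing may differ.

```python
import math

def face_grid(frame_w, frame_h, box, grid=25):
    """Binary grid x grid mask (list of rows) marking cells covered by the face box."""
    x, y, w, h = box
    # Map pixel extents to grid cells and clamp them to the grid bounds.
    x0 = max(0, int(x * grid / frame_w))
    y0 = max(0, int(y * grid / frame_h))
    x1 = min(grid, math.ceil((x + w) * grid / frame_w))
    y1 = min(grid, math.ceil((y + h) * grid / frame_h))
    return [[1 if (x0 <= cx < x1 and y0 <= cy < y1) else 0 for cx in range(grid)]
            for cy in range(grid)]

# Hypothetical 500 x 500 frame with a 200 x 200 face box at (100, 200).
mask = face_grid(500, 500, (100, 200, 200, 200))
print(sum(map(sum, mask)))  # 100 cells: a 10 x 10 block of the 25 x 25 grid
```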

To train the model, we used four input layers: the left eye, the right eye, the face, and the face mask, which marks the area of the face in a 25 × 25 grid. The input shape for both eyes and the face is 64 × 64 × 3. The model architecture follows that of the original paper. We trained the model for 100 epochs with a batch size of 128.
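The training setup above implies some simple arithmetic, worked through in the sketch below. It assumes the 48,000 training samples listed in Table I are the ones used for this model; the dictionary and variable names are illustrative only.

```python
import math

# Input shapes as stated in the text; the face grid is the 25 x 25 mask.
INPUT_SHAPES = {
    "left_eye": (64, 64, 3),
    "right_eye": (64, 64, 3),
    "face": (64, 64, 3),
    "face_grid": (25, 25),
}
TRAIN_SAMPLES = 48_000  # assumed from Table I
BATCH_SIZE = 128
EPOCHS = 100

steps_per_epoch = math.ceil(TRAIN_SAMPLES / BATCH_SIZE)
total_updates = steps_per_epoch * EPOCHS
values_per_sample = sum(math.prod(shape) for shape in INPUT_SHAPES.values())

print(steps_per_epoch, total_updates, values_per_sample)  # 375 37500 37489
```

Each sample therefore carries 3 × 12,288 image values plus 625 grid values, and a full run performs 37,500 weight updates under these assumptions.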

V. RESULTS AND DISCUSSION

The initial step was to identify both eyes and categorise the gaze location of the eyes as valid or invalid. The next step was to build the model that would detect the gazing point, with minimal errors. In the next phases, the attempt was made to develop the model with accuracy while focusing on improvements.

A subset of images from the main dataset was used as a sample for the study. Some images in the dataset are poorly lit, so dark images, as well as blurred frames, were removed to obtain a clean dataset.

Two separate models were tested, one classifying the left and right eye and the other predicting the gaze point. From the two dataset samples used, it is understood that there is still scope for improving the gaze point model with transfer learning.

Analysis 1: Our predictions showed accurate detection of the left eye and right eye, with a training accuracy of 97% and a testing accuracy of 98%, and a training loss of 0.07 and a testing loss of 0.05. Most of the predicted eyes are very close to the actual ones. Some prediction images are shown below.

Analysis 2: The epochs versus loss curve shows that the validation loss has reached a saturation point and the line has become flat. Compared with the training loss, this indicates that our model is slightly overfitted.
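The two observations above, a flat validation curve and a gap between training and validation loss, can be checked numerically. The sketch below uses the reported final losses (0.4758 and 1.2335); the per-epoch validation curve, the window, and the tolerance are hypothetical values chosen only to illustrate the check.

```python
TRAIN_LOSS = 0.4758  # reported training loss
VAL_LOSS = 1.2335    # reported validation loss

gap = VAL_LOSS - TRAIN_LOSS
ratio = VAL_LOSS / TRAIN_LOSS
print(f"gap={gap:.4f}, ratio={ratio:.2f}")  # gap=0.7577, ratio=2.59

def has_plateaued(losses, window=5, tol=0.01):
    """True if the last `window` losses vary by less than `tol`."""
    if len(losses) < window:
        return False
    recent = losses[-window:]
    return max(recent) - min(recent) < tol

# Hypothetical validation curve ending at the reported value.
val_curve = [2.0, 1.6, 1.4, 1.30, 1.24, 1.236, 1.234, 1.2335, 1.2336, 1.2335]
print(has_plateaued(val_curve))  # True
```

A plateaued validation loss combined with a training loss that is still lower is the usual signal for early stopping or stronger regularisation.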

In this work, the model has been developed to determine whether the captured eyes are right or left, as well as the gazing location using CNN. Final model is designed to capture gaze point accuracy, which is expressed in Euclidean distance (the distance between the actual and predicted gaze points). Because of the recent development in deep learning, gaze estimation models based on Convolutional Neural Networks (CNN) are becoming more popular and prevalent [42]. The analysis of latest CNN-based gaze estimation algorithms yielded valuable and practical insights that have aided in the development of more effective and efficient deep learning-based eye-tracking models. Various network architectures have been proposed in the literature. Some researchers suggested networks that just receive eye images, while others proposed networks that accept both eyes and a head posture feature vector. For gaze estimation, recent research integrated full face images with CNN models.

Deep learning’s recent success in computer vision has been seen in a range of areas but its impact on enhancing eye tracking performance has been restricted [33]. Over the last decade, Convolutional Neural Networks have achieved ground-breaking results in a range of pattern recognition disciplines, ranging from image processing to voice recognition.

As long as adequate and favourable conditions exist, the algorithms for eye gaze tracking applications can be used effectively. It is our hope that, by referring to our model, researchers across a range of fields will be able to better leverage gaze as a cue to improve vision-based understanding of human behaviour.

VI. CONCLUSIONS

The major learnings from this process were how to work on a problem from planning to data collection and how to analyse the data in different phases. Handling the large volume of data was also a major challenge for us. We experienced how to approach and make progress on a real-world industry scenario based on our knowledge and study.

The research would not be complete without mentioning the limitations we faced during the process. The model suffers when images are dark, hazy, or blurry; in those cases it is not able to pick the gaze location. It will also be difficult to pick the gaze for people with natural eye conditions such as crossed eyes (strabismus, i.e. misaligned eyes).

One of the challenges of deep learning-based gaze estimation models is the lack of balanced and variable large-scale datasets. The training data from each device should also have a good representation of variability in appearance, head pose, facial expression, illumination, and orientation.

Some datasets do not contain all of the information required to build reliable gaze estimate models. Thirty percent of the frames in the GazeCapture dataset were incorrectly collected; they lack both face and eye detections [41].

Every research analysis has constraints, which serve as the foundation for future research. The performance of the various pre-trained models varies depending on the gaze estimation task. The accuracy of gaze estimation algorithms is significantly affected by the size of the training dataset. When trained on a large amount of data, CNN models generally provide very good results. The images in the dataset show different head poses and facial expressions [43].

One of the objectives of this research is to offer the research community a thorough reference point for improving eye gaze estimation algorithms. This research provides a glimpse of prospective paths to overcome the limitations by utilising deep learning architectures that have not yet been widely used for gaze tracking. AlexNet, VGG-16, LeNet, ResNet, and SqueezeNet are among the most popular network architectures that can be fine-tuned for gaze estimation. The results of this study will provide a valuable baseline for other researchers to develop and improve gaze estimation techniques.

REFERENCES

- A. Păsărică, R. G. Bozomitu, V. Cehan, and R. G. Lupu, “Pupil Detection Algorithms for Eye Tracking Applications,” no. January 2018, 2015, doi: 10.1109/SIITME.2015.7342317.

- V. Clay, P. König, and S. König, “Eye Tracking in Virtual Reality,” no. April, 2019, doi: 10.16910/jemr.12.1.3.

- I. Rakhmatulin and A. T. Duchowski, “Deep neural networks for low-cost eye tracking,” Procedia Comput. Sci., vol. 176, no. October, pp. 685–694, 2020, doi: 10.1016/j.procs.2020.09.041.

- J. Griffin and A. Ramirez, “Convolutional Neural Networks for Eye Tracking Algorithm,” arXiv Prepr. arXiv1612.04335, 2018, Online. Available: http://stanford.edu/class/ee267/Spring2018/report griffin ramirez.pdf.

- B. Brousseau and J. Rose, “Hybrid Eye-Tracking on a Smartphone with CNN Feature Extraction and an Infrared 3D Model,” pp. 1–21, 2020, doi: 10.3390/s20020543.

- W. Fuhl, T. Santini, G. Kasneci, and W. Rosenstiel, “based real time Robust Pupil Detection.”

- D. Cazzato, M. Leo, C. Distante, and H. Voos, “When I Look into Your Eyes: A Survey on Computer Vision Contributions for Human Gaze Estimation and Tracking,” 2020, doi: 10.3390/s20133739.

- M. Kaczorowska, “Interpretable Machine Learning Models for Three-Way Classification of Cognitive Workload Levels for Eye-Tracking Features,” Brain Sciences, 2021.

- R. G. Bozomitu, P. Alexandru, T. Daniela, and C. Rotariu, “for Real-Time Applications,” pp. 20–23, 2019.

- I. Ramos, “Analysis of the Learning Process through Eye Tracking Technology and Feature Selection Techniques,” Applied Sciences, 2021.

- S. Park, A. Spurr, and O. Hilliges, “Deep Pictorial Gaze Estimation,” no. August 2019, 2018, doi: 10.1007/978-3-030-01261-8.

- W. Ou, T. Kuo, C. Chang, and C. Fan, “Deep-Learning-Based Pupil Center Detection and Tracking Technology for Visible-Light Wearable Gaze Tracking Devices,” Applied Sciences, 2021.

- M. Horng, H. Kung, and C. Chen, “Deep Learning Applications with Practical Measured Results in Electronics Industries,” no. 2, pp. 1–8, 2020.

- N. Darapaneni, B. Krishnamurthy, and A. R. Paduri, “Convolution Neural Networks: A Comparative Study for Image Classification,” in 2020 IEEE 15th International Conference on Industrial and Information Systems (ICIIS), 2020, pp. 327–332.

- D. Martinez-Marquez, S. Pingali, K. Panuwatwanich, and R. A. Stewart, “Application of Eye Tracking Technology in Aviation, Maritime, and Construction Industries: A Systematic Review,” pp. 1–40, 2021.

- K. Raju, “Eye Gaze Tracking With a Web Camera in a Desktop Environment,” vol. 03, no. 13, pp. 1230–1235, 2016.

- M. R. Al-hameed and S. K. Guirguis, “Survey of Eye Tracking Methods and Gaze Techniques,” vol. 9, no. 7, pp. 363–368, 2020, doi: 10.21275/SR20625194329.

- J. Hu, Y. Xing, L. Liu, X. Zhang, Q. Li, and J. Chi, “Survey on Key Technologies of Eye Gaze Tracking,” no. Aice, 2016.

- S. Eraslan, Y. Yesilada, and S. Harper, “Eye Tracking Scanpath Analysis on Web Pages: How Many Users?,” 2016, doi: 10.1145/2857491.2857519.

- E. G. Moreno-Esteva, S. L. J. White, J. M. Wood, and A. A. Blac, “Application of mathematical and machine learning techniques to analyse eye tracking data enabling better understanding of children’s visual cognitive behaviours,” vol. 6, no. 3, pp. 72–84, 2018.

- N. Darapaneni et al., “Automatic face detection and recognition for attendance maintenance,” in 2020 IEEE 15th International Conference on Industrial and Information Systems (ICIIS), 2020, pp. 236–241.

- L. Antonioli et al., “Convolutional Neural Networks Cascade for Automatic Pupil and Iris Detection in Ocular Proton Therapy †,” pp. 1–14, 2021.

- C. Meng and X. Zhao, “Webcam-Based Eye Movement Analysis Using CNN,” vol. 5, pp. 19581–19587, 2017.

- H. Chen, B. Hwang, J. Wu, and P. Liu, “The Effect of Different Deep Network Architectures upon CNN-Based Gaze Tracking,” Algorithms, 2020.

- A. K. Chaudhary et al., “RITnet: Real-time Semantic Segmentation of the Eye for Gaze Tracking,” pp. 1–5.

- E. Lind, J. Sj, and A. Proutiere, “Learning to Personalize in Appearance-Based Gaze Tracking.”

- A. H. A. Mezher, “Gaze Tracking for Emotional Detection,” doctoral thesis, supervisor: A. Fazekas, 2020.

- R. Kothari, A. K. Chaudhary, R. J. Bailey, J. B. Pelz, and G. J. Diaz, “EllSeg: An Ellipse Segmentation Framework for Robust Gaze Tracking.”

- M. Q. Khan and S. Lee, “Gaze and Eye Tracking: Techniques and Applications in ADAS,” 2019, doi: 10.3390/s19245540.

- N. Darapaneni et al., “Activity & emotion detection of recognized kids in CCTV video for day care using SlowFast & CNN,” in 2021 IEEE World AI IoT Congress (AIIoT), 2021, pp. 0268–0274.

- R. S. Parte, G. Mundkar, N. Karande, S. Nain, and N. Bhosale, “A Survey on Eye Tracking and Detection,” pp. 9863–9867, 2015, doi: 10.15680/IJIRSET.2015.0410076.

- L. Herman, “Eye-tracking Analysis of Interactive 3D Geovisualization,” vol. 10, no. 3, pp. 1–15, 2017.

- A. Khan, A. Sohail, U. Zahoora, and A. S. Qureshi, “A survey of the recent architectures of deep convolutional neural networks,” Artif. Intell. Rev., vol. 53, no. 8, pp. 5455–5516, 2020, doi: 10.1007/s10462-020-09825-6.

- A. F. Klaib, N. O. Alsrehin, W. Y. Melhem, H. O. Bashtawi, and A. A. Magableh, “Eye tracking algorithms, techniques, tools, and applications with an emphasis on machine learning and Internet of Things technologies,” Expert Syst. Appl., vol. 166, no. March, p. 114037, 2021, doi: 10.1016/j.eswa.2020.114037.

- M. Amanullah and R. Lavanya, “Accident Prevention By Eye-Gaze Tracking Using Imaging Constraints,” vol. 9, no. 02, pp. 1731–1735, 2020.

- Z. Zhan, L. Zhang, H. Mei, and P. S. W. Fong, “Online learners’ reading ability detection based on eye-tracking sensors,” Sensors (Switzerland), vol. 16, no. 9, 2016, doi: 10.3390/s16091457.

- T. P. Nguyen, M. T. Chew, and S. Demidenko, “Eye tracking system to detect driver drowsiness,” ICARA 2015 - Proc. 2015 6th Int. Conf. Autom. Robot. Appl., no. April, pp. 472–477, 2015, doi: 10.1109/ICARA.2015.7081194.

- C. E. Neuert and T. Lenzner, “Incorporating eye tracking into cognitive interviewing to pretest survey questions,” Int. J. Soc. Res. Methodol., vol. 19, no. 5, pp. 501–519, 2016, doi: 10.1080/13645579.2015.1049448.

- J. Salminen, “Confusion Prediction from Eye-Tracking Data: Experiments with Machine Learning,” no. November, 2019, doi: 10.1145/3361570.3361577.

- N. Darapaneni, R. Choubey, P. Salvi, A. Pathak, S. Suryavanshi, and A. R. Paduri, “Facial expression recognition and recommendations using deep neural network with transfer learning,” in 2020 11th IEEE Annual Ubiquitous Computing, Electronics & Mobile Communication Conference (UEMCON), 2020, pp. 0668–0673.

- K. Krafka et al., “Eye Tracking for Everyone,” Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit., vol. 2016-December, pp. 2176–2184, 2016, doi: 10.1109/CVPR.2016.239.

- P. Kanade, F. David, and S. Kanade, “Convolutional Neural Networks(CNN) based Eye-Gaze Tracking System using Machine Learning Algorithm,” Eur. J. Electr. Eng. Comput. Sci., vol. 5, no. 2, pp. 36–40, 2021, doi: 10.24018/ejece.2021.5.2.314.

- A. Akinyelu and P. Blignaut, “Convolutional Neural Network-Based Methods for Eye Gaze Estimation: A Survey,” IEEE Access, vol. 8, pp. 142581–142605, 2020, doi: 10.1109/ACCESS.2020.3013540.

- [44] https://gazecapture.csail.mit.edu/index.php

- M. Barz and D. Sonntag, “Automatic Visual Attention Detection for Mobile Eye Tracking Using Pre-Trained Computer Vision Models and Human Gaze,” pp. 1–21, 2021.

- K. Sharma, M. Giannakos, and P. Dillenbourg, “Eye-tracking and artificial intelligence to enhance motivation and learning,” 2020.

- A. Vasseur, P.-M. Léger, and S. Sénécal, “Eye-tracking for IS Research: A Literature Review,” 2019 Pre-ICIS Work., no. March 2020, pp. 13–18, 2019.

- M. Komorowski, D. C. Marshall, J. D. Salciccioli, and Y. Crutain, “Secondary Analysis of Electronic Health Records,” Second. Anal. Electron. Heal. Rec., no. October, pp. 1–427, 2016, doi: 10.1007/978-3-319-43742-2.