Early Detection of Skin Cancer - Solution for Identifying and Defining Skin Cancers using AI

Skin cancer is seen as one of the most common and hazardous forms of cancer in the world. Each year, more than 10 million new cases of skin cancer are recorded globally, an alarming number. The survival rate is very low if the disease is diagnosed in its later stages. Artificial Intelligence (AI) can play a very important role in detecting this disease in its early stages through medical image diagnosis. However, AI systems for the classification of different skin lesions are still in the very early stages of clinical application in terms of being ready to aid in the diagnosis of skin cancers. Moreover, not many players are doing research in this direction for conditions specific to the Indian subcontinent. The present paper focuses on advances in AI solutions in digital-image-based computer vision for the diagnosis of skin cancer. Some of the challenges and future opportunities for improving the ability to diagnose skin cancer in its early stages are also discussed. Using the HAIS AI tool, we present a computer-aided method that uses computer vision and image analysis algorithms for skin cancer diagnosis with improved accuracy. Our solution is focused on the Indian subcontinent and envisions catering to varied business needs, providing flexibility in its adoption and use.

I. INTRODUCTION

Cancer is the out-of-control growth of abnormal cells. Skin cancer manifests as such growth in the epidermis, caused by unrepaired DNA damage that triggers mutations. These mutations lead the skin cells to multiply rapidly and form malignant tumours. The main types of skin cancer are Merkel cell carcinoma (MCC), melanoma, squamous cell carcinoma (SCC), and basal cell carcinoma (BCC). Very high exposure to the sun's harmful ultraviolet (UV) rays and the use of UV tanning beds [3] are the two main factors that increase the likelihood of developing skin cancer. Fortunately, if skin cancer is caught early, it can be treated with little or no scarring, and it may even be eliminated entirely. The doctor may even detect the growth before it has become a full-blown skin cancer or penetrated below the surface of the skin.

During a skin cancer screening, a dermatologist uses a dermatoscope to check the patient's moles and reviews the patient's medical history. Skin biopsy is the main test needed to diagnose skin cancer and determine its extent. [1] A biopsy involves removing either a small amount of tissue from the suspected skin lesion or the entire suspicious mole and examining it under a microscope to determine whether it is cancerous. However, it has been reported that even a trained dermatologist has an accuracy of less than 80% in correctly diagnosing skin cancer. [4] In addition to this inaccuracy, there are not many skilled dermatologists available globally in public healthcare. [5]

The potential of artificial intelligence (AI) to improve skin cancer detection has led to an increasing application of AI techniques such as deep learning, computer vision [18], natural language processing, and robotics. However, the use of AI algorithms in the Indian healthcare context is limited to certain specialised treatments, and we therefore see a huge prospect for technology adoption, especially in the early detection of skin cancer in patients. Since the cost of diagnosis is very high, an image-based predictive model will be very useful for making the initial prediction and indicating when expensive, specialised treatment needs to be sought. A solution that saves time and reduces latency will best address the problem. Since more than 60% of the diagnosis is based purely on pathological study, computer vision and convolutional neural networks may also be used for processing images of samples. The use of machine learning in general, and deep learning in particular, within healthcare is still in its infancy, but there are several strong initiatives across academia, and multiple large companies are pursuing healthcare projects based on machine learning. Not only medical technology companies but also technology giants like Google Brain [6], DeepMind [7], Microsoft [8] [25], and IBM [10] are working in this direction. There is also a plethora of small and medium-sized businesses coming forward in this field.

Deep learning became an important tool in skin cancer imaging with the advent of convolutional neural networks (CNNs), a powerful way to learn useful representations of images and other structured data. Because CNNs made it possible to use features learned directly from the data, many handcrafted image features were rendered obsolete, as they turned out to be less effective than the feature detectors found by CNNs.

As a result, the computer vision approach is now widely used for skin cancer detection. For segmentation of the skin lesion in the input image, existing systems use manual, semi-automatic, or fully automatic border detection methods. Shape, colour, texture, and luminance are the features used to perform skin lesion segmentation in various papers. Histogram thresholding, global thresholding on optimised colour channels followed by morphological operations, and hybrid thresholding are also used as methods to delineate the lesion. Different image processing techniques have been used to extract such features, like the ABCD rule of dermoscopy, in which asymmetry is given the most prominence among the four features of asymmetry, border irregularity, colour, and diameter.
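To make the idea of global histogram thresholding concrete, the following minimal sketch segments a grayscale image with Otsu's method. Otsu's method is chosen here only as one well-known way to pick a global threshold; the cited systems may use other rules. The function names `otsu_threshold` and `segment_lesion`, and the assumption that the lesion is darker than the surrounding skin, are illustrative and not taken from any referenced work.

```python
def otsu_threshold(pixels, levels=256):
    """Return the grey level that maximises between-class variance (Otsu's method)."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w_bg, sum_bg = 0, 0
    for t in range(levels):
        w_bg += hist[t]              # pixels with value <= t
        if w_bg == 0:
            continue
        w_fg = total - w_bg          # pixels with value > t
        if w_fg == 0:
            break
        sum_bg += t * hist[t]
        mean_bg = sum_bg / w_bg
        mean_fg = (sum_all - sum_bg) / w_fg
        var_between = w_bg * w_fg * (mean_bg - mean_fg) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def segment_lesion(image):
    """Binary mask: 1 where a pixel is at or below the threshold (assumed lesion)."""
    flat = [p for row in image for p in row]
    t = otsu_threshold(flat)
    return [[1 if p <= t else 0 for p in row] for row in image]
```

In practice the mask would usually be cleaned up with morphological operations, as mentioned above; that step is omitted here for brevity.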

II. CNN Methods

In simple terms, a Convolutional Neural Network (ConvNet/CNN) is a deep learning algorithm that can take in an input skin image, assign importance (learnable weights and biases) to various aspects/objects in the image, and differentiate one from the other. [11] A CNN has multiple layers of convolutions and activations, often with pooling layers interspersed between them. The network is trained using backpropagation and gradient descent, as for standard artificial neural networks. CNNs typically have fully connected layers at the end, from which the final outputs are computed.

A. Convolutional layers:

In the convolutional layers, the activations from the previous layers (initially the input skin image) are convolved with a set of small parameterized filters, collected in a tensor W(j,i), where j is the filter number and i is the layer number. Because each filter shares the exact same weights across the whole input, there is a drastic reduction in the number of weights that need to be learned. The motivation for this weight sharing is that features appearing in one part of the image likely also appear in other parts. [9] Applying all the convolutional filters at all locations produces a tensor of feature maps.
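The weight sharing described above can be illustrated with a small sketch. It assumes a single-channel image stored as nested lists, no padding, and a stride of 1; `conv2d` and `conv_layer` are hypothetical helper names. As in most deep learning libraries, the operation is implemented as cross-correlation (the kernel is not flipped).

```python
def conv2d(image, kernel):
    """Slide one small filter over the whole image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            # The same kernel weights are reused at every location (r, c).
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def conv_layer(image, filters):
    """Apply every filter in the layer; the result is a stack of feature maps."""
    return [conv2d(image, kernel) for kernel in filters]
```

A 2 x 2 filter has only four weights regardless of the image size, which is the reduction in learned parameters referred to in the text.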

B. Activation layer:

The feature maps produced in the previous step are fed through nonlinear activation functions. This makes it possible for the entire neural network to approximate almost any nonlinear function [12], which is what allows it to detect skin cancer. The activation functions are generally the very simple rectified linear units, or ReLUs, defined as ReLU(z) = max(0, z), or variants like leaky ReLUs or parametric ReLUs. Feeding the feature maps through an activation function produces new tensors, typically also called feature maps.
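As a minimal sketch of the definition ReLU(z) = max(0, z) and its leaky variant, the functions below apply an activation element-wise to a feature map. The slope `alpha=0.01` is a commonly used default chosen for illustration, and `apply_activation` is a hypothetical helper name.

```python
def relu(z):
    """Rectified linear unit: ReLU(z) = max(0, z)."""
    return max(0.0, z)


def leaky_relu(z, alpha=0.01):
    """Leaky ReLU: negative inputs are scaled by a small slope instead of zeroed."""
    return z if z > 0 else alpha * z


def apply_activation(feature_map, fn=relu):
    """Apply an activation element-wise; the output is again a feature map."""
    return [[fn(v) for v in row] for row in feature_map]
```

In a parametric ReLU, the slope `alpha` would be learned during training rather than fixed.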

C. Pooling:

Each feature map produced by feeding the data through one or more convolutional layers is then typically pooled in a pooling layer. Pooling operations take small grid regions as input and produce a single number for each region. The number is usually computed using the max function (max pooling) or the average function (average pooling). Since a small shift of the input image results in small changes in the activation maps, the pooling layers give the CNN some translational invariance [15].
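The pooling operation can be sketched as follows, assuming non-overlapping square regions (a window of `size` with a stride equal to `size`). The names `pool2d` and `mean` are illustrative.

```python
def mean(values):
    return sum(values) / len(values)


def pool2d(feature_map, size=2, reduce=max):
    """Reduce each non-overlapping size x size region to a single number."""
    rows, cols = len(feature_map), len(feature_map[0])
    return [
        [
            reduce([feature_map[r + i][c + j]
                    for i in range(size) for j in range(size)])
            for c in range(0, cols - size + 1, size)
        ]
        for r in range(0, rows - size + 1, size)
    ]
```

Calling `pool2d(fm)` gives max pooling and `pool2d(fm, reduce=mean)` gives average pooling; a 4 x 4 feature map becomes 2 x 2 in both cases.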

Removing the pooling layers can simplify the network architecture without necessarily sacrificing performance.

D. Dropout regularization:

Dropout is an averaging technique based on stochastic sampling of neural networks. Neurons are randomly removed during training, which results in slightly different networks for each batch of training data. The weights of the trained network are thus tuned based on the optimization of multiple variations of the network [18].
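A minimal sketch of dropout on a vector of activations is given below. It uses the "inverted dropout" convention, in which surviving activations are rescaled during training so that no change is needed at inference time; this convention and the drop probability `p_drop=0.5` are assumptions made for illustration.

```python
import random


def dropout(activations, p_drop=0.5, training=True, rng=random):
    """Randomly zero activations during training (inverted dropout)."""
    if not training or p_drop == 0.0:
        return list(activations)     # inference: the full network is used
    keep = 1.0 - p_drop
    # Each neuron is kept with probability `keep`; survivors are scaled by
    # 1/keep so that the expected activation is unchanged.
    return [a / keep if rng.random() < keep else 0.0 for a in activations]
```

Because a fresh random mask is drawn on every call, each training batch effectively sees a slightly different network.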

E. Batch normalization:

Batch normalization layers are typically placed after activation layers, producing normalized activation maps. Including normalization forces the network to periodically rescale its activations to zero mean and unit standard deviation as each training batch passes through these layers, which speeds up training. It also makes the network less dependent on careful parameter initialization. [13]
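The normalization step can be sketched for a single activation observed across one training batch. The learnable scale `gamma` and shift `beta` follow the formulation in [13]; the small constant `eps` avoids division by zero. The running statistics used at inference time are omitted from this sketch.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one activation across a batch to zero mean and unit variance."""
    n = len(batch)
    mean = sum(batch) / n
    var = sum((x - mean) ** 2 for x in batch) / n
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]
```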

III. HAIS SOLUTION

HAIS (Health Care AI Solutions) is in the business of providing intelligence and solutions to businesses that can save time and cost using transformational technology. We are a team of people who believe that AI, by combining technology and domain expertise, can bring a world of change to various fields. We plan to venture into the untapped potential of various business domains, including healthcare, where artificial intelligence can be used to resolve various health care problems.

A. HAIS IT Platform for Early Detection of Skin Cancer

Our solution is focused on precise early detection of skin cancer for the Indian subcontinent and envisions catering to varied business needs, providing flexibility in its adoption, for example:

- Embed AIML capabilities within existing hospital solution,

- Deploy HAIS IT platform as a separate solution,

- Provide mobile interface to enable remote medical counselling.

The steps followed are:

- Wide-field images of large sections of skin are acquired in the primary-care setting using imaging devices, scanners, cameras, etc.

- Pigmented skin lesions are detected, extracted, and analyzed by an automated system.

- A pre-trained Deep Convolutional Neural Network (DCNN) determines the suspiciousness of individual pigmented lesions and color maps them (green = safe, yellow = consider further inspection, red = requires further inspection or referral to dermatologist).

- The pigmented lesions are then assessed further.
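The color-mapping step above can be sketched as a simple rule on the suspiciousness score returned by the DCNN. The score range of 0 to 1 and the two cut-off values are hypothetical placeholders; actual thresholds would have to be set and validated clinically.

```python
def triage_color(score, review_threshold=0.3, referral_threshold=0.7):
    """Map a lesion suspiciousness score in [0, 1] to a triage color.

    Both thresholds are illustrative assumptions, not validated values.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must lie in [0, 1]")
    if score >= referral_threshold:
        return "red"      # requires further inspection or referral
    if score >= review_threshold:
        return "yellow"   # consider further inspection
    return "green"        # safe
```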

The DCNN AI algorithm will be trained on a powerful computer, and the trained model will be deployed on the HAIS IT Platform.

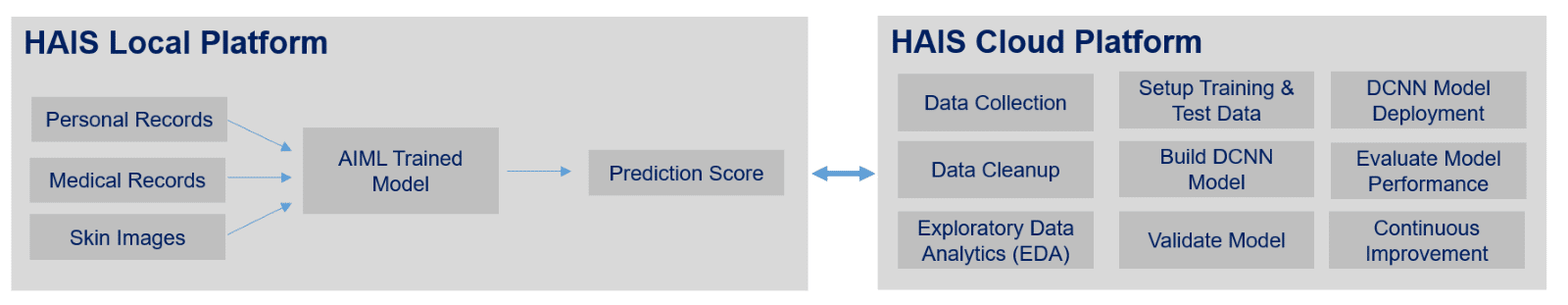

C. HAIS Solution Architecture

When new images are collected on the HAIS IT Platform, the model classifies the new data and produces its prediction.

B. HAIS Process Flow for interaction between User and Application

The step wise process flow is as below:

- Patient visits the hospital

- Patient profile is created along with past medical reports and records

- Doctor captures patient’s skin image

- Skin images are uploaded on HAIS IT platform

- HAIS IT platform reads medical reports via a robotic process automation module and associates these additional details with the images as metadata

- Transformed images are fed into the AIML image processing module

- AIML image processing module returns the outcome of the skin cancer prediction, along with risk levels

- Doctor analyses the outcome to provide more accurate feedback to the patient

The various layers function as below:

1. Data Collection Layer: This will be the entry point of the HAIS IT solution, wherein hospitals and doctors can submit pathological reports, skin images, medical reports, etc. using medical or handheld devices.

2. Data Pre-processing Engine: After collecting unstructured data from the data collection layer, this module will convert it to the structured dataset required for further processing. Irrelevant and redundant data that can affect the accuracy of the dataset will also be removed. The unstructured data may be in one of the following formats:

- Text documents, PDFs

- Wide-field images

- Clinical trial data

3. AIML Image Processing (DCNN Algorithm): The HAIS solution will leverage deep CNN techniques, which are proven in image classification. However, applying high-capacity deep CNNs to the analysis of suspicious pigmented lesions (SPLs) has always been a challenge because of the scarcity of labelled data. To overcome this, the HAIS solution includes two primary methods:

- First, using a deep CNN and data augmentation, a classification model will be prepared to improve the performance of skin lesion classification.

- Second, image data augmentation will be used to alleviate the problem of limited data. The influence of different numbers of augmented samples on the performance of different classifiers will be examined.
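As an illustration of image data augmentation, the sketch below generates flipped and rotated copies of an image stored as nested lists. The particular set of transforms is an assumption made for this example; the text does not specify which augmentations the HAIS solution will use, and all function names are hypothetical.

```python
def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def flip_vertical(image):
    """Mirror the image top to bottom."""
    return [list(row) for row in image[::-1]]


def rotate90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def augment(image):
    """Return the original plus five label-preserving variants."""
    variants = [image, flip_horizontal(image), flip_vertical(image)]
    rotated = image
    for _ in range(3):               # 90, 180 and 270 degree rotations
        rotated = rotate90(rotated)
        variants.append(rotated)
    return variants
```

Varying how many of these variants are added per original image is one simple way to study the influence of the number of augmented samples on classifier performance.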

Our HAIS solution is going to be built on an extensive study conducted with our 20+ certified dermatologists for the classification and differentiation of skin cancers. Three main types of imaging modality will be used for skin lesion classification and diagnosis in our solution: clinical images, dermoscopic images, and histopathology images. Publicly available skin lesion datasets have been analyzed for the artificial intelligence solution related to each type of imaging modality, such as:

- International Skin Imaging Collaboration (ISIC) Archive - consists of clinical and dermoscopic skin lesion global datasets

- Interactive Atlas of Dermoscopy - has 1000 clinical cases, each with at least two images: dermoscopic, and close-up clinical

- Asan Dataset - collection of 17,125 clinical images of 12 types of skin diseases found in Asian people

- Cancer Genome Atlas - one of the largest collections of pathological skin lesion slides with 2871 cases[14, 23]

The available classification systems have been evaluated using a large public skin lesion dataset containing approximately 11,000 testing images and 24,000 training images, and have obtained an AUC of around 89.1%. Our plan is to further refine the DCNN model to enhance its effectiveness. The AI algorithm will be trained on the AWS SageMaker cloud platform, and the trained model will be deployed on the HAIS IT Platform.

| Skin Cancer Classes | No. of Images | Evaluation Metric | Algorithm Score |
|---|---|---|---|
| mel, and nv | 1500 | Avg. Precision | 0.637 |
| mel, nv, and sk | 2800 | AUC | 0.874 |
| akiec, bcc, bkl, df, mel, nv, and vasc | 12500 | Balanced Multi-class Accuracy | 0.885 |
| akiec, bcc, bkl, df, mel, nv, scc, and vasc | 35000 | Balanced Multi-class Accuracy | 0.891 |

Abbreviations - akiec is Actinic Keratosis, bcc is Basal Cell Carcinoma, bkl is Benign Keratosis, df is Dermatofibroma, mel is melanoma, nv is Nevi, sk is Seborrheic Keratosis, scc is Squamous Cell Carcinoma, and vasc is Vascular Lesion [16]
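For reference, balanced multi-class accuracy is the mean of the per-class recalls, so that rare classes such as mel count as much as common ones such as nv. The sketch below shows the computation; the function name and the toy labels in the usage note are illustrative only and are not results from the table.

```python
from collections import defaultdict


def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall over all classes present in y_true."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred in zip(y_true, y_pred):
        total[truth] += 1
        if truth == pred:
            correct[truth] += 1
    return sum(correct[c] / total[c] for c in total) / len(total)
```

For example, with eight nv images all classified correctly and two mel images of which one is missed, plain accuracy is 0.9 but balanced accuracy is (1.0 + 0.5) / 2 = 0.75.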

4. Visualization and Dashboard:

This visualization layer will provide a user-friendly dashboard view of the DCNN prediction model, indicating the malignant or benign nature of the subject under observation to end users, viz. doctors, patients, etc.

IV. CONCLUSION AND FUTURE DIRECTIONS

In this paper we have discussed a computer-aided diagnosis system for skin cancer. It can be concluded from the results that the proposed system can be effectively used by dermatologists to diagnose skin cancer more accurately. The tool is particularly useful for patients in the Indian subcontinent. It is designed to be robust and user friendly so as to serve the purpose of faster skin cancer diagnostics.

HAIS plans to provide advanced technology solutions in Indian healthcare, where artificial intelligence is used to resolve various health care problems. As a company, HAIS focuses on enabling early disease diagnosis so that people can lead healthy lives. Further, HAIS plans to create a digital platform of medical health records for every Indian citizen that works as a repository of patient health condition records and can be used as a resource for further research and analysis. As a company, we would like to be an integrated solution provider for the healthcare industry, where analytics is the key.

V. APPENDIX

Appendix A: Process Flow for Interaction between users and the application (Refer to Fig.3 above).

Appendix B: Business Plan

| Factors | HAIS - IB | HAIS - LB | HAIS - SS |
|---|---|---|---|
| Group | Hospitals | Labs | Skin specialist |
| Plan | Fixed | Fixed | Pay as you use |
| License | 3 | 1 | 1 |
| Registration | User ID | User ID | User ID |
| Annual fee | 12,00,000 | 4,00,000 | NA |
| Monthly fee | - | - | 5,000 |
| Max. no. of free reviews | 800 | 200 | NA |
| Additional fee per review | 2,000 | 3,000 | 4,000 |
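As a rough illustration of how the plans in the table might translate into a yearly charge, the sketch below adds the fixed fee to a per-review charge for reviews beyond the free allowance. This billing rule is an assumption inferred from the table, not a stated policy; in particular, the HAIS - SS plan is assumed to pay twelve monthly fees and to have no free reviews. The names `PLANS` and `annual_charge` are hypothetical.

```python
# Figures copied from the business plan table; the billing logic is assumed.
PLANS = {
    "HAIS-IB": {"fixed_annual": 1_200_000, "free_reviews": 800, "per_review": 2_000},
    "HAIS-LB": {"fixed_annual": 400_000, "free_reviews": 200, "per_review": 3_000},
    # Pay as you use: 12 monthly fees of 5,000, no free reviews (assumption).
    "HAIS-SS": {"fixed_annual": 12 * 5_000, "free_reviews": 0, "per_review": 4_000},
}


def annual_charge(plan, reviews):
    """Fixed yearly fee plus the charge for reviews beyond the free allowance."""
    p = PLANS[plan]
    billable = max(0, reviews - p["free_reviews"])
    return p["fixed_annual"] + billable * p["per_review"]
```

Under these assumptions, a hospital on HAIS - IB submitting 1,000 reviews in a year would pay 12,00,000 + 200 x 2,000 = 16,00,000.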

Appendix C: Application development and maintenance cost

(Unit = No. x 12 months). Note: All values in INR

| Cost Centre | Cost | Unit | Total |
|---|---|---|---|
| Development cost | | | |
| Manpower | | | |
| SME | 4,00,000 | 24 | 96,00,000 |
| Dermatologist | 1,00,000 | 240 | 2,40,00,000 |
| Data engineers | 1,00,000 | 72 | 72,00,000 |
| Data scientist | 2,00,000 | 24 | 48,00,000 |
| RPA & Data developer | 1,50,000 | 24 | 36,00,000 |
| Devops | 2,00,000 | 24 | 48,00,000 |
| Business head | 5,00,000 | 12 | 60,00,000 |
| Model Test SME | 1,00,000 | 36 | 36,00,000 |
| Visualization SME | 1,00,000 | 12 | 12,00,000 |
| Data cost | | | |
| Data cost | 20,00,000 | 1 | 20,00,000 |
| Sub Total A | | | 6,68,00,000 |
| Infrastructure | | | |
| Hardware cost | | | |
| Desktops/PCs | 1,00,000 | 30 | 30,00,000 |
| Printers | 50,000 | 3 | 1,50,000 |
| Dermoscopic Scanners | 3,00,000 | 2 | 6,00,000 |
| Local server (development) | 1,50,000 | 1 | 1,50,000 |
| Cloud infra | | | |
| Cloud Container and IP (EC2) | 50,000 | 6 | 3,00,000 |
| Data Storage (Aurora, S3) | 40,000 | 6 | 2,40,000 |
| SageMaker | 25,000 | 6 | 1,50,000 |
| AWS Lambda Functions | 15,000 | 10 | 1,50,000 |
| Security Services (Macie) | 20,000 | 6 | 1,20,000 |
| Software cost (Licence) | 50,000 | 30 | 15,00,000 |
| Sub Total B | | | 63,60,000 |
| Administrative | | | |
| Management Cost | 5,00,000 | 12 | 60,00,000 |
| Training & Support | 2,00,000 | 12 | 24,00,000 |
| Implementation cost | 10,00,000 | 1 | 10,00,000 |
| Marketing cost | 1,00,00,000 | 1 | 1,00,00,000 |
| Miscellaneous cost | 5,00,000 | 12 | 60,00,000 |
| Sub Total C | | | 2,54,00,000 |
| Total | | | 9,85,60,000 |
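The sub-totals and the grand total in the table can be cross-checked with a short script. Each entry is the (cost, unit) pair copied from the table, and a line total is simply cost x unit; the variable and function names are illustrative.

```python
# (cost in INR, units) for each line item, copied from the table above.
DEVELOPMENT = {
    "SME": (400_000, 24), "Dermatologist": (100_000, 240),
    "Data engineers": (100_000, 72), "Data scientist": (200_000, 24),
    "RPA & Data developer": (150_000, 24), "Devops": (200_000, 24),
    "Business head": (500_000, 12), "Model Test SME": (100_000, 36),
    "Visualization SME": (100_000, 12), "Data cost": (2_000_000, 1),
}
INFRASTRUCTURE = {
    "Desktops/PCs": (100_000, 30), "Printers": (50_000, 3),
    "Dermoscopic Scanners": (300_000, 2), "Local server": (150_000, 1),
    "EC2": (50_000, 6), "Data Storage": (40_000, 6), "SageMaker": (25_000, 6),
    "AWS Lambda": (15_000, 10), "Macie": (20_000, 6), "Software": (50_000, 30),
}
ADMINISTRATIVE = {
    "Management": (500_000, 12), "Training & Support": (200_000, 12),
    "Implementation": (1_000_000, 1), "Marketing": (10_000_000, 1),
    "Miscellaneous": (500_000, 12),
}


def subtotal(items):
    """Sum of cost x unit over all line items in a section."""
    return sum(cost * units for cost, units in items.values())


sub_total_a = subtotal(DEVELOPMENT)      # 6,68,00,000
sub_total_b = subtotal(INFRASTRUCTURE)   # 63,60,000
sub_total_c = subtotal(ADMINISTRATIVE)   # 2,54,00,000
grand_total = sub_total_a + sub_total_b + sub_total_c   # 9,85,60,000
```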

VI. REFERENCES

- S. Jain, V. Jagtap, and N. Pise, “Computer aided melanoma skin cancer detection using image processing,” Procedia Comput. Sci., vol. 48, pp. 735–740, 2015.

- Mahbod A, Schaefer C, Ellinger I, Ecker R, Pitiot A, Wang C. Fusing fine-tuned deep features for skin lesion classification. Jan 2019.

- Deborah S. Sarnoff, MD., Last updated: January 2021, Skin Cancer 101.

- Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, et al. Dermatologist-level classification of skin cancer with deep neural networks.

- Haenssle HA, Fink C, Schneiderbauer R, Toberer F, Buhl T, Blum A, et al. Man against machine: diagnostic performance of a deep learning convolutional neural network for dermoscopic melanoma recognition in comparison to 58 dermatologists.

- Gulshan V, Peng L, Coram M, Stumpe MC, Wu D, Narayanaswamy A, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 2016.

- De Fauw J, Ledsam JR, Romera-Paredes B, Nikolov S, Tomasev N, Blackwell S, et al. Clinically applicable deep learning for diagnosis and referral in retinal disease. 2018.

- Qin Y, Kamnitsas K, Ancha S, Nanavati J, Cottrell G, Criminisi A, et al. Autofocus layer for semantic segmentation. 2018.

- N. Darapaneni et al., “COVID 19 severity of pneumonia analysis using chest X rays,” in 2020 IEEE 15th International Conference on Industrial and Information Systems (ICIIS), 2020, pp. 381–386.

- Xiao C, Choi E, Sun J. Opportunities and challenges in developing deep learning models using electronic health records data: a systematic review, 2018

- Alexander Selvikvåg Lundervold, 2019, An overview of deep learning in medical imaging focusing on MRI

- N. Darapaneni et al., “Inception C-net(IC-net): Altered inception module for detection of covid-19 and pneumonia using chest X-rays,” in 2020 IEEE 15th International Conference on Industrial and Information Systems (ICIIS), 2020, pp. 393–398.

- Ioffe S, Szegedy C. Batch normalization: accelerating deep network training by reducing internal covariate shift. In: International conference on machine learning. 2015. p. 448–56.

- M. Goyal, T. Knackstedt, S. Yan, and S. Hassanpour, “Artificial intelligence-based image classification methods for diagnosis of skin cancer: Challenges and opportunities,” Comput. Biol. Med., vol. 127, no. 104065, p. 104065, 2020.

- N. Darapaneni et al., “Deep convolutional neural network (CNN) design for pathology detection of COVID-19 in chest X-ray images,” in Lecture Notes in Computer Science, Cham: Springer International Publishing, 2021, pp. 211–223.

- S. Haggenmüller et al., “Skin cancer classification via convolutional neural networks: systematic review of studies involving human experts,” Eur. J. Cancer, vol. 156, pp. 202–216, 2021.

- Matt Groh, Caleb Harris, 2021, Evaluating Deep Neural Networks Trained on Clinical Images in Dermatology with the Fitzpatrick 17k Dataset

- N. Darapaneni, B. Krishnamurthy, and A. R. Paduri, “Convolution Neural Networks: A Comparative Study for Image Classification,” in 2020 IEEE 15th International Conference on Industrial and Information Systems (ICIIS), 2020, pp. 327–332.

- Breno Krohling, 2021, A Smartphone based Application for Skin Cancer Classification Using Deep Learning with Clinical Images and Lesion Information

- Machine Learning on Mobile: An On-device Inference App for Skin Cancer Detection.

- Kamnitsas K, Baumgartner C, Ledig C, Newcombe V, Simpson J, Kane A, et al. Unsupervised domain adaptation in brain lesion segmentation with adversarial networks, 2017.

- Srivastava N, Hinton G, Krizhevsky A, Sutskever I, Salakhutdinov R. Dropout: a simple way to prevent neural networks from overfitting. J Mach Learn Res 2014.

- Mohamed A. Kassem; Khalid M. Hosny; Mohamed M. Fouad, 2019, Skin Lesions Classification Into Eight Classes for ISIC 2019 Using Deep Convolutional Neural Network and Transfer Learning

- “Using AI to personalize cancer care,” Stanford HAI. [Online]. Available: https://hai.stanford.edu/news/using-ai-personalize-cancer-care-0. [Accessed: 24-Feb-2022].

- N. Darapaneni et al., “American sign language detection using instance-based segmentation,” in 2021 IEEE International IOT, Electronics and Mechatronics Conference (IEMTRONICS), 2021, pp. 1–6.