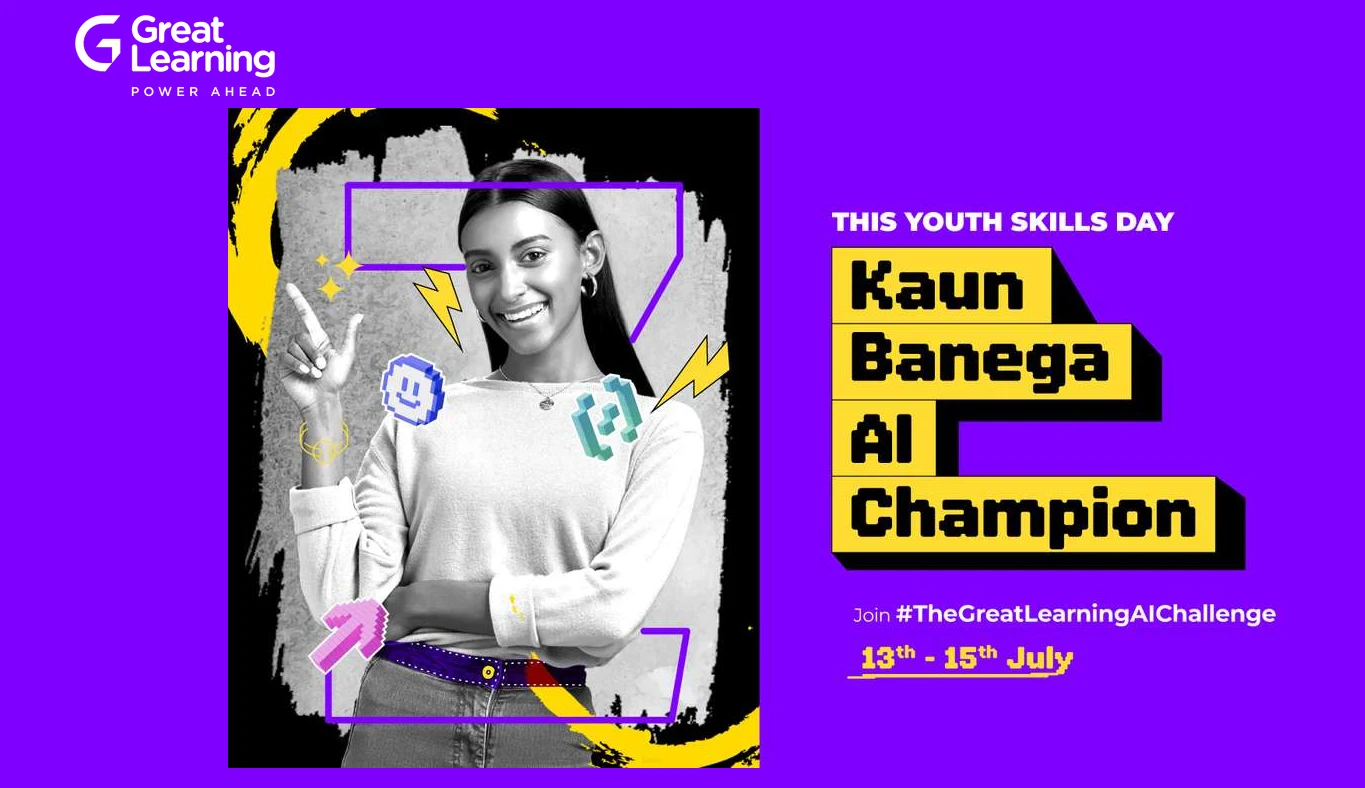

Kaun Banega AI Champion?

Join India’s biggest GenAI challenge! The Great Learning AI Challenge (July 13–15) invites Gen Z to a 3-day learning sprint in which participants race to master cutting-edge GenAI skills. Choose from 30+ courses, complete them, and earn a certificate to showcase on LinkedIn. Register now and sharpen your GenAI expertise!