Linear discriminant analysis is a supervised machine learning technique used to find a linear combination of features that separates two or more classes of objects or events.

Linear discriminant analysis, also known as LDA, performs this separation by computing the directions (“linear discriminants”) that represent the axes maximizing the separation between multiple classes. It is also widely used for dimensionality reduction.

- Why do we need to reduce dimensions in a data set?

- How to deal with a high dimensional dataset?

- Assumptions of LDA

- How LDA works

- How to Prepare the data for LDA

- Python implementation of LDA from scratch

- Linear Discriminant Analysis implementation leveraging scikit-learn library

Like logistic regression, LDA is a linear classification technique, with the following additional capabilities in comparison to logistic regression:

1. LDA can be applied to classification problems with two or more classes.

2. Unlike logistic regression, whose parameter estimates can become unstable when the classes are well separated, LDA remains stable in that setting.

3. LDA works relatively well in comparison to logistic regression when only a few examples are available.

LDA is also a dimensionality reduction technique. As the name implies, dimensionality reduction techniques reduce the number of dimensions (i.e. variables or features) in a dataset while retaining as much information as possible.

Why do we need to reduce dimensions in a data set?

For a moment, let’s assume that the world we live in has only one dimension. Finding something in this one-dimensional world means starting the search at one end and heading towards the other, continuing until you find the object you are looking for.

In the image below, the line represents the dimension and the red circle represents the object.

Now, if we add another dimension, the world becomes two-dimensional. Finding something in it is more complex than it was in one dimension. The image below illustrates the complexity introduced by the new dimension.

In a nutshell, finding something in a lower-dimensional space is relatively easy compared to doing the same in a higher-dimensional one. This can be understood through the phenomenon called “the curse of dimensionality”.

Domains like numerical analysis, statistical sampling, machine learning, data mining and modern databases share a common problem: as dimensionality increases, the volume of the space grows so quickly that the available data becomes sparse.

This sparsity is a problem for any method that relies on statistical significance.

In the machine learning landscape, a dataset with a high-dimensional feature space, where each feature takes a range of values, typically requires an enormous amount of data to uncover the hidden complex patterns within it.

How to deal with a high dimensional dataset?

There are several ways to deal with high-dimensional data. Below are a few commonly used techniques:

Feature extraction

Feature extraction, or feature selection, is widely used in statistics and machine learning. Deciding which features to extract requires a good understanding of the domain and prior knowledge of the subject under consideration. For example, in computer vision, imagine that we have a 100×100 pixel image. The raw vector of pixel intensities then has 10,000 elements. Often the image corners do not contain much useful information. Dimensionality can be reduced significantly, at the cost of a small amount of information loss, by retaining the pixels near the center of the image and dropping the pixels at the corners.
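
As a rough illustration of the arithmetic above, the sketch below flattens a 100×100 placeholder image into 10,000 raw features and then keeps only a central crop. The synthetic image and the 20-pixel margin are assumptions made for this example, not values from the article, and a border crop drops the edges as well as the corners.

```python
import numpy as np

# Placeholder 100x100 grayscale image (synthetic values, for illustration only).
image = np.arange(100 * 100, dtype=float).reshape(100, 100)

# Flattening the full image yields one feature per pixel.
raw_features = image.ravel()
print(raw_features.size)  # 10000

# Hypothetical choice: drop a 20-pixel border and keep the central 60x60 region.
margin = 20
center = image[margin:-margin, margin:-margin]
reduced_features = center.ravel()
print(reduced_features.size)  # 3600
```

With this particular margin the feature count drops from 10,000 to 3,600; how much information is lost depends entirely on the images in question.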

Dimensionality reduction analysis

Statisticians have developed methods to reduce dimensionality automatically. Different methods lead to different reduction results.

1. Principal component analysis: The goal of PCA is to project the original high-dimensional data onto a low-dimensional space without losing much vital information. The directions (principal components) with the largest variance are kept, without reference to class labels, so redundant and correlated features are collapsed.

2. Linear discriminant analysis: The goal of LDA is to discriminate between classes in a low-dimensional space by retaining the components that give the best separation across classes.

Refer to the image below for a visual depiction of the underlying difference between the two techniques:
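
The difference can also be seen in how the two techniques are called. The following is a minimal sketch using scikit-learn on the Wine dataset (the same dataset used later in this article); the choice of two components and the use of StandardScaler are assumptions for the example. Note that PCA is fitted on the features alone, while LDA also needs the class labels.

```python
from sklearn.datasets import load_wine
from sklearn.decomposition import PCA
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.preprocessing import StandardScaler

X, y = load_wine(return_X_y=True)
X_scaled = StandardScaler().fit_transform(X)

# PCA is unsupervised: it only sees the features.
X_pca = PCA(n_components=2).fit_transform(X_scaled)

# LDA is supervised: it uses the class labels to find separating directions.
X_lda = LinearDiscriminantAnalysis(n_components=2).fit_transform(X_scaled, y)

print(X_pca.shape, X_lda.shape)
```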

Assumptions of LDA

LDA assumes:

1. Each feature (variable, dimension or attribute) in the dataset follows a Gaussian distribution. In other words, each feature has a bell-shaped distribution.

2. Each feature has the same variance: its values vary around the mean by the same amount on average.

3. The samples of each feature are assumed to be drawn at random.

4. There is little multicollinearity among the independent features. As the correlation between independent features increases, the power of prediction decreases.

How LDA works

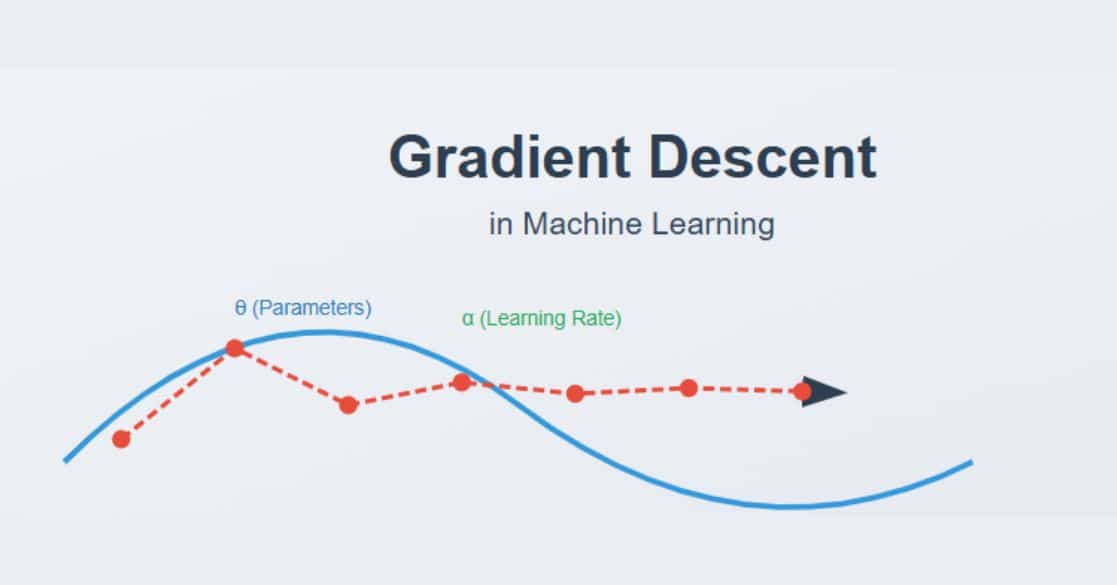

LDA projects features from a higher-dimensional space to a lower-dimensional space. Let’s look at how LDA achieves this:

Step#1 Computes the mean vector of each class of the dependent variable

Step#2 Computes the within-class and between-class scatter matrices

Step#3 Computes the eigenvalues and eigenvectors of inverse(SW) · SB, where SW is the within-class scatter matrix and SB is the between-class scatter matrix

Step#4 Sorts the eigenvalues in descending order and selects the top k

Step#5 Creates a new matrix containing the eigenvectors that map to the k eigenvalues

Step#6 Obtains the new features (i.e. linear discriminants) by taking the dot product of the data and the matrix.
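
The steps above can be written compactly as follows. This is the standard textbook formulation rather than something stated in the article; the symbols are assumptions introduced here: D_c is the set of samples in class c, n_c its size, m_c its mean, m the overall mean, and W the matrix whose columns are the top k eigenvectors.

```latex
\begin{aligned}
m_c &= \frac{1}{n_c} \sum_{x \in D_c} x, \qquad m = \frac{1}{n} \sum_{x} x \\
S_W &= \sum_{c} \sum_{x \in D_c} (x - m_c)(x - m_c)^{T} \\
S_B &= \sum_{c} n_c \, (m_c - m)(m_c - m)^{T} \\
S_W^{-1} S_B \, w_i &= \lambda_i \, w_i, \qquad \lambda_1 \ge \lambda_2 \ge \dots \\
Y &= X W, \qquad W = [\, w_1 \; \cdots \; w_k \,]
\end{aligned}
```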

How to Prepare the data for LDA

Machine learning model performance is greatly dependent upon how well we pre-process data. Let’s see how to prepare our data before we apply LDA:

1. Outlier Treatment: Outliers should be removed from the data. They introduce skewness, which distorts the computed means and variances and in turn affects the LDA computations.

2. Equal Variance: Standardize the input data so that each feature has a mean of 0 and a standard deviation of 1.

3. Gaussian distribution: Perform a univariate analysis of each input feature and, if a feature does not follow a Gaussian distribution, transform it to look more Gaussian (for example, with a log or root transform for exponential distributions).
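
A minimal sketch of the last two preparation steps might look like the following. The skewness threshold of 1.0 and the use of `log1p` are assumptions chosen for illustration, and outlier treatment is left out because the right rule depends on the data.

```python
import numpy as np
from scipy.stats import skew
from sklearn.datasets import load_wine
from sklearn.preprocessing import StandardScaler

X, y = load_wine(return_X_y=True)

# Assumption: treat features with skewness above 1.0 as candidates for a log transform.
skew_threshold = 1.0
skewed = skew(X, axis=0) > skew_threshold

X_transformed = X.copy()
# log1p is safe here because all Wine features are non-negative.
X_transformed[:, skewed] = np.log1p(X_transformed[:, skewed])

# Standardize every feature to mean 0 and standard deviation 1.
X_prepared = StandardScaler().fit_transform(X_transformed)
print(X_prepared.mean(axis=0).round(6))
print(X_prepared.std(axis=0).round(6))
```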

Python implementation of LDA from scratch

We will be using the Wine dataset that ships with scikit-learn for our analysis and model building.

Step#1 Importing required libraries in our Jupyter notebook

Step#2 Loading the dataset and separating the dependent and independent variables into variables named “dependentVariable” and “independentVariables” respectively
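
The original code for Step#1 and Step#2 is not reproduced here, so the following is a sketch of what they could look like. It assumes `load_wine` from scikit-learn, a pandas DataFrame for the features, and a pandas Categorical for the class labels.

```python
import pandas as pd
from sklearn.datasets import load_wine

wine = load_wine()

# Independent variables: 13 numeric features, one column per feature name.
independentVariables = pd.DataFrame(wine.data, columns=wine.feature_names)

# Dependent variable: class labels as a categorical ('class_0', 'class_1', 'class_2').
dependentVariable = pd.Categorical.from_codes(wine.target, wine.target_names)
```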

Step#3 Let’s have a quick look at our independentVariables.

Step#4 Let’s have a quick look at details of independentVariables in terms of number of rows and columns it contains.

We have 178 records captured against 13 attributes.
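
Steps #3 and #4 can be sketched with `head()` and `shape`, assuming the features were loaded into a DataFrame as described in Step#2.

```python
import pandas as pd
from sklearn.datasets import load_wine

wine = load_wine()
independentVariables = pd.DataFrame(wine.data, columns=wine.feature_names)

# Step#3: first few rows of the features.
print(independentVariables.head())

# Step#4: number of rows and columns.
print(independentVariables.shape)  # (178, 13)
```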

Step#5 Let’s have a quick look at details of independentVariables

We have 13 columns in the dataset. We could infer that:

- All the features are numeric, with their values stored as floats

- All the 13 features have 178 records and that is enough to conclude that we don’t have any missing values in any of the independent features.
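
One way to obtain this summary, assuming the DataFrame from Step#2, is `DataFrame.info()`, optionally backed by an explicit missing-value count.

```python
import pandas as pd
from sklearn.datasets import load_wine

wine = load_wine()
independentVariables = pd.DataFrame(wine.data, columns=wine.feature_names)

# Column names, non-null counts and dtypes in one summary.
independentVariables.info()

# Explicit check: total number of missing values across all features.
missing_values = int(independentVariables.isnull().sum().sum())
print(missing_values)
```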

Step#6 Let’s look into the target variable

We can see that our dependentVariable has three classes: ‘class_0’, ‘class_1’ and ‘class_2’. We have a three-class classification problem.
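
A short sketch of this inspection, assuming the class labels are held in a pandas Categorical as in Step#2:

```python
import numpy as np
import pandas as pd
from sklearn.datasets import load_wine

wine = load_wine()
dependentVariable = pd.Categorical.from_codes(wine.target, wine.target_names)

# The distinct classes of the target.
print(dependentVariable.categories)

# Number of samples per class, indexed by class code 0, 1, 2.
class_counts = np.bincount(wine.target)
print(class_counts)
```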

Step#7 Let’s now create a data frame holding the dependent variable and independent variables together
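
A possible version of this step joins the features with the labels; the column name `class` and the variable name `df` are assumptions for this sketch.

```python
import pandas as pd
from sklearn.datasets import load_wine

wine = load_wine()
independentVariables = pd.DataFrame(wine.data, columns=wine.feature_names)
dependentVariable = pd.Categorical.from_codes(wine.target, wine.target_names)

# Hypothetical column name 'class' for the dependent variable.
df = independentVariables.join(pd.Series(dependentVariable, name="class"))
print(df.head())
```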

Step#8 Let’s start the fun part: let’s compute the mean feature vector for every class and store it in a variable named “between_class_feature_means”
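
A sketch of this step, assuming the features are in a DataFrame: group the rows by class and take the mean of each feature. The result has one column per class and one row per feature.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import load_wine

wine = load_wine()
independentVariables = pd.DataFrame(wine.data, columns=wine.feature_names)

# Group rows by the integer class codes and average every feature per class.
between_class_feature_means = independentVariables.groupby(wine.target).mean().T
between_class_feature_means.columns = wine.target_names
print(between_class_feature_means)
```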

Step#9 Now, let’s plug the class means from “between_class_feature_means” into the computation of the within-class scatter matrix.
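
The within-class scatter matrix sums, over all classes, the outer products of each sample's deviation from its class mean. The sketch below uses plain NumPy arrays and keeps the class means in a dictionary rather than a DataFrame, which is a simplification made for this example.

```python
import numpy as np
from sklearn.datasets import load_wine

wine = load_wine()
X, y = wine.data, wine.target
n_features = X.shape[1]

# Mean feature vector per class (dictionary keyed by class code).
between_class_feature_means = {c: X[y == c].mean(axis=0) for c in np.unique(y)}

within_class_scatter_matrix = np.zeros((n_features, n_features))
for c, mean_c in between_class_feature_means.items():
    centered = X[y == c] - mean_c          # deviations from the class mean
    within_class_scatter_matrix += centered.T @ centered

print(within_class_scatter_matrix.shape)  # (13, 13)
```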

Step#10 Now, let’s try to get the linear discriminants value by computing
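
The expression for this step is missing from the text. Assuming it refers to Step#3 of "How LDA works", one way to proceed is to build the between-class scatter matrix and then eigen-decompose inverse(SW) · SB. The sketch below does that with NumPy; taking the real part of the result is a choice made here because `np.linalg.eig` may return complex values with negligible imaginary parts for a non-symmetric matrix.

```python
import numpy as np
from sklearn.datasets import load_wine

wine = load_wine()
X, y = wine.data, wine.target
n_features = X.shape[1]
overall_mean = X.mean(axis=0)

S_W = np.zeros((n_features, n_features))  # within-class scatter
S_B = np.zeros((n_features, n_features))  # between-class scatter
for c in np.unique(y):
    X_c = X[y == c]
    mean_c = X_c.mean(axis=0)
    S_W += (X_c - mean_c).T @ (X_c - mean_c)
    diff = (mean_c - overall_mean).reshape(-1, 1)
    S_B += len(X_c) * (diff @ diff.T)

# Eigen-decomposition of inverse(S_W) . S_B.
A = np.linalg.inv(S_W) @ S_B
eigen_values, eigen_vectors = np.linalg.eig(A)
eigen_values, eigen_vectors = eigen_values.real, eigen_vectors.real
print(eigen_values)
```

With three classes, S_B has rank at most two, so only two eigenvalues are meaningfully different from zero.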

Step#11 The eigenvectors with the highest eigenvalues carry the most information about the distribution of the data. Now that we have the eigenvalues and eigenvectors, let’s sort the eigenvalues from highest to lowest and select the first k eigenvectors.

In order to ensure that the eigenvalue maps to the same eigenvector after sorting, we place them in a temporary array.
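
A sketch of the sorting step is shown below. The helper `lda_eigen` is a hypothetical name introduced here to recompute the eigen-decomposition so that the block runs on its own; the temporary array is a list of (eigenvalue, eigenvector) pairs.

```python
import numpy as np
from sklearn.datasets import load_wine

def lda_eigen(X, y):
    """Eigen-decompose inverse(S_W) . S_B and return the real parts."""
    overall_mean = X.mean(axis=0)
    d = X.shape[1]
    S_W, S_B = np.zeros((d, d)), np.zeros((d, d))
    for c in np.unique(y):
        X_c = X[y == c]
        mean_c = X_c.mean(axis=0)
        S_W += (X_c - mean_c).T @ (X_c - mean_c)
        diff = (mean_c - overall_mean).reshape(-1, 1)
        S_B += len(X_c) * (diff @ diff.T)
    values, vectors = np.linalg.eig(np.linalg.inv(S_W) @ S_B)
    return values.real, vectors.real

wine = load_wine()
X, y = wine.data, wine.target
eigen_values, eigen_vectors = lda_eigen(X, y)

# Keep each eigenvalue next to its eigenvector (a column), then sort by eigenvalue.
pairs = [(np.abs(eigen_values[i]), eigen_vectors[:, i]) for i in range(len(eigen_values))]
pairs.sort(key=lambda pair: pair[0], reverse=True)

for value, _ in pairs:
    print(value)
```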

Step#12 By just looking at the values obtained above it is difficult to determine variance explained by each component. Thus, let’s express it as a percentage.
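
One way to express the eigenvalues as percentages is to divide each by their sum. As before, `lda_eigen` is a hypothetical helper defined only so the sketch is self-contained.

```python
import numpy as np
from sklearn.datasets import load_wine

def lda_eigen(X, y):
    """Eigen-decompose inverse(S_W) . S_B and return the real parts."""
    overall_mean = X.mean(axis=0)
    d = X.shape[1]
    S_W, S_B = np.zeros((d, d)), np.zeros((d, d))
    for c in np.unique(y):
        X_c = X[y == c]
        mean_c = X_c.mean(axis=0)
        S_W += (X_c - mean_c).T @ (X_c - mean_c)
        diff = (mean_c - overall_mean).reshape(-1, 1)
        S_B += len(X_c) * (diff @ diff.T)
    values, vectors = np.linalg.eig(np.linalg.inv(S_W) @ S_B)
    return values.real, vectors.real

wine = load_wine()
eigen_values, _ = lda_eigen(wine.data, wine.target)

# Share of the total accounted for by each eigenvalue, largest first.
sorted_values = np.sort(np.abs(eigen_values))[::-1]
explained = sorted_values / sorted_values.sum()
for i, ratio in enumerate(explained[:2], start=1):
    print(f"LD{i}: {ratio:.2%}")
```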

Step#13 Now, let’s formulate our linear function for the new feature space.

Y = X · W

where X is an n×d matrix with n samples and d dimensions, W is the d×k matrix of selected eigenvectors, and Y is an n×k matrix with n samples and k (k < d) dimensions. In other words, Y is composed of the LDA components (the new feature space).
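
In code, this projection amounts to stacking the top k eigenvectors as columns and taking one matrix product. The sketch below assumes k = 2 and again uses the hypothetical helper `lda_eigen` to stay self-contained.

```python
import numpy as np
from sklearn.datasets import load_wine

def lda_eigen(X, y):
    """Eigen-decompose inverse(S_W) . S_B and return the real parts."""
    overall_mean = X.mean(axis=0)
    d = X.shape[1]
    S_W, S_B = np.zeros((d, d)), np.zeros((d, d))
    for c in np.unique(y):
        X_c = X[y == c]
        mean_c = X_c.mean(axis=0)
        S_W += (X_c - mean_c).T @ (X_c - mean_c)
        diff = (mean_c - overall_mean).reshape(-1, 1)
        S_B += len(X_c) * (diff @ diff.T)
    values, vectors = np.linalg.eig(np.linalg.inv(S_W) @ S_B)
    return values.real, vectors.real

wine = load_wine()
X, y = wine.data, wine.target
eigen_values, eigen_vectors = lda_eigen(X, y)

# W: d x k matrix holding the eigenvectors of the k = 2 largest eigenvalues.
order = np.argsort(np.abs(eigen_values))[::-1]
W = eigen_vectors[:, order[:2]]

# New feature space: n x k matrix of LDA components.
X_lda = X @ W
print(W.shape, X_lda.shape)
```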

Step#14 Since matplotlib cannot handle categorical variables directly, let’s encode them. Every class type will now be represented as a number.
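
A sketch of the encoding, assuming scikit-learn's `LabelEncoder` is used; the variable names are illustrative.

```python
import numpy as np
from sklearn.datasets import load_wine
from sklearn.preprocessing import LabelEncoder

wine = load_wine()

# Class labels as strings: 'class_0', 'class_1', 'class_2'.
class_labels = wine.target_names[wine.target]

# Map each class name to an integer code.
label_encoder = LabelEncoder()
encoded_classes = label_encoder.fit_transform(class_labels)
print(label_encoder.classes_)
print(np.unique(encoded_classes))
```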

Step#15 Finally, let’s now plot the data as a function of the two LDA components
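
The plot can be sketched as a scatter of the two components coloured by the encoded class. The axis labels, colour map and the hypothetical helper `lda_eigen` are assumptions for this example; the integer class codes in `wine.target` play the role of the encoded labels from Step#14.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend; omit this line inside a notebook
import matplotlib.pyplot as plt
import numpy as np
from sklearn.datasets import load_wine

def lda_eigen(X, y):
    """Eigen-decompose inverse(S_W) . S_B and return the real parts."""
    overall_mean = X.mean(axis=0)
    d = X.shape[1]
    S_W, S_B = np.zeros((d, d)), np.zeros((d, d))
    for c in np.unique(y):
        X_c = X[y == c]
        mean_c = X_c.mean(axis=0)
        S_W += (X_c - mean_c).T @ (X_c - mean_c)
        diff = (mean_c - overall_mean).reshape(-1, 1)
        S_B += len(X_c) * (diff @ diff.T)
    values, vectors = np.linalg.eig(np.linalg.inv(S_W) @ S_B)
    return values.real, vectors.real

wine = load_wine()
X, y = wine.data, wine.target
eigen_values, eigen_vectors = lda_eigen(X, y)
order = np.argsort(np.abs(eigen_values))[::-1]
X_lda = X @ eigen_vectors[:, order[:2]]

# One point per sample, coloured by its encoded class (0, 1 or 2).
fig, ax = plt.subplots()
ax.scatter(X_lda[:, 0], X_lda[:, 1], c=y, cmap="rainbow", alpha=0.7, edgecolors="b")
ax.set_xlabel("LD1")
ax.set_ylabel("LD2")
```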

In the above plot we can clearly see the separation between all three classes. Kudos, LDA has done its job: the classes are linearly separated.

From Step#8 to Step#15, we saw how to implement linear discriminant analysis step by step. Hopefully, this helps readers understand the nitty-gritty of LDA.

We can arrive at Step#15 directly by leveraging the scikit-learn library. Let’s look into this.

Linear Discriminant Analysis implementation leveraging scikit-learn library

We already have our “dependentVariable” and “independentVariables” defined, so let’s use them to get the linear discriminants.
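
A minimal sketch with scikit-learn's `LinearDiscriminantAnalysis` follows. The variables are re-created so the block runs on its own, and the labels are converted with `np.asarray` before fitting as a precaution.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import load_wine
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

wine = load_wine()
independentVariables = pd.DataFrame(wine.data, columns=wine.feature_names)
dependentVariable = pd.Categorical.from_codes(wine.target, wine.target_names)

# With three classes, LDA yields at most two linear discriminants.
lda = LinearDiscriminantAnalysis()
X_lda = lda.fit_transform(independentVariables, np.asarray(dependentVariable))
print(X_lda.shape)  # (178, 2)
```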

Let’s inspect the “explained_variance_ratio_” attribute of our fitted sklearn Linear Discriminant Analysis model
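
Note that `explained_variance_ratio_` is an attribute of the fitted estimator, not a method, so it is read without parentheses. A self-contained sketch:

```python
from sklearn.datasets import load_wine
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

X, y = load_wine(return_X_y=True)

lda = LinearDiscriminantAnalysis()
lda.fit(X, y)

# Share of the between-class variance captured by each linear discriminant.
explained_variance = lda.explained_variance_ratio_
print(explained_variance)
```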

From the above output we can see that LDA#1 covers 68.74% of the total variance and LDA#2 covers the remaining 31.2%.

Now, let’s visualize the output of the sklearn implementation:
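
The same kind of scatter plot can be sketched for the scikit-learn output. The colour map and axis labels are assumptions chosen to mirror the earlier plot.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend; omit this line inside a notebook
import matplotlib.pyplot as plt
from sklearn.datasets import load_wine
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

X, y = load_wine(return_X_y=True)
X_lda = LinearDiscriminantAnalysis().fit_transform(X, y)

# Scatter of the two scikit-learn discriminants, coloured by class code.
fig, ax = plt.subplots()
ax.scatter(X_lda[:, 0], X_lda[:, 1], c=y, cmap="rainbow", alpha=0.7, edgecolors="b")
ax.set_xlabel("LD1")
ax.set_ylabel("LD2")
```

The axes may be scaled or flipped relative to the from-scratch version, because eigenvectors are only defined up to sign and scale.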

The results of both implementations are very much alike.

Finally, let’s build a classification model. We use Random Forest here, but any other classifier could be used.

Let’s check our model performance by printing the confusion matrix
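
A sketch of the model-building and evaluation steps is given below. The `random_state` values and the default test size are assumptions, so the exact confusion matrix may differ from the one discussed in the text. To mirror the flow of the article, LDA is fitted on the full dataset before splitting; in practice it is safer to fit it on the training split only.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import load_wine
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import confusion_matrix
from sklearn.model_selection import train_test_split

wine = load_wine()
independentVariables = pd.DataFrame(wine.data, columns=wine.feature_names)
dependentVariable = np.asarray(pd.Categorical.from_codes(wine.target, wine.target_names))

# Linear discriminants fitted on all samples (as in the article's flow).
X_lda = LinearDiscriminantAnalysis().fit_transform(independentVariables, dependentVariable)

# Hypothetical random_state values, chosen only for reproducibility.
X_train, X_test, y_train, y_test = train_test_split(X_lda, dependentVariable, random_state=1)
classifier = RandomForestClassifier(random_state=1)
classifier.fit(X_train, y_train)
y_pred = classifier.predict(X_test)

# Rows are true classes, columns are predicted classes.
cm = confusion_matrix(y_test, y_pred, labels=list(wine.target_names))
print(cm)
```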

We can see from the above confusion matrix that every test sample is classified correctly.

To show the effect of LDA on classification, we will run the train and test split again, but this time the independent variables will be “independentVariables” itself instead of the LDA output used above.

We have defined new variables (X_train1, X_test1, y_train1, y_test1) holding the train and test data for our dependent and independent variables. Please note that we have not used “X_lda” but “independentVariables”.

Let’s check the confusion matrix for these new predictions (without linear discriminants)
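
The comparison run can be sketched in the same way, this time training on the 13 original features. As before, the `random_state` values are assumptions, so the resulting matrix may not match the one described in the text exactly.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import load_wine
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import confusion_matrix
from sklearn.model_selection import train_test_split

wine = load_wine()
independentVariables = pd.DataFrame(wine.data, columns=wine.feature_names)
dependentVariable = np.asarray(pd.Categorical.from_codes(wine.target, wine.target_names))

# Split the raw features directly, without any LDA step.
X_train1, X_test1, y_train1, y_test1 = train_test_split(
    independentVariables, dependentVariable, random_state=1
)
classifier1 = RandomForestClassifier(random_state=1)
classifier1.fit(X_train1, y_train1)
y_pred1 = classifier1.predict(X_test1)

cm1 = confusion_matrix(y_test1, y_pred1, labels=list(wine.target_names))
print(cm1)
```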

The above confusion matrix shows one misclassification for Class_2. This suggests that LDA added value to the exercise.

With this, we have come to the end of our long journey through linear discriminant analysis. We built LDA on the Wine dataset step by step. We started with 13 independent variables and reduced them to 2 linear discriminants. Using those two linear discriminants, we trained a Random Forest classifier to classify our test data.
