- Introduction to Artificial Neural Network

- What is activation function?

- What is ReLU (Rectified Linear Unit) activation function?

- Why is ReLU the best activation function?

- Leaky ReLU activation function

Introduction to Artificial Neural Network

Artificial neural networks are inspired by the biological neurons in the human body, which activate under certain circumstances and cause the body to perform a related action in response. Artificial neural nets consist of layers of interconnected artificial neurons powered by activation functions that help switch them ON or OFF. Like traditional machine learning algorithms, neural nets learn certain values during the training phase.

Briefly, each neuron multiplies its inputs by weights (which are typically initialized randomly), sums the results, and adds a bias value. This sum is then passed to an activation function, which decides the final value the neuron gives out. Various activation functions are available, and the choice depends on the nature of the values involved. Once the final layer produces its output, a loss function compares that output with the expected target, and backpropagation adjusts the weights and biases to minimize the loss. Finding optimal values for the weights is what the whole operation revolves around.
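The computation of a single neuron can be sketched in a few lines of plain Python. This is only an illustration: the function name `neuron_output` and the input, weight, and bias values are made up for the example, and ReLU (covered later in this article) is used as the activation.

```python
def relu(z):
    # ReLU activation: 0 for negative values, the value itself otherwise
    return max(0.0, z)

def neuron_output(inputs, weights, bias, activation):
    # weighted sum of the inputs plus the bias, passed through the activation
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

# hypothetical values chosen only for illustration
inputs = [1.0, 2.0, 3.0]
weights = [0.5, -0.25, 0.25]
bias = 0.5

output = neuron_output(inputs, weights, bias, relu)
print(output)
```

During training, the weights and the bias are the values that would be adjusted by backpropagation; here they are simply fixed.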

What is activation function?

As mentioned above, an activation function determines the final value given out by a neuron. But what is an activation function, and why do we need it?

An activation function is simply a function that transforms its inputs into outputs within a certain range. There are various types of activation functions that do this in different ways. For example, the sigmoid activation function takes an input and maps it to a value between 0 and 1.
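As a small sketch of the sigmoid example, the function below uses the standard definition 1 / (1 + e^(-x)). The sample inputs are arbitrary and chosen only to show that every output stays between 0 and 1.

```python
import math

def sigmoid(x):
    # maps any real number into the open interval (0, 1)
    return 1.0 / (1.0 + math.exp(-x))

# arbitrary sample inputs, from strongly negative to strongly positive
for x in [-10, -1, 0, 1, 10]:
    print(x, sigmoid(x))
```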

One reason this function is added to an artificial neural network is to help the network learn complex patterns in the data. Activation functions introduce the nonlinearity found in real-world data into the network. In a simple neural network, x denotes the inputs and w the weights; the weighted sum of the inputs is passed through the activation function f, and the value of f becomes the neuron's output. This is then either the final output or the input of another layer.

If the activation function is not applied, the output signal is just a simple linear function of the input. A neural network without activation functions behaves like a linear regression model with limited learning power. But we also want our neural network to learn non-linear relationships, as we give it complex real-world information such as images, video, text, and sound.
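To see why layers without an activation function add no expressive power, consider this minimal sketch with two one-dimensional "layers". The weights and biases are arbitrary illustrative values; the point is that the two stacked linear steps are always equal to a single linear step.

```python
def linear(x, w, b):
    # a layer with no activation function: just w * x + b
    return w * x + b

# hypothetical parameters for two stacked layers
w1, b1 = 2.0, 1.0
w2, b2 = -3.0, 0.5

def two_layers(x):
    return linear(linear(x, w1, b1), w2, b2)

def one_layer(x):
    # w2 * (w1 * x + b1) + b2 == (w2 * w1) * x + (w2 * b1 + b2)
    return linear(x, w2 * w1, w2 * b1 + b2)

for x in [-2.0, 0.0, 1.5]:
    print(x, two_layers(x), one_layer(x))
```

Both columns of the output match for every input, so the stacked network is still a single linear function.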

What is ReLU Activation Function?

ReLU stands for Rectified Linear Unit and is considered one of the milestones of the deep learning revolution. It is simple, yet it often works better than its predecessors such as sigmoid or tanh.

ReLU activation function formula

Now how does ReLU transform its input? It uses this simple formula:

f(x)=max(0,x)

Both the ReLU function and its derivative are monotonic. The function returns 0 for any negative input, and for any positive value x it returns that value back. Its output therefore ranges from 0 to infinity.
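The monotonicity claim can be checked with a short sketch. The helper `is_non_decreasing` is a hypothetical name introduced here, and treating the derivative at exactly 0 as 0 is an assumed convention, since the derivative is not defined at that point.

```python
def relu(x):
    return max(0.0, x)

def relu_derivative(x):
    # slope of max(0, x); the value at exactly 0 is an assumed convention
    return 1.0 if x > 0 else 0.0

def is_non_decreasing(values):
    # True if each value is at least as large as the one before it
    return all(a <= b for a, b in zip(values, values[1:]))

# inputs from -5.0 to 5.0 in steps of 0.5, in ascending order
xs = [x / 2 for x in range(-10, 11)]

print(is_non_decreasing([relu(x) for x in xs]))
print(is_non_decreasing([relu_derivative(x) for x in xs]))
```

Both checks print `True`: neither the function nor its derivative ever decreases as the input grows.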

Now let us give some inputs to the ReLU activation function and see how it transforms them and then we will plot them also.

First, let us define a ReLU function

    def ReLU(x):
        # return x for positive inputs and 0 otherwise
        if x > 0:
            return x
        else:
            return 0

Next, we store the integers from -19 to 18 in a list called input_series, apply ReLU to each of them, and plot the result.

    from matplotlib import pyplot

    pyplot.style.use('ggplot')
    pyplot.figure(figsize=(10, 5))

    # define a series of inputs
    input_series = list(range(-19, 19))

    # calculate outputs for our inputs
    output_series = [ReLU(x) for x in input_series]

    # line plot of raw inputs to rectified outputs
    pyplot.plot(input_series, output_series)
    pyplot.show()

ReLU is now the default choice of activation function in many neural networks and is the most commonly used one, especially in CNNs.

Why is ReLU the best activation function?

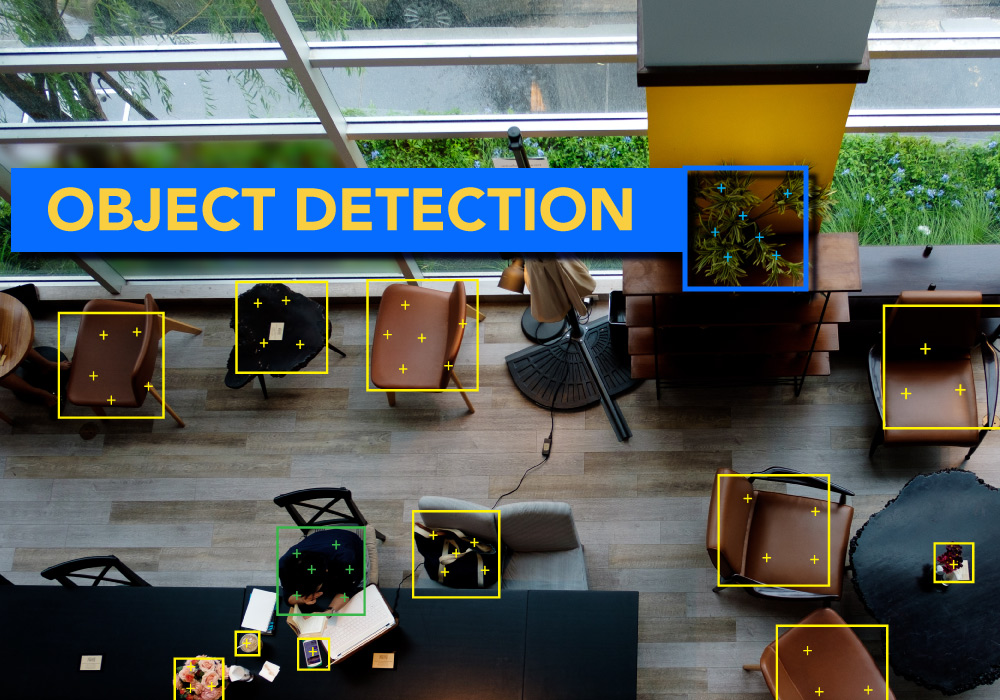

As we have seen above, the ReLU function is simple and involves no heavy computation, as there is no complicated math. The model can therefore take less time to train or run. Another important advantage of the ReLU activation function is sparsity.

Usually, a matrix in which most entries are 0 is called a sparse matrix, and we desire a similar property in our neural networks, where many of the activations are zero. Sparsity results in concise models that often have better predictive power and less overfitting and noise. In a sparse network, it’s more likely that neurons are actually processing meaningful aspects of the problem. For example, in a model detecting human faces in images, there may be a neuron that can identify ears, which obviously shouldn’t be activated if the image is not of a face but of a ship or a mountain.

Since ReLU outputs zero for all negative inputs, any given unit is likely to not activate at all, which makes the network sparse. Now let us see how the ReLU activation function is better than previously popular activation functions such as sigmoid and tanh.
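The sparsity effect can be illustrated with a rough sketch. It assumes, purely for illustration, that the values arriving at a layer of 1000 units are drawn from a standard normal distribution; real networks will have different distributions.

```python
import random

def relu(x):
    return max(0.0, x)

random.seed(0)  # fixed seed so the run is repeatable

# assumed pre-activation values: 1000 draws from a standard normal distribution
pre_activations = [random.gauss(0.0, 1.0) for _ in range(1000)]
activations = [relu(z) for z in pre_activations]

# fraction of units whose output is exactly zero
zero_fraction = sum(1 for a in activations if a == 0.0) / len(activations)
print(f"fraction of inactive units: {zero_fraction:.2f}")
```

Under this assumption roughly half of the units output zero, because about half of the sampled values are negative.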

The activation functions that were mostly used before ReLU, such as sigmoid and tanh, saturate. This means that large input values snap to 1.0, while very negative values snap to -1 for tanh and to 0 for sigmoid. Further, the functions are only really sensitive to changes around the mid-point of their input range, which is 0 for both functions (where sigmoid outputs 0.5 and tanh outputs 0.0). This caused them to suffer from the vanishing gradient problem. Let us briefly see what that is.

Neural networks are trained using gradient descent. It relies on the backward propagation step, which is basically the chain rule applied layer by layer to compute how each weight should change in order to reduce the loss. Derivatives therefore play an important role in updating the weights. Now, when we use activation functions such as sigmoid or tanh, whose derivatives are meaningfully large only for inputs roughly between -2 and 2 and are nearly flat elsewhere (the sigmoid derivative never exceeds 0.25), the gradient keeps decreasing as the number of layers increases.

This reduces the value of the gradient for the initial layers and those layers are not able to learn properly. In other words, their gradients tend to vanish because of the depth of the network and the activation shifting the value to zero. This is called the vanishing gradient problem.

ReLU, on the other hand, does not face this problem, as its slope doesn’t plateau, or “saturate,” when the input gets large. For this reason, models using the ReLU activation function tend to converge faster.
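The contrast can be made concrete with a simplified sketch. It assumes every layer has a weight of 1 and sees the same pre-activation value, so the gradient reaching the first layer is just the product of the activation derivatives; `gradient_through_layers` is a hypothetical helper, and real networks are more complicated than this.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_derivative(x):
    # derivative of the sigmoid; its maximum value is 0.25, reached at x = 0
    s = sigmoid(x)
    return s * (1.0 - s)

def relu_derivative(x):
    return 1.0 if x > 0 else 0.0

def gradient_through_layers(derivative, pre_activation, num_layers):
    # product of per-layer activation derivatives (all weights assumed to be 1)
    grad = 1.0
    for _ in range(num_layers):
        grad *= derivative(pre_activation)
    return grad

# best case for sigmoid (x = 0) versus an active ReLU unit (x = 1), 10 layers
sigmoid_grad = gradient_through_layers(sigmoid_derivative, 0.0, 10)
relu_grad = gradient_through_layers(relu_derivative, 1.0, 10)
print(sigmoid_grad, relu_grad)
```

Even in the best case for sigmoid, ten layers shrink the gradient by a factor of 0.25 per layer, to under one millionth, while the gradient through active ReLU units stays at 1.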

But ReLU has problems of its own. Because its output is unbounded for positive inputs, networks using it can suffer from exploding gradients. The exploding gradient problem is the opposite of the vanishing gradient problem: large error gradients accumulate and result in very large updates to the neural network's weights during training. As a result, the model becomes unstable and is unable to learn from the training data.

Also, there is a downside to being zero for all negative values, and this problem is called “dying ReLU.” A ReLU neuron is “dead” if it’s stuck on the negative side and always outputs 0. Because the slope of ReLU in the negative range is also 0, once a neuron goes negative, it’s unlikely to recover. Such neurons play no role in discriminating the input and are essentially useless. Over time you may end up with a large part of your network doing nothing. The dying problem is likely to occur when the learning rate is too high or there is a large negative bias.

Lower learning rates often alleviate this problem. Alternatively, we can use Leaky ReLU which we will discuss next.
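A dead neuron can be illustrated with a minimal sketch. The weight, the large negative bias, and the inputs below are hypothetical values picked so that the weighted sum is negative for every input.

```python
def relu(z):
    return max(0.0, z)

def relu_derivative(z):
    return 1.0 if z > 0 else 0.0

# hypothetical neuron with a large negative bias
weight, bias = 0.5, -20.0
inputs = [-3.0, -1.0, 0.0, 2.0, 5.0]

outputs = []
weight_gradients = []
for x in inputs:
    z = weight * x + bias
    outputs.append(relu(z))
    # chain rule: d(output)/d(weight) = relu'(z) * x
    weight_gradients.append(relu_derivative(z) * x)

print(outputs)
print(weight_gradients)
```

Every output and every gradient is zero, so gradient descent has no signal with which to move the weight, and the neuron stays dead for these inputs.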

Leaky ReLU activation function

Leaky ReLU is an improved version of the ReLU activation function. With ReLU, the gradient is 0 for all inputs less than zero, which deactivates the neurons in that region and may cause the dying ReLU problem.

Leaky ReLU is defined to address this problem. Instead of defining the function as 0 for negative values of the input x, we define it as a very small linear component of x. Here is the formula for this activation function:

f(x)=max(0.01*x, x)

This function returns x if it receives any positive input, but for any negative value of x, it returns a really small value which is 0.01 times x. Thus it gives an output for negative values as well. By making this small modification, the gradient of the left side of the graph comes out to be a non zero value. Hence we would no longer encounter dead neurons in that region.
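The non-zero gradient on the negative side can be sketched as follows. The parameter name `negative_slope` is an illustrative choice for this example, with the default 0.01 taken from the formula above; the derivative at exactly 0 is assigned the negative-side slope as an assumed convention.

```python
def leaky_relu(x, negative_slope=0.01):
    # x for positive inputs, a small fraction of x otherwise
    return x if x > 0 else negative_slope * x

def leaky_relu_derivative(x, negative_slope=0.01):
    # slope is 1 on the positive side and negative_slope elsewhere
    return 1.0 if x > 0 else negative_slope

for x in [-10.0, -1.0, 0.0, 1.0, 10.0]:
    print(x, leaky_relu(x), leaky_relu_derivative(x))
```

Unlike plain ReLU, the derivative for negative inputs is 0.01 rather than 0, so a small gradient can still flow back through the neuron.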

Now like we did for ReLU activation function, we will give values to Leaky ReLU activation functions and plot them.

    from matplotlib import pyplot

    pyplot.style.use('ggplot')
    pyplot.figure(figsize=(10, 5))

    # leaky rectified linear function
    def Leaky_ReLU(x):
        if x > 0:
            return x
        else:
            return 0.01 * x

    # define a series of inputs
    input_series = list(range(-19, 19))

    # calculate outputs for our inputs
    output_series = [Leaky_ReLU(x) for x in input_series]

    # line plot of raw inputs to leaky rectified outputs
    pyplot.plot(input_series, output_series)
    pyplot.show()

If you look carefully at the plot, you will find that the outputs for negative inputs are not zero and that there is a slight slope to the line on the left side of the plot.

This brings us to the end of this article, where we learned about the ReLU and Leaky ReLU activation functions.