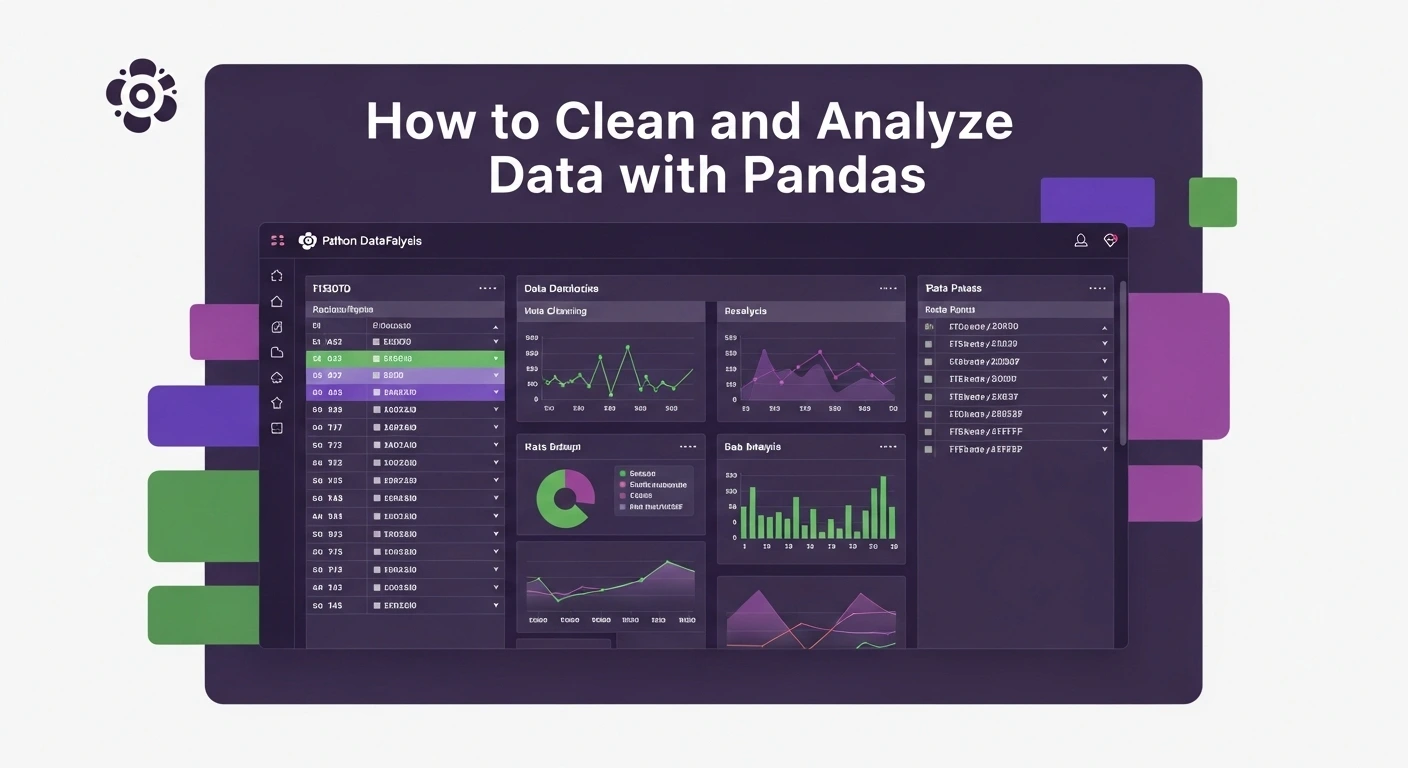

- Why Data Cleaning Matters in Analytics

- Step 1: Importing and Exploring Your Data

- Step 2: Handling Missing Data

- Step 3: Dealing with Duplicates

- Step 4: Fixing Data Types

- Step 5: Standardizing Categorical Data

- Step 6: Outlier Detection and Removal

- Step 7: Renaming Columns for Clarity

- Step 8: Feature Engineering for Analytics

- Step 9: Performing Data Analytics Using Pandas

- Visualization to Support Your Analysis

- Real-World Example: Retail Dataset (with Code and Output)

- Best Practices for Cleaning Data with Pandas

- Conclusion

- Frequently Asked Questions(FAQ’s)

Cleaning and analyzing data is always the step that is going to take the most time whether you are building dashboards, training machine learning models or preparing reports. Pandas is the tool of choice in Python. You can use a few lines of code to clean up messy, unstructured data to give you clean and insightful datasets.

In this tutorial, you will learn how to do pandas data cleaning and perform data analytics using pandas, starting from importing raw data to turning it into clean, useful insights.

Why Data Cleaning Matters in Analytics

Before diving into the code, let’s discuss why cleaning data is crucial.

- Garbage in, garbage out: Dirty data leads to misleading insights.

- Real-world data is messy: missing values, duplicates, and inconsistent formatting are all too common.

- Clean data = Faster analytics: The cleaner your dataset, the quicker you can move on to visualization or modeling.

Consider data cleaning as your analytics pipeline core.

Learn the Pandas Library in Python and how it enables data manipulation and analysis on real-life projects.

Step 1: Importing and Exploring Your Data

import pandas as pd

# Load your dataset

df = pd.read_csv('sales_data.csv')

# Quick peek at your data

print(df.head())

print(df.info())

print(df.describe())

Before starting any project, know the form and the nature of the data you are handling. One of the most useful methods of finding missing values and data types is the .info() method.

Master Data Science with Python Course

Learn Data Science with Python in this comprehensive course! From data wrangling to machine learning, gain the expertise to turn raw data into actionable insights with hands-on practice.

Step 2: Handling Missing Data

One of the most common issues in any dataset is missing values.

# Find missing values

print(df.isnull().sum())

# Drop rows with missing values

df_clean = df.dropna()

# Or fill them with a value

df['Revenue'].fillna(df['Revenue'].mean(), inplace=True)

This is one of the most important steps in python pandas data cleaning. You can drop, fill or interpolate missing values based on your data's type.

Step 3: Dealing with Duplicates

Duplicate rows can skew your analysis, especially when counting or aggregating.

# Check for duplicates

print(df.duplicated().sum())

# Remove duplicates

df = df.drop_duplicates()

This step is simple but crucial in any data cleaning in python pandas workflow.

Step 4: Fixing Data Types

Data often gets imported in incorrect formats. For example, dates may show up as strings.

# Convert a column to datetime

df['Order Date'] = pd.to_datetime(df['Order Date'])

# Convert price from string to float

df['Price'] = df['Price'].str.replace('$', '').astype(float)

You may need to convert the data types in such scenarios. You can check this Python data types guide for more.

Step 5: Standardizing Categorical Data

Inconsistent formatting can cause serious problems during groupings or aggregations.

# Standardize case

df['Category'] = df['Category'].str.lower().str.strip()

# Replace inconsistent labels

df['Category'] = df['Category'].replace({'electronics': 'electronic'})

This type of pandas cleaning data ensures your categorical variables are uniform and reliable.

Step 6: Outlier Detection and Removal

Outliers can distort averages and trends in your analysis. You need to identify and treat such anomalies during data cleaning.

# Using IQR method

Q1 = df['Revenue'].quantile(0.25)

Q3 = df['Revenue'].quantile(0.75)

IQR = Q3 - Q1

# Filter out outliers

df = df[~((df['Revenue'] < (Q1 - 1.5 * IQR)) | (df['Revenue'] > (Q3 + 1.5 * IQR)))]

This ensures the integrity of your statistical summaries and visualizations.

Step 7: Renaming Columns for Clarity

Readable column names make your code and analysis more understandable.

df.rename(columns={

'Order Date': 'order_date',

'Customer ID': 'customer_id'

}, inplace=True)

This small step in cleaning data with pandas pays off big during collaboration or documentation.

Step 8: Feature Engineering for Analytics

With clean data in hand, you can now derive new insights.

# Create new column

df['profit_margin'] = (df['Revenue'] - df['Cost']) / df['Revenue']

# Extract date parts

df['order_month'] = df['order_date'].dt.month

Feature engineering bridges the gap between raw data and powerful analytics.

Step 9: Performing Data Analytics Using Pandas

Once your data is clean, it’s time to analyze:

# Grouping and aggregation

monthly_sales = df.groupby('order_month')['Revenue'].sum()

# Pivot tables

pivot = df.pivot_table(values='Revenue', index='Region', columns='Product Category', aggfunc='sum')

These are your first steps into data analytics using pandas, where patterns and insights begin to emerge.

Visualization to Support Your Analysis

Pandas integrates well with Matplotlib and Seaborn for data visualization.

import matplotlib.pyplot as plt

monthly_sales.plot(kind='bar', title='Monthly Revenue')

plt.xlabel('Month')

plt.ylabel('Revenue')

plt.show()

Visualizing clean data ensures your insights are easy to communicate.

Real-World Example: Retail Dataset (with Code and Output)

We’re analyzing sales data for a retail chain using Pandas. The file retail_sales.csv includes columns like Date, Region, Product Type, Revenue, and Cost.

Step 0: Sample retail_sales.csv File (Input Data)

| Date | Region | Product_Type | Revenue | Cost |

| 2024-01-05 | North | Electronics | 15000 | 10000 |

| 2024-03-10 | South | Furniture | 18000 | 12000 |

| 2024-07-18 | East | Clothing | 8000 | |

| 2023-11-23 | West | Clothing | 22000 | 15000 |

| 2024-05-12 | North | Furniture | 17500 | 11000 |

| East | Electronics | 20000 | 14000 |

Step 1: Load the Data

import pandas as pd

df = pd.read_csv('retail_sales.csv')

print(df)

Output:

| Date | Region | Product_Type | Revenue | Cost |

| 2024-01-05 | North | Electronics | 15000.0 | 10000 |

| 2024-03-10 | South | Furniture | 18000.0 | 12000 |

| 2024-07-18 | East | Clothing | NaN | 8000 |

| 2023-11-23 | West | Clothing | 22000.0 | 15000 |

| 2024-05-12 | North | Furniture | 17500.0 | 11000 |

| NaN | East | Electronics | 20000.0 | 14000 |

Step 2: Remove Rows with Missing Revenue

df = df[df['Revenue'].notnull()]

Output:

| Date | Region | Product_Type | Revenue | Cost |

| 2024-01-05 | North | Electronics | 15000.0 | 10000 |

| 2024-03-10 | South | Furniture | 18000.0 | 12000 |

| 2023-11-23 | West | Clothing | 22000.0 | 15000 |

| 2024-05-12 | North | Furniture | 17500.0 | 11000 |

| NaN | East | Electronics | 20000.0 | 14000 |

Step 3: Convert 'Date' to DateTime Format

df['Date'] = pd.to_datetime(df['Date'], errors='coerce')

Output:

| Date | Region | Product_Type | Revenue | Cost |

| 2024-01-05 | North | Electronics | 15000.0 | 10000 |

| 2024-03-10 | South | Furniture | 18000.0 | 12000 |

| 2023-11-23 | West | Clothing | 22000.0 | 15000 |

| 2024-05-12 | North | Furniture | 17500.0 | 11000 |

| NaT | East | Electronics | 20000.0 | 14000 |

Step 4: Filter Transactions for 2024

df_2024 = df[df['Date'].dt.year == 2024]

Output:

| Date | Region | Product_Type | Revenue | Cost |

| 2024-01-05 | North | Electronics | 15000.0 | 10000 |

| 2024-03-10 | South | Furniture | 18000.0 | 12000 |

| 2024-05-12 | North | Furniture | 17500.0 | 11000 |

Step 5: Group Sales by Region and Product Type

grouped_sales = df_2024.groupby(['Region', 'Product_Type'])['Revenue'].sum().reset_index()

print(grouped_sales)

Output:

| Region | Product_Type | Revenue |

| North | Electronics | 15000.0 |

| North | Furniture | 17500.0 |

| South | Furniture | 18000.0 |

Step 6: Create Profit Margin Column

df_2024['profit_margin'] = (df_2024['Revenue'] - df_2024['Cost']) / df_2024['Revenue']

Output:

| Region | Product_Type | Revenue | Cost | Profit Margin |

| North | Electronics | 15000.0 | 10000 | 0.333333 |

| South | Furniture | 18000.0 | 12000 | 0.333333 |

| North | Furniture | 17500.0 | 11000 | 0.371429 |

Final Cleaned & Enriched Dataset

print(df_2024[['Region', 'Product_Type', 'Revenue', 'Cost', 'profit_margin']])

Output:

| Region | Product_Type | Revenue | Cost | Profit Margin |

| North | Electronics | 15000.0 | 10000 | 0.333333 |

| South | Furniture | 18000.0 | 12000 | 0.333333 |

| North | Furniture | 17500.0 | 11000 | 0.371429 |

This kind of cleaning enables deep and actionable analytics using pandas.

Best Practices for Cleaning Data with Pandas

- Always back up your raw dataset before cleaning.

- Use inplace=False when testing changes to avoid data loss.

- Chain methods with caution, readability matters.

- Validate data after cleaning using .describe(), .value_counts(), and .info().

Conclusion

High-quality analytics is based on effective pandas data cleaning. With methods such as missing value imputation, standardization, and outlier removal, you set your data up to be understood in a powerful way. Clean data will allow you to use data analytics to its fullest extent by producing reports, dashboards, and predictive models with certainty through pandas.

Begin implementing these steps in your next project and feel the difference that clean data makes to speed up your analytics process.

Frequently Asked Questions(FAQ’s)

1. How do I handle inconsistent column names when importing multiple CSV files?

When the data of different sources are included, the column names might be a bit different (e.g. "Revenue" vs. "revenue" or "Sales_Revenue"). You can standardize them with:

# Clean column names: remove extra spaces, convert to lowercase, and replace spaces with underscores

df.columns = df.columns.str.strip().str.lower().str.replace(' ', '_')

This will make them consistent in regards to merging or concatenating multiple DataFrames.

2. What is the difference between apply() and map() in pandas to transform data?

- map() is applied to Series only and is effective when working with element-wise operations, which are often dictionaries or functions.

- apply() apply() may be applied to Series or DataFrames and is more general, particularly when transforming row-wise or column-wise.

Example:

df['Category'] = df['Category'].map({'elec': 'electronics'})

df['NewCol'] = df.apply(lambda row: row['Revenue'] - row['Cost'], axis=1)

3. How can I log data cleaning steps for reproducibility and audit?

Maintain a data cleaning notebook (Jupyter/Colab) with markdown comments and versioned scripts, via the Python logging module. You can also track changes using a DataFrame.diff() or exporting checkpoints.

4. Is there a way to detect and correct encoding issues in pandas when reading CSVs?

Yes. There are cases in which CSV including special characters cannot load correctly. Use:

df = pd.read_csv('file.csv', encoding='utf-8') # or 'ISO-8859-1', 'latin1'

If the text looks weird or unreadable, try changing the encoding until it looks normal.

5. How can I validate that cleaned data aligns with business rules or domain logic?

Pandas themselves will not help to detect domain-specific problems. Design your validation:

# Check for negative revenue

assert (df['Revenue'] >= 0).all(), "Negative revenue found"

# Check valid date ranges

assert df['order_date'].between('2023-01-01', '2025-12-31').all()

These checks are needed to ensure data integrity, not just in terms of type and format.