- Multivariate Analysis Overview

- What is Multivariate Analysis?

- The Evolution of Multivariate Analysis

- Key Applications of Multivariate Analysis

- Advantages and Disadvantages of Multivariate Analysis

- Classification Chart of Multivariate Techniques

- Comprehensive Model Building Process

- Key Multivariate Techniques

- Model Building Process in Multivariate Analysis

- Conclusion

- Multivariate Analysis FAQs

Multivariate Analysis Overview

Multivariate Analysis (MVA) is a powerful statistical method that examines multiple variables to understand their impact on a specific outcome. This technique is crucial for analyzing complex data sets and uncovering hidden patterns across diverse fields such as weather forecasting, marketing, and healthcare.

By exploring the relationships between several variables at once, MVA provides deeper insights and more accurate predictions, enhancing decision-making in data-driven industries.

In this blog, we’ll explore the foundations, applications, and methods of multivariate analysis, highlighting its significance in modern data analysis.

What is Multivariate Analysis?

Multivariate Analysis (MVA) is an essential statistical process for analyzing several variables at once, most often to understand how multiple variables jointly affect an outcome.

This technique is fundamental in fields where complex interrelationships among data need to be studied, such as in weather forecasting where variables like temperature, humidity, and wind speed all influence weather conditions.

The Evolution of Multivariate Analysis

Multivariate analysis has a rich history, beginning with John Wishart’s 1928 paper on sample covariance matrices.

Over the decades, significant developments were made by statisticians such as R.A. Fisher and Harold Hotelling, who initially applied these methods in psychology, education, and biology.

The mid-20th century saw a technological boom with the advent of computers, expanding the application of multivariate analysis into new fields such as geology and meteorology. This period marked a shift from theoretical exploration to practical, computational applications.

Key Applications of Multivariate Analysis

The versatility of multivariate analysis is evident across various sectors:

- Healthcare: During the COVID-19 pandemic, multivariate analysis was crucial in forecasting disease spread and outcomes based on multiple health and environmental variables.

- Real Estate: Analysts use multivariate analysis to predict housing prices based on factors such as location, local infrastructure, and market conditions.

- Marketing: Techniques like conjoint analysis help determine consumer preferences and the trade-offs consumers make between different product attributes.
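
Ratings-based conjoint analysis is often estimated by regressing respondents' ratings of product profiles on dummy-coded attribute levels; the fitted coefficients are the part-worth utilities, and their relative ranges describe the trade-offs consumers make. The sketch below is a minimal illustration under stated assumptions: a hypothetical laptop study with two two-level attributes (price: low/high, battery: standard/long) and noise-free ratings simulated from made-up part-worths, so the estimates can be checked against known values. It uses NumPy's `np.linalg.lstsq` for ordinary least squares.

```python
import numpy as np

# Hypothetical full-factorial design: one row per product profile.
# Dummy coding with baselines price=low and battery=standard:
#   column 0: 1 if price is high, column 1: 1 if battery is long
profiles = np.array([
    [0, 0],
    [0, 1],
    [1, 0],
    [1, 1],
], dtype=float)

# Made-up part-worths, used only to simulate ratings for this sketch
ratings = 5.0 + profiles @ np.array([-2.0, 1.5])

# Estimate part-worths by ordinary least squares (intercept column added)
X = np.column_stack([np.ones(len(profiles)), profiles])
(intercept, price_high, battery_long), *_ = np.linalg.lstsq(X, ratings, rcond=None)

# Relative importance of each attribute: its part-worth range / sum of ranges
# (with two levels per attribute, the range is the absolute coefficient)
ranges = np.abs([price_high, battery_long])
importance = ranges / ranges.sum()

print(f"high price part-worth:   {price_high:+.2f}")
print(f"long battery part-worth: {battery_long:+.2f}")
print(f"importance (price, battery): {np.round(importance, 2)}")
```

In a real study, ratings come from many respondents and contain noise, so the part-worths are estimates rather than exact recoveries.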

Advantages and Disadvantages of Multivariate Analysis

Advantages

- The main advantage of multivariate analysis is that it considers, at the same time, several independent variables that influence the variability of the dependent variables, so the conclusions drawn are generally more accurate.

- The conclusions are more realistic and closer to real-life situations.

Disadvantages

- The main disadvantage of MVA is that it requires fairly complex computations to arrive at a satisfactory conclusion.

- Many observations on a large number of variables need to be collected and tabulated, which is a time-consuming process.

Classification Chart of Multivariate Techniques

Selecting the appropriate multivariate technique depends on three questions:

a) Can the variables be divided into independent and dependent variables?

b) If yes, how many variables are treated as dependent in a single analysis?

c) How are the variables, both dependent and independent, measured (for example, on metric or nonmetric scales)?

Multivariate analysis techniques can be classified into two broad categories: dependence methods and interdependence methods. The classification depends on one question: can some of the variables be identified as dependent on the others?

If the answer is yes, we have dependence methods.

If the answer is no, we have interdependence methods.

Dependence techniques: used when one or more of the variables can be identified as dependent variables and the remaining variables as independent variables.

Interdependence techniques: used when no such split is made; all variables are analyzed together to uncover their underlying structure, as in factor analysis or cluster analysis.
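
The three selection questions can be turned into a rough lookup. The function below is a hypothetical, simplified helper: the name `suggest_techniques` and its rules are illustrative assumptions that loosely follow the common dependence/interdependence split and the techniques covered in this article. It is not a substitute for a full classification chart.

```python
def suggest_techniques(has_dependent: bool,
                       n_dependent: int = 0,
                       metric: bool = True) -> list[str]:
    """Hypothetical rule of thumb mapping the selection questions to techniques.

    has_dependent: question (a), can variables be split into dependent/independent?
    n_dependent:   question (b), how many dependent variables in one analysis?
    metric:        question (c), are the (dependent) variables metric
                   (interval/ratio) rather than nonmetric (nominal/ordinal)?
    """
    if not has_dependent:
        # Interdependence methods: all variables are analyzed together
        if metric:
            return ["Factor Analysis", "Cluster Analysis",
                    "Multidimensional Scaling (MDS)"]
        return ["Correspondence Analysis"]

    if n_dependent < 1:
        raise ValueError("a dependence analysis needs at least one dependent variable")

    if n_dependent == 1:
        # Single dependent variable: metric outcome vs. group/binary outcome
        if metric:
            return ["Multiple Regression Analysis", "Conjoint Analysis"]
        return ["Multiple Discriminant Analysis", "Linear Probability Model (LPM)"]

    # Several dependent variables analyzed together
    return ["Multivariate Analysis of Variance (MANOVA)",
            "Canonical Correlation Analysis (CCA)",
            "Structural Equation Modeling (SEM)"]


# Example: one binary outcome (e.g. defaulted / did not default)
print(suggest_techniques(has_dependent=True, n_dependent=1, metric=False))
```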

Comprehensive Model Building Process

Model building in multivariate analysis involves several critical steps:

- Defining the Problem: Clearly defining what you need to solve or understand is the first step in multivariate analysis.

- Data Collection: Gathering adequate data for all variables considered relevant to the analysis.

- Data Preparation: Ensuring data cleanliness and proper format for analysis.

- Choosing the Right Model: Selecting a statistical model that fits the data and problem. This might include regression analysis, conjoint analysis, or discriminant analysis, depending on the complexity and nature of the problem.

- Model Testing and Validation: Rigorous testing to validate the model’s assumptions and its predictive power.

- Implementation and Monitoring: Applying the model in real-world scenarios and continually monitoring its effectiveness.
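
The steps above can be sketched end to end in a few lines. This is a minimal illustration that assumes simulated data (standing in for real data collection), a multiple linear regression as the chosen model, and a simple hold-out split with R² as the validation check. The variable names and data-generating values are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# 1. Define the problem (hypothetical): predict a price from three factors.
# 2. Collect data: simulate 200 observations instead of using real data.
n = 200
X = rng.normal(size=(n, 3))          # e.g. location, infrastructure, market index
true_beta = np.array([3.0, 1.5, -2.0])
y = 10.0 + X @ true_beta + rng.normal(scale=1.0, size=n)

# 3. Prepare data: split into training and validation rows, then standardize
#    predictors with training-set statistics only (no validation leakage).
idx = rng.permutation(n)
train, test = idx[:150], idx[150:]
mean, std = X[train].mean(axis=0), X[train].std(axis=0)
Z = (X - mean) / std

# 4. Choose a model: multiple linear regression fitted by least squares.
design = np.column_stack([np.ones(n), Z])
coef, *_ = np.linalg.lstsq(design[train], y[train], rcond=None)

# 5. Test and validate: out-of-sample R^2 on the held-out rows.
pred = design[test] @ coef
ss_res = np.sum((y[test] - pred) ** 2)
ss_tot = np.sum((y[test] - y[test].mean()) ** 2)
r2 = 1 - ss_res / ss_tot
print(f"validation R^2: {r2:.3f}")

# 6. Implement and monitor: in practice, recompute this metric on new data
#    over time and refit if performance drifts.
```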

Key Multivariate Techniques

Below is a tabular overview of the primary multivariate analysis techniques, detailing their purposes and typical applications:

| Technique | Purpose | Typical Applications |

| Multiple Regression Analysis Also Read: Linear Regression in Machine Learning | Multiple regression is an extension of simple linear regression. It is used when we want to predict the value of a variable based on the value of two or more other variables. The variable we want to predict is called the dependent variable (or sometimes, the outcome, target, or criterion variable). Multiple regression uses multiple “x” variables for each independent variable: (x1)1, (x2)1, (x3)1, Y1) | Used in economics, business, environmental science to forecast outcomes based on several influencing factors. |

| Conjoint Analysis | To determine how people value different attributes of a product or service. | Market research to guide product design, pricing strategies, and marketing tactics. |

| Multiple Discriminant Analysis | To differentiate and classify data into distinct groups.The discriminant equation: F = β0 + β1X1 + β2X2 + … + βpXp + ε where, F is a latent variable formed by the linear combination of the dependent variable, X1, X2,… XP is the p independent variable, ε is the error term and β0, β1, β2,…, βp is the discriminant coefficients. | Used in finance, marketing, and biology for classification based on patterns. |

| Linear Probability Model (LPM) | To predict binary outcomes from one or more explanatory variables. | Used in medical statistics, economics, and social sciences to predict occurrences like disease presence or election outcomes. |

| Multivariate Analysis of Variance (MANOVA) | Multivariate Analysis of Variance (MANOVA) is an advanced form of ANOVA that evaluates differences between group means across multiple dependent variables at once. While ANOVA looks at one response variable, MANOVA can handle two or more, using a similar mathematical approach but with more complex comparisons. | Comparing and contrasting group means in psychology, education, and other research fields where multiple outputs are analyzed. |

| Canonical Correlation Analysis (CCA) | To explore relationships between two sets of variables. | Used in behavioral sciences and ecology to study the interdependencies between different sets of variables. |

| Structural Equation Modeling (SEM) | To analyze structural relationships between measured variables and latent constructs. | Used in social sciences, marketing, and product management to assess complex models of causal relationships. |

| Factor Analysis | To reduce the number of variables in a dataset by grouping them into factors. | Data reduction in psychology, market research, and other fields where large sets of data need to be simplified. |

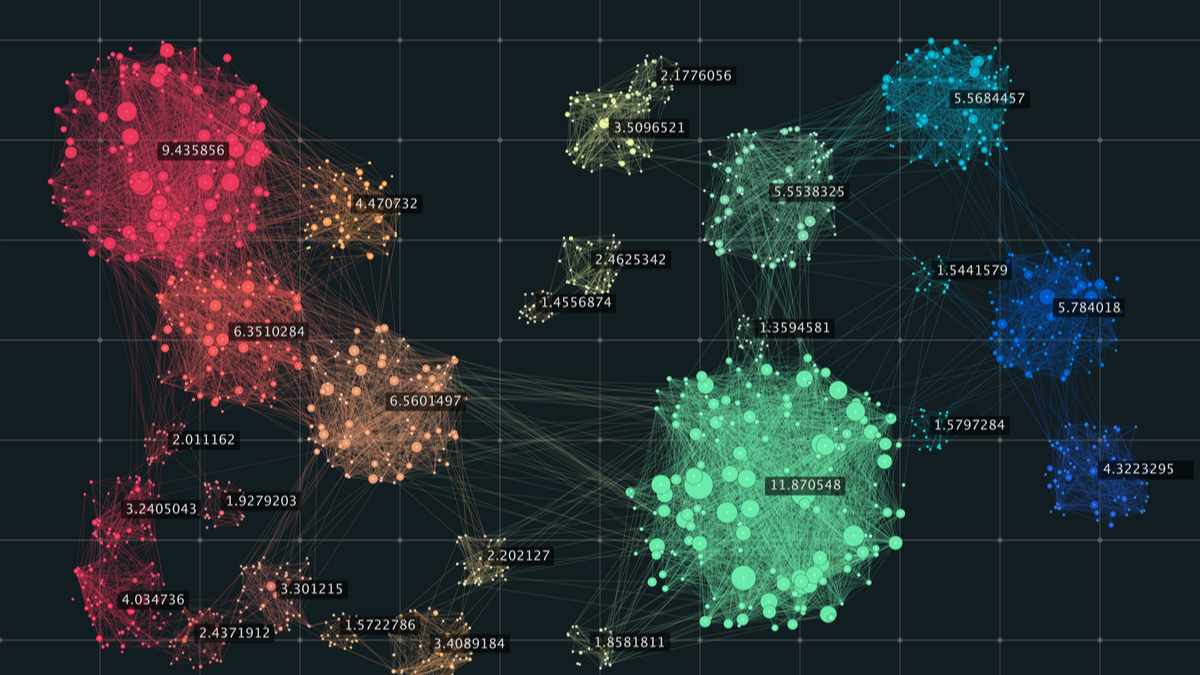

| Cluster Analysis | To classify objects or cases into relative groups called clusters based on their similarity. | Market segmentation, biology for grouping species, and any other field requiring classification without prior labels. |

| Multidimensional Scaling (MDS) | To visualize the distances or dissimilarities among data points. | Used in marketing to map consumer tastes and perceptions, and in psychology to map perceptual distances among stimuli. |

| Correspondence Analysis | To visualize and analyze the patterns in categorical data. | Analyzing data from surveys, such as brand switching data and other types of categorical data analysis. |
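
The discriminant function in the table describes $F$ as a linear combination of the predictors. For two groups, one classic way to obtain the coefficients is Fisher's linear discriminant, which sets $(\beta_1, \dots, \beta_p) = S_W^{-1}(\bar{x}_1 - \bar{x}_0)$, where $S_W$ is the pooled within-group scatter matrix, and places the cutoff $F = 0$ midway between the projected group means (a choice that assumes equal group sizes and a shared covariance structure). The sketch below uses simulated, hypothetical two-group data and computes only the systematic part of $F$, without an error term.

```python
import numpy as np

rng = np.random.default_rng(42)

# Simulated data (hypothetical): two groups measured on p = 2 predictors X1, X2
group0 = rng.normal(loc=[0.0, 0.0], scale=1.0, size=(100, 2))
group1 = rng.normal(loc=[3.0, 2.0], scale=1.0, size=(100, 2))
m0, m1 = group0.mean(axis=0), group1.mean(axis=0)

# Pooled within-group scatter matrix S_W
s_w = (np.cov(group0, rowvar=False) * (len(group0) - 1)
       + np.cov(group1, rowvar=False) * (len(group1) - 1))

# Fisher's discriminant coefficients beta_1..beta_p: w = S_W^{-1} (m1 - m0)
w = np.linalg.solve(s_w, m1 - m0)

# Constant beta_0 puts the cutoff F = 0 midway between the projected group means
b0 = -w @ (m0 + m1) / 2

def discriminant_score(x: np.ndarray) -> np.ndarray:
    """F = beta_0 + beta_1*X1 + ... + beta_p*Xp for each row of x."""
    return b0 + x @ w

# Classify: F > 0 -> group 1, otherwise group 0
X = np.vstack([group0, group1])
labels = np.concatenate([np.zeros(100), np.ones(100)])
predicted = (discriminant_score(X) > 0).astype(float)
accuracy = (predicted == labels).mean()
print(f"training accuracy: {accuracy:.2f}")
```

Training accuracy is optimistic; in practice the function would be validated on held-out cases.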

Model Building Process in Multivariate Analysis

Building a model in multivariate analysis involves several critical steps:

- Defining Objectives: Clearly stating what you want to achieve with the analysis.

- Choosing Techniques: Selecting the appropriate statistical techniques based on the data and objectives.

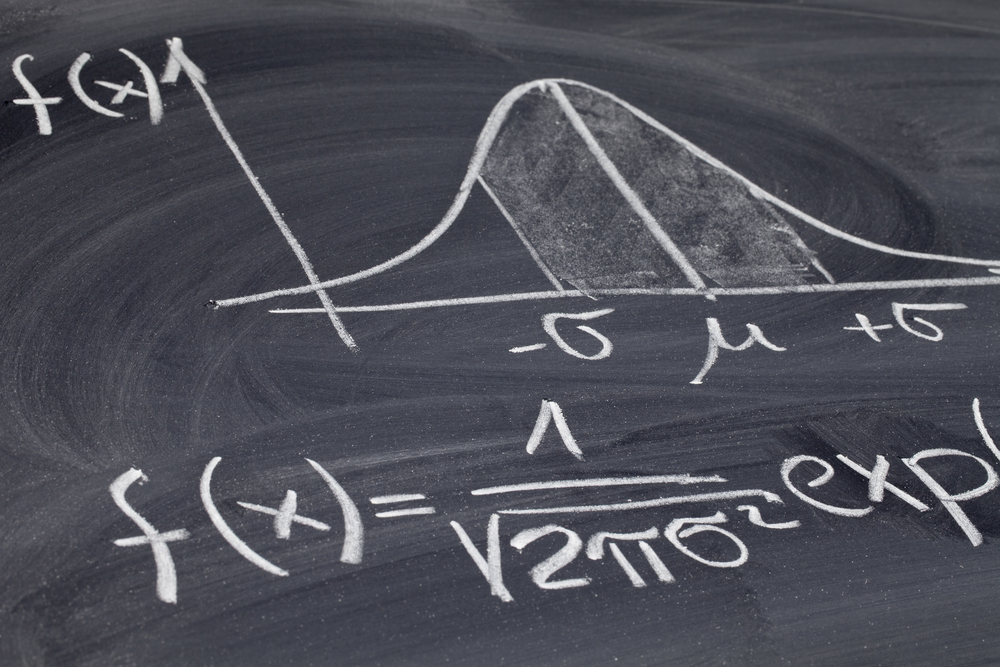

- Assumption Testing: Checking assumptions like normality, homoscedasticity, linearity, and absence of multicollinearity.

- Model Estimation and Validation: Estimating the model parameters and validating the model through various diagnostic tests.

- Interpretation: Drawing conclusions from the model and applying these insights to make informed decisions.
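
For the multicollinearity check in the assumption-testing step, a common diagnostic is the variance inflation factor, $\mathrm{VIF}_j = 1 / (1 - R_j^2)$, where $R_j^2$ comes from regressing predictor $j$ on all the other predictors. Thresholds of about 5 or 10 are widely used rules of thumb rather than strict cutoffs. The sketch below implements this with NumPy on simulated data in which one predictor is nearly the sum of two others; the `vif` helper is written here purely for illustration.

```python
import numpy as np

def vif(X: np.ndarray) -> np.ndarray:
    """Variance inflation factor for each column of X (n_samples x n_predictors)."""
    n, p = X.shape
    out = np.empty(p)
    for j in range(p):
        target = X[:, j]
        # Regress predictor j on an intercept plus all other predictors
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(others, target, rcond=None)
        resid = target - others @ coef
        r2 = 1 - resid @ resid / np.sum((target - target.mean()) ** 2)
        out[j] = 1.0 / (1.0 - r2)
    return out

rng = np.random.default_rng(1)
x1 = rng.normal(size=300)
x2 = rng.normal(size=300)
x3 = x1 + x2 + rng.normal(scale=0.1, size=300)  # nearly a linear combination of x1, x2
x4 = rng.normal(size=300)                       # unrelated predictor

vifs = vif(np.column_stack([x1, x2, x3, x4]))
print(np.round(vifs, 1))
```

Here x1, x2, and x3 should all show very large VIFs, while x4 stays close to 1.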

Advantages Over Univariate and Bivariate Analysis

Unlike univariate or bivariate analysis, which examine one or two variables respectively, multivariate analysis provides a more comprehensive view by considering multiple variables simultaneously. This holistic approach leads to insights that are more detailed and closely aligned with the complexities of real-world scenarios.
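
A small simulation illustrates this point. It assumes a hypothetical setup in which a third variable `z` drives both `x` and `y`, and `x` has a true direct effect of 0.5 on `y`. Looking at `x` and `y` alone (a bivariate view) overstates that effect, while a multiple regression that also includes `z` recovers it.

```python
import numpy as np

rng = np.random.default_rng(7)
n = 5000

# Hypothetical data-generating process: z influences both x and y
z = rng.normal(size=n)
x = 0.8 * z + rng.normal(scale=0.6, size=n)
y = 0.5 * x + 2.0 * z + rng.normal(scale=1.0, size=n)  # true direct effect of x: 0.5

# Bivariate view: regress y on x alone
X_biv = np.column_stack([np.ones(n), x])
slope_biv = np.linalg.lstsq(X_biv, y, rcond=None)[0][1]

# Multivariate view: regress y on x and z together
X_multi = np.column_stack([np.ones(n), x, z])
slope_multi = np.linalg.lstsq(X_multi, y, rcond=None)[0][1]

print(f"slope of x, bivariate:    {slope_biv:.2f}")
print(f"slope of x, multivariate: {slope_multi:.2f}")
```

Under these assumptions the bivariate slope should come out near 2.1 (its theoretical value here), because it absorbs part of the effect of `z`, while the multivariate slope should be near the true 0.5.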

Conclusion

Multivariate analysis is a powerful tool that, when applied correctly, can offer deep insights into complex data sets across various industries.

It enables analysts to make more informed decisions, predict future trends, and optimize outcomes based on a thorough understanding of the interplay between multiple factors.

For professionals looking to enhance their analytical skills, learning multivariate analysis through structured courses and hands-on practice is highly recommended.

If you are a beginner in the field of data science and wish to kick-start your career, taking up free online courses can help you grasp the introductory concepts in a comprehensive manner. Great Learning Academy offers a Data Science Foundations Free Online Course that can help you become job-ready.

The skills you will gain by the end of the course include linear programming and an understanding of the analytics landscape, along with hands-on experience.

Multivariate Analysis FAQs

Three commonly used multivariate techniques are cluster analysis, multiple logistic regression, and multivariate analysis of variance (MANOVA).

Multivariate analysis helps minimize bias, for example confounding bias, because it accounts for several variables at once instead of examining each one in isolation.

Multivariate refers to multiple variables that together influence an outcome, which means most real-world problems are multivariate. For example, we cannot predict the weather in any given year from the season alone; several factors, such as humidity, precipitation, and pollution, play an important role in the prediction.

There are several applications of multivariate analysis. It allows us to handle large datasets and discover hidden data structures, which leads to a better understanding and easier interpretation of the data. Various multivariate techniques can be selected depending on the task at hand.

Multivariate analysis deals with more than two variables and analyzes which ones are associated with a specific outcome, whereas bivariate analysis examines only two paired variables and studies whether there is a relationship between them.