- Reasons for learning linear algebra before machine learning

- How to get started with Linear Algebra for Machine Learning?

- Linear Algebra Notation

- Linear Algebra Arithmetic

- Linear Algebra for Statistics

- Matrix Factorization

- Linear least squares

- Examples of Linear Algebra in Machine Learning

- Linear Algebra Books

- FAQs

- Recommendation System

- Our Machine Learning Courses

Linear algebra is the study of vector spaces, lines and planes, and the mappings between them that define linear transformations. It was formalized in the 1800s to find the unknowns in systems of linear equations, which makes it a relatively young field of study. Linear algebra is an essential field of mathematics and is often called the mathematics of data.

Linear algebra is undeniably an important part of applied machine learning, and many people recommend learning it as a prerequisite before a data scientist starts to apply machine learning. This advice can be considered misguided, because it is only when you start applying machine learning that you need to learn the various concepts of linear algebra.

In this article, you will discover why machine learning practitioners should study linear algebra to improve their skills and capabilities as practitioners. After reading this blog, you will know:

- Five reasons why a deeper understanding of linear algebra is required for intermediate machine learning practitioners.

- Linear algebra can be fun if approached in the right way.


Reasons for learning linear algebra before machine learning

1. Key to excel

Calculus is commonly considered to rank above linear algebra when it comes to advanced mathematics, and integral and differential calculus provide fundamental knowledge needed for applications involving tensors and vectors.

Learning linear algebra will help you develop a better understanding of linear equations and linear functions. Spending more time on linear algebra will also help you with linear programming.

2. Machine Learning Prognostics

Intuition plays an essential role in machine learning, and it can be improved by learning linear algebra. Linear algebra will broaden your thinking and let you bring more perspectives to a problem.

You can begin by setting up different graphs and visualizations, experimenting with various parameters for different machine learning algorithms, or taking on topics that others around you might find difficult to understand.

3. Creating Better Machine Learning Algorithms

You can construct better supervised and unsupervised learning algorithms with the help of linear algebra.

Supervised learning algorithms that can be built from scratch using linear algebra include:

- Logistic Regression

- Linear Regression

- Decision Trees

- Support Vector Machines (SVM)

Unsupervised learning algorithms for which linear algebra can be used include:

- Singular Value Decomposition (SVD)

- Clustering

- Principal Component Analysis (PCA)

If you take up a machine learning project, an in-depth understanding of linear algebra lets you customize the different parameters in that project.

4. Better graphic processing in ML

Images, audio, video, and edge detection are among the kinds of graphical data that machine learning projects work with. Classifiers provided by machine learning algorithms are trained on parts of the given dataset according to their categories, and these classifiers are fit so as to minimize their errors on the training data.

At this stage, linear algebra helps process large and complex datasets using matrix decomposition techniques. QR and LU decomposition are among the most popular matrix decomposition methods.
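As a minimal sketch, assuming NumPy and SciPy are installed, the snippet below factorizes a small made-up matrix with SciPy's `scipy.linalg.lu` and `scipy.linalg.qr`, checks that each factorization reproduces the original matrix, and then reuses the QR factors to solve a linear system. The matrix and right-hand side are illustrative values only.

```python
import numpy as np
from scipy.linalg import lu, qr, solve_triangular

# Illustrative, non-singular square matrix and right-hand side (made-up values)
A = np.array([[4.0, 3.0, 2.0],
              [2.0, 1.0, 3.0],
              [3.0, 2.0, 1.0]])
b = np.array([1.0, 2.0, 3.0])

# LU decomposition with partial pivoting: A = P @ L @ U
P, L, U = lu(A)
print(np.allclose(P @ L @ U, A))  # True

# QR decomposition: A = Q @ R, with Q orthogonal and R upper triangular
Q, R = qr(A)
print(np.allclose(Q @ R, A))  # True

# Solve A x = b with the QR factors: R x = Q^T b, solved by back substitution
x = solve_triangular(R, Q.T @ b)
print(np.allclose(A @ x, b))  # True
```

Solving the triangular system avoids forming an explicit inverse, which is one reason these factorizations are preferred in practice.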

5. Improving Statistics

To organize and integrate data in machine learning, it is essential to know statistics. Learning linear algebra can help you understand the concepts of statistics better. Advanced statistical topics can be expressed using the methods, operations, and notation of linear algebra.

How to get started with Linear Algebra for Machine Learning?

We recommend a breadth-first approach if you want to learn linear algebra while applying machine learning. We call it the results-first approach. You start by learning to work through an end-to-end predictive modeling problem using a tool such as scikit-learn or pandas in Python. This process provides a skeleton on which you can progressively deepen your knowledge of how an algorithm works and, eventually, of the math that underlies these algorithms.
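A results-first starting point might look like the minimal sketch below, assuming scikit-learn is installed. The choice of `LogisticRegression`, the 70/30 split, and `random_state=42` are arbitrary illustrative choices, not a recommended setup; the point is that an end-to-end pipeline runs before any of the underlying math is studied.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Load the Iris data as a feature matrix X and a label vector y
X, y = load_iris(return_X_y=True)

# Hold out part of the data to estimate how well the model generalizes
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42, stratify=y)

# Fit a simple classifier end to end; max_iter is raised to help convergence
model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)

# Fraction of held-out examples classified correctly
accuracy = model.score(X_test, y_test)
print(f"Test accuracy: {accuracy:.2f}")
```

Once this runs, each step (the matrix `X`, the vector `y`, the fitted coefficients in `model.coef_`) becomes a concrete hook for the linear algebra discussed below.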

“Linear algebra is a branch of mathematics that is widely used throughout science and engineering. However, because linear algebra is a form of continuous rather than discrete mathematics, many computer scientists have little experience with it.” – Page 31, Deep Learning, 2016.

Linear Algebra Notation

You need to be able to read and write vector and matrix notation. Algorithms are described in books, papers, and websites using vector and matrix notation. Linear algebra is the mathematics of data, and its notation lets you describe operations on data precisely with specific operators. Being able to read and write this notation allows you to:

- Read descriptions of existing algorithms in textbooks

- Interpret descriptions of new methods in research papers

- Briefly describe your own methods to other practitioners

Programming languages such as Python offer efficient ways to implement linear algebra notation directly. An awareness of the notation and how it is realized in your language or library allows for shorter and often more efficient implementations of machine learning algorithms.
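For example, the notation y = Xb, a matrix-vector product in which each prediction is the dot product of a row of X with the coefficient vector b, can be written almost verbatim with NumPy's `@` operator. The minimal sketch below uses made-up values for `X` and a hypothetical coefficient vector `b`, and compares the one-line version with an explicit loop.

```python
import numpy as np

# Illustrative data: 3 observations (rows) with 2 features (columns)
X = np.array([[1.0, 2.0],
              [3.0, 4.0],
              [5.0, 6.0]])
b = np.array([0.5, -1.0])  # hypothetical coefficient vector

# Notation y = X b written directly as a matrix-vector product
y_vectorized = X @ b

# The same computation spelled out element by element: y_i = sum_j X_ij * b_j
y_loop = np.zeros(X.shape[0])
for i in range(X.shape[0]):
    for j in range(X.shape[1]):
        y_loop[i] += X[i, j] * b[j]

print(y_vectorized)                       # [-1.5 -2.5 -3.5]
print(np.allclose(y_vectorized, y_loop))  # True
```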

Linear Algebra Arithmetic

Alongside its notation, linear algebra defines arithmetic operations. Knowing how to add, subtract, and multiply scalars, vectors, and matrices is essential. A challenge for newcomers to the field is that operations such as matrix multiplication and tensor multiplication are not implemented as a direct multiplication of the elements of these structures, which makes them seem unintuitive at first glance.

Most, if not all, of these operations are implemented efficiently and exposed through API calls in modern linear algebra libraries. Even so, understanding how vector and matrix operations work is required to read and write matrix notation effectively.
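The difference between element-wise multiplication and matrix multiplication is easiest to see side by side. This minimal NumPy sketch, using two made-up 2x2 matrices, shows that `*` multiplies matching entries while `@` computes row-by-column dot products, and that the matrix product is not commutative.

```python
import numpy as np

# Two small illustrative matrices
A = np.array([[1, 2],
              [3, 4]])
B = np.array([[5, 6],
              [7, 8]])

# Element-wise (Hadamard) product: multiplies matching entries
elementwise = A * B   # -> [[5, 12], [21, 32]]

# Matrix product: entry (i, j) is the dot product of row i of A and column j of B
matmul = A @ B        # -> [[19, 22], [43, 50]]

# Matrix multiplication is not commutative in general
print(np.array_equal(A @ B, B @ A))  # False
```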


Linear Algebra for Statistics

To learn statistics, especially multivariate statistics, you need to learn linear algebra. Statistics is another pillar field of mathematics that supports machine learning, and both are primarily concerned with describing and understanding data. As the mathematics of data, linear algebra has left its fingerprint on many related fields of mathematics, including statistics.

To read and interpret data, you need to learn the notation and operations of linear algebra. Modern statistics uses both the notation and the tools of linear algebra to describe statistical methods, from vectors holding the means and variances of data to covariance matrices describing the relationships among multiple Gaussian variables.
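As a minimal sketch with made-up data, the snippet below computes a mean vector and a covariance matrix, once from the linear algebra definition (centered data times its own transpose, divided by n - 1) and once with NumPy's `np.cov`, to show that the two agree.

```python
import numpy as np

# Illustrative data: 5 observations (rows) of 2 variables (columns)
X = np.array([[2.0, 1.0],
              [4.0, 3.0],
              [6.0, 5.0],
              [8.0, 8.0],
              [10.0, 9.0]])

# Vector of means, one entry per variable
mu = X.mean(axis=0)

# Covariance matrix from its definition: (X - mu)^T (X - mu) / (n - 1)
centered = X - mu
cov_manual = centered.T @ centered / (X.shape[0] - 1)

# NumPy's built-in estimate; rowvar=False means columns are variables
cov_numpy = np.cov(X, rowvar=False)

print(mu)
print(np.allclose(cov_manual, cov_numpy))  # True
```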

Some staple machine learning methods are also the result of collaboration between the two fields, such as Principal Component Analysis, or PCA for short, which is used for data reduction.

Matrix Factorization

Building on notation and arithmetic is the idea of matrix factorization, also known as matrix decomposition. You need to know how to factorize a matrix and what the factorization means. Matrix factorization is a key tool in linear algebra and is widely used as an element of more complex operations in both linear algebra (e.g., matrix inversion) and machine learning.

There is a range of different matrix factorization methods, each with different strengths and capabilities, and you may recognize some of them as “machine learning” techniques, such as singular-value decomposition, or SVD for short, used for data reduction. To read and interpret higher-order matrix operations, you need to understand matrix factorization.
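To make "factorize a matrix and know what it means" concrete, here is a minimal NumPy sketch using a made-up 2x2 matrix: `np.linalg.svd` splits it into three factors, the factors multiply back to the original, and the same factors give the matrix inverse (A^-1 = V Σ^-1 U^T), one of the more complex operations mentioned above.

```python
import numpy as np

# Illustrative non-singular square matrix (made-up values)
A = np.array([[3.0, 1.0],
              [1.0, 2.0]])

# Singular-value decomposition: A = U @ diag(s) @ Vt
U, s, Vt = np.linalg.svd(A)
print(np.allclose(U @ np.diag(s) @ Vt, A))  # True

# The factors make the inverse easy to form: A^-1 = V @ diag(1/s) @ U^T
A_inv = Vt.T @ np.diag(1.0 / s) @ U.T
print(np.allclose(A_inv, np.linalg.inv(A)))  # True
```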

Linear least squares

You need to know how to use matrix factorization to solve linear least squares. Linear algebra was originally developed to solve systems of linear equations. In many practical problems there are more equations than unknown variables. Such systems are challenging to solve arithmetically because there is usually no exact solution: no line or plane can fit the data without some error. Problems of this type can be framed as the minimization of squared error, called least squares, and expressed in the language of linear algebra as linear least squares.
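A minimal sketch of linear least squares, assuming NumPy: four made-up points give four equations in two unknowns (intercept and slope), so we minimize the squared error ||Ax - b||². The snippet solves it with the reduced QR factorization (R x = Qᵀb) and compares the result with `np.linalg.lstsq`, NumPy's SVD-based solver.

```python
import numpy as np

# Overdetermined system: 4 equations (points), 2 unknowns (intercept, slope)
# Made-up points that do not lie exactly on one line
t = np.array([0.0, 1.0, 2.0, 3.0])
b = np.array([1.0, 2.9, 5.1, 7.0])
A = np.column_stack([np.ones_like(t), t])  # columns: intercept, slope

# Least squares via the reduced QR factorization: solve R x = Q^T b
Q, R = np.linalg.qr(A)          # default mode='reduced': Q is 4x2, R is 2x2
x_qr = np.linalg.solve(R, Q.T @ b)

# NumPy's built-in least-squares solver for comparison
x_lstsq, residuals, rank, sv = np.linalg.lstsq(A, b, rcond=None)

print(np.allclose(x_qr, x_lstsq))  # True
print(x_qr)  # intercept and slope, approximately 0.97 and 2.02
```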

Examples of Linear Algebra in Machine Learning

1. Datasets and data files

In machine learning, you fit a model on a dataset. A dataset is a table-like set of numbers where each row represents an observation and each column represents a feature of that observation.

Below is a fragment of the Iris flower dataset:

5.1,3.5,1.4,0.2,Iris-setosa
4.9,3.0,1.4,0.2,Iris-setosa
4.7,3.2,1.3,0.2,Iris-setosa
4.6,3.1,1.5,0.2,Iris-setosa
5.0,3.6,1.4,0.2,Iris-setosa
…

This data is in fact a matrix, a key data structure in linear algebra. Further, when you split the data into inputs and outputs to fit a supervised machine learning model, such as the flower measurements and the species, you have a matrix (X) and a vector (y).

A vector is another important data structure in linear algebra. Each row has the same length, i.e., the same number of columns, so we can say that the data is vectorized: rows can be provided to a model one at a time or in batches, and the model can be preconfigured to expect rows of a fixed width.
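A minimal NumPy sketch of that split, using only the five Iris rows shown above embedded as a string: the four measurements become a matrix `X`, the species labels become a vector `y`, and fixed-width rows can be taken singly or as a batch.

```python
import numpy as np

# The five Iris rows shown above, as CSV text
rows = """5.1,3.5,1.4,0.2,Iris-setosa
4.9,3.0,1.4,0.2,Iris-setosa
4.7,3.2,1.3,0.2,Iris-setosa
4.6,3.1,1.5,0.2,Iris-setosa
5.0,3.6,1.4,0.2,Iris-setosa"""

records = [line.split(",") for line in rows.splitlines()]

# Input matrix X: one row per observation, one column per measurement
X = np.array([[float(v) for v in rec[:4]] for rec in records])
# Output vector y: the species label of each observation
y = np.array([rec[4] for rec in records])

print(X.shape)  # (5, 4)
print(y.shape)  # (5,)

# Rows of fixed width can be fed one at a time or as a batch
first_row = X[0]  # a single vector of length 4
batch = X[:3]     # a 3 x 4 batch
```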

2. Images and photos

Perhaps you are more accustomed to working with images or photographs in computer vision applications.

Each image you work with is itself a table structure with a width and a height, holding one pixel value in each cell for black-and-white images or three pixel values in each cell for color images. A photo is therefore another example of a matrix from linear algebra. Operations on the image, such as cropping, scaling, and shearing, are all described using the notation and operations of linear algebra.
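A minimal sketch of these ideas with NumPy, using a tiny made-up 4x4 grayscale image: the image is a matrix, a color image adds a third axis for three values per pixel, cropping is sub-matrix selection, and a 2x2 matrix applied to pixel coordinates expresses scaling and shearing. This only transforms coordinates; real image libraries also resample pixel values, which is not shown here.

```python
import numpy as np

# A tiny made-up 4x4 grayscale image: one intensity value per cell
image = np.arange(16, dtype=np.uint8).reshape(4, 4)

# A color image adds a third axis holding 3 values (e.g., RGB) per pixel
color_image = np.zeros((4, 4, 3), dtype=np.uint8)

# Cropping is just selecting a sub-matrix of rows and columns
crop = image[1:3, 1:3]

# Scaling and shearing act on pixel coordinates through a 2x2 matrix:
# here x is scaled by 2 and sheared by 0.5 per unit of y
transform = np.array([[2.0, 0.5],
                      [0.0, 1.0]])
point = np.array([3.0, 2.0])   # (x, y) coordinate of one pixel
new_point = transform @ point  # -> [7., 2.]

print(crop)
print(new_point)
```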

3. One Hot Encoding

Sometimes you work with categorical data in machine learning, perhaps the class labels for classification problems or perhaps categorical input variables. It is common to encode categorical variables to make them easier to work with and learn by some techniques. A popular encoding for categorical variables is the one-hot encoding. A one-hot encoding is where a table is created to represent the variable, with one column for each category and a row for each example in the dataset. A one value is added in the column for the categorical value of a given row, and a zero value is added to all other columns. For example, take a color variable with the following three rows:

Red

Green

Blue

It can be encoded as follows:

Red, green, blue
1, 0, 0
0, 1, 0
0, 0, 1

Each row is encoded as a binary vector, a vector of zeros and ones, and this is an example of a sparse representation, which is a whole sub-field of linear algebra.
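One compact way to produce this encoding, sketched here with NumPy under the assumption that the category order is red, green, blue as in the table above, is to map each value to a column index and pick the matching rows of an identity matrix.

```python
import numpy as np

colors = ["red", "green", "blue"]      # the three example rows
categories = ["red", "green", "blue"]  # one column per category, in this order

# Map each value to its column index, then select rows of an identity matrix
index = np.array([categories.index(c) for c in colors])
one_hot = np.eye(len(categories), dtype=int)[index]

print(one_hot)
# [[1 0 0]
#  [0 1 0]
#  [0 0 1]]
```

In practice, libraries such as scikit-learn or pandas offer their own encoders, and large encodings are often stored in sparse formats rather than dense arrays.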

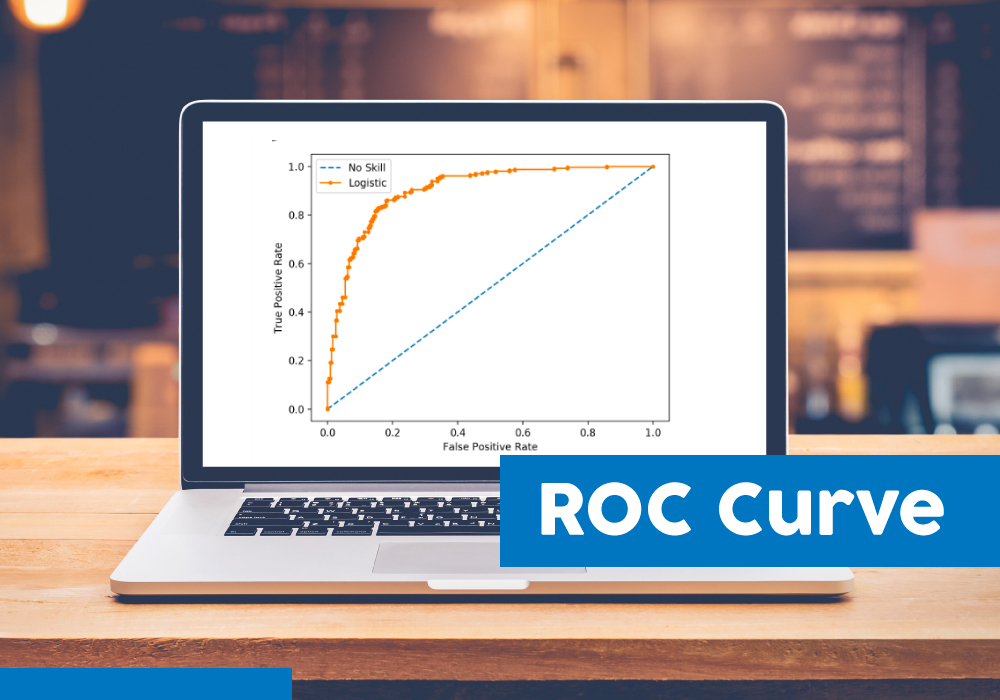

4. Linear Regression

Linear regression is an old method from statistics for describing the relationships between variables. It is often used in machine learning for predicting numerical values in simpler regression problems. There are several ways to describe and solve the linear regression problem, that is, finding a set of coefficients that, when each is multiplied by its input variable and the results are added together, gives the best prediction of the output variable. If you have used a machine learning tool or library, the most common way of solving linear regression is via a least-squares optimization that is solved using matrix factorization methods from linear algebra, such as an LU decomposition or a singular-value decomposition (SVD). Even the common way of summarizing the linear regression equation uses linear algebra notation: y = A · b, where y is the output variable, A is the dataset, and b is the vector of model coefficients.
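A minimal sketch of y = A · b in NumPy, assuming a synthetic dataset generated from known coefficients plus small noise (no intercept column, to match the equation above). The coefficients are recovered with `np.linalg.pinv`, which computes the pseudoinverse via the SVD, and cross-checked against `np.linalg.lstsq`.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic dataset A (100 rows, 3 input variables) with known coefficients
A = rng.normal(size=(100, 3))
true_b = np.array([1.5, -2.0, 0.5])
y = A @ true_b + rng.normal(scale=0.1, size=100)  # add small noise

# Least-squares coefficients via the SVD-based pseudoinverse: b = A^+ y
b = np.linalg.pinv(A) @ y

# Predictions use the same notation: y_hat = A . b
predictions = A @ b

print(np.round(b, 2))  # should be close to true_b
```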

5. Regularization

In applied machine learning, we often seek the simplest possible models that achieve the best skill on our problem. Simpler models are often better at generalizing from specific examples to unseen data. In many methods that involve coefficients, such as regression methods and artificial neural networks, simpler models are often characterized by smaller coefficient values. A technique that is often used to encourage a model to minimize the size of its coefficients while it is being fit on data is called regularization. Common implementations include the L2 and L1 forms of regularization. Both forms use a measure of the magnitude or length of the coefficients as a vector, a method lifted directly from linear algebra called the vector norm.
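A minimal NumPy sketch of both ideas: the L1 and L2 norms of an illustrative coefficient vector, and L2-regularized (ridge) regression on synthetic data using its well-known closed form b = (XᵀX + λI)⁻¹Xᵀy. The data, the helper name `ridge`, and the penalty value λ = 10 are assumptions for illustration only.

```python
import numpy as np

b = np.array([3.0, -4.0, 0.0])  # illustrative coefficient vector

l1 = np.linalg.norm(b, ord=1)  # sum of absolute values -> 7.0
l2 = np.linalg.norm(b)         # Euclidean length -> 5.0

# Synthetic regression data with known coefficients plus noise
rng = np.random.default_rng(1)
X = rng.normal(size=(50, 3))
y = X @ np.array([2.0, -1.0, 0.5]) + rng.normal(scale=0.5, size=50)

def ridge(X, y, lam):
    """Hypothetical helper: closed-form ridge solution (X^T X + lam*I)^-1 X^T y."""
    n_features = X.shape[1]
    # Solve the linear system instead of forming an explicit inverse
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)

b_ols = ridge(X, y, 0.0)     # lam = 0 gives ordinary least squares
b_ridge = ridge(X, y, 10.0)  # a positive lam shrinks the coefficient vector
print(np.linalg.norm(b_ridge) < np.linalg.norm(b_ols))  # True
```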

6. Principal Component Analysis

Often a dataset has many columns, perhaps tens, hundreds, thousands, or more. Modeling data with many features is challenging, and models built from data that include irrelevant features are often less skillful than models trained on the most relevant data. It is hard to know which features of the data are relevant and which are not. Methods for automatically reducing the number of columns of a dataset are called dimensionality reduction, and perhaps the most popular method is principal component analysis, or PCA for short. This method is used in machine learning to create projections of high-dimensional data for both visualization and training models. At the core of PCA is a matrix factorization method from linear algebra: the eigendecomposition can be used, and more robust implementations may use the singular-value decomposition, or SVD.
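A minimal PCA sketch via the eigendecomposition of the covariance matrix, assuming NumPy and a synthetic dataset in which the third feature nearly duplicates the first, so two components should capture almost all of the variance. Library implementations (for example scikit-learn's `PCA`) typically use the SVD instead, as noted above.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data: 200 samples, 3 features; feature 3 nearly duplicates feature 1
base = rng.normal(size=(200, 2))
X = np.column_stack([base[:, 0], base[:, 1],
                     base[:, 0] + 0.01 * rng.normal(size=200)])

# 1. Center the data
Xc = X - X.mean(axis=0)

# 2. Eigendecomposition of the covariance matrix (eigh: for symmetric matrices)
cov = np.cov(Xc, rowvar=False)
eigenvalues, eigenvectors = np.linalg.eigh(cov)

# 3. Sort components by explained variance, largest first
order = np.argsort(eigenvalues)[::-1]
components = eigenvectors[:, order[:2]]  # keep the top 2 principal components

# 4. Project onto the principal components: 3 columns -> 2 columns
X_reduced = Xc @ components
explained = eigenvalues[order] / eigenvalues.sum()

print(X_reduced.shape)  # (200, 2)
print(np.round(explained, 3))
```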


7. Singular-Value Decomposition

Another popular dimensionality reduction method is the singular-value decomposition, or SVD for short. As the name suggests, it is a matrix factorization method from linear algebra. It is widely used in linear algebra and can be used directly in applications such as feature selection, visualization, noise reduction, and more.
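A minimal sketch of SVD-based dimensionality reduction, assuming NumPy and a synthetic 100x5 matrix built to be almost rank 2 (a low-rank signal plus small noise). Keeping the top k = 2 singular values gives both a compact 100x2 representation and a close rank-2 reconstruction, which is also the idea behind SVD-based noise reduction.

```python
import numpy as np

rng = np.random.default_rng(42)

# Synthetic 100 x 5 matrix: rank-2 signal plus small noise
signal = rng.normal(size=(100, 2)) @ rng.normal(size=(2, 5))
A = signal + 0.01 * rng.normal(size=(100, 5))

# Thin SVD: A = U @ diag(s) @ Vt
U, s, Vt = np.linalg.svd(A, full_matrices=False)

k = 2  # number of singular values to keep
A_k = U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :]  # best rank-k approximation
A_reduced = U[:, :k] * s[:k]                 # 100 x 2 reduced representation

rel_error = np.linalg.norm(A - A_k) / np.linalg.norm(A)
print(A_reduced.shape)  # (100, 2)
print(f"relative reconstruction error: {rel_error:.4f}")
```

Note that `A_reduced` equals `A @ Vt[:k].T`, so new rows can be projected the same way.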

8. Latent Semantic Analysis

In the sub-field of machine learning that works with text data, called natural language processing, it is common to represent documents as large matrices of word occurrences. For example, the columns of the matrix may be the known words in the vocabulary and the rows may be sentences, paragraphs, pages, or documents of text, with each cell holding the count or frequency of a word. This is a sparse matrix representation of the text. Matrix factorization methods such as the singular-value decomposition can be applied to this sparse matrix, which has the effect of distilling the representation down to its most relevant essence. Documents processed in this way are much easier to compare, query, and use as the basis for a supervised machine learning model. This form of data preparation is called Latent Semantic Analysis, or LSA for short, and is also known as Latent Semantic Indexing, or LSI.
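A toy LSA sketch, assuming NumPy and a hypothetical four-document corpus: build a document-term count matrix (rows are documents, columns are vocabulary words), keep k = 2 latent dimensions with a truncated SVD, and compare documents by cosine similarity in that reduced space. Real pipelines usually add TF-IDF weighting and sparse matrices, which are omitted here.

```python
import numpy as np

# Tiny hypothetical corpus of four "documents"
docs = [
    "linear algebra matrix vector",
    "matrix factorization vector decomposition",
    "movie film actor",
    "film actor director movie",
]

# Document-term count matrix: rows = documents, columns = vocabulary words
vocab = sorted({w for d in docs for w in d.split()})
counts = np.array([[d.split().count(w) for w in vocab] for d in docs], dtype=float)

# Truncated SVD keeps k latent dimensions ("topics")
k = 2
U, s, Vt = np.linalg.svd(counts, full_matrices=False)
doc_vectors = U[:, :k] * s[:k]  # each document as a k-dimensional vector

def cosine(a, b):
    """Cosine similarity between two vectors."""
    return a @ b / (np.linalg.norm(a) * np.linalg.norm(b))

# Documents on the same theme should be close in the latent space
print(round(cosine(doc_vectors[0], doc_vectors[1]), 2))
print(round(cosine(doc_vectors[0], doc_vectors[2]), 2))
```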

Linear Algebra Books

For Beginners:

- Linear Algebra: A Modern Introduction by David Poole

- Linear Algebra Done Right by Sheldon Axler

- Introduction to Linear Algebra by Serge Lang

- Introduction to Linear Algebra by Gilbert Strang

Application Based:

- Linear Algebra and Its Applications by David C. Lay

- Numerical Linear Algebra by Lloyd Trefethen

- Linear Algebra and Its Applications by Gilbert Strang

- Matrix Computations by Gene Golub and Charles Van Loan

FAQs

1. Is linear algebra required for machine learning?

You don’t have to learn linear algebra before you get started in machine learning. However, it is advisable to improve your linear algebra if you wish to learn machine learning in depth. Learning linear algebra will not only help you understand other areas of mathematics but will also help you develop a better understanding of machine learning algorithms.

2. Is Linear Algebra good for programming?

Linear programming is one of the most widely used optimization techniques, and optimization is one of the most common applications of linear algebra, so learning linear algebra is useful. Using linear programming, you can even optimize your diet, budget, or daily travel route.

3. How is linear algebra used in data science?

With a good understanding of linear algebra, you will better understand machine learning and deep learning algorithms. You won’t need to treat these algorithms as black boxes, and you will be able to choose proper hyperparameters and develop better models.

4. Is Linear Algebra difficult?

Linear algebra requires less computational work than calculus and is often considered easier than elementary calculus. However, linear algebra is often the first actual math course that students take. It is a considerable change of pace from regular high school mathematics and calculus, so it can be difficult for new students. Its mechanics are easy, but you may find it conceptually hard.

5. What are the applications of linear algebra?

Some Applications of linear algebra are:

- Least-squares approximation

- Traffic Flow

- Electrical Circuits

- Determinant

- Genetics

- Graph Theory

- Cryptography

- Markov Chain

6. What are the applications of linear algebra in computer science?

Applications of linear algebra in computer science are:

- Graphics

- Image processing

- Cryptography

- Machine Learning

- Computer Vision

- Optimization

- Graph Algorithms

- Quantum Computation

- Information Retrieval

- Web Search

7. How much linear algebra do you need for machine learning?

Learning just the basics of linear algebra is enough to understand how algorithms are used. To know how things work behind the scenes, however, you need a strong foundation in linear algebra and calculus. Some machine learning is almost pure linear algebra, involving many matrix operations, and you may find it difficult without sound knowledge of linear algebra.

Recommendation System

Predictive modeling problems that involve recommending products are called recommendation systems, a sub-field of machine learning. Examples include recommending books on Amazon based on your previous purchases and the purchases of customers like you.

Another example is recommending movies and TV shows on platforms like Netflix based on your viewing history and the viewing history of subscribers like you. The development of recommendation systems relies heavily on linear algebra methods. A simple example is calculating the similarity between sparse customer behavior vectors using distance measures such as Euclidean distance or dot products.

Matrix factorization methods such as the singular-value decomposition are also widely used in recommendation systems.
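A minimal sketch of the similarity step, assuming NumPy and a hypothetical 0/1 user-item purchase matrix: compare one user's behavior vector with the others using Euclidean distance and dot products, then suggest items the most similar user bought that the target user has not. The matrix-factorization side of real recommendation systems is not shown.

```python
import numpy as np

# Hypothetical user-item matrix: rows = users, columns = items (e.g., books)
# 1 = purchased, 0 = not purchased; real matrices are large and sparse
ratings = np.array([
    [1, 1, 0, 0, 1],  # user 0 (target)
    [1, 1, 1, 0, 0],  # user 1
    [0, 0, 1, 1, 0],  # user 2
], dtype=float)

target = ratings[0]
others = ratings[1:]

# Euclidean distance: smaller means more similar behavior
euclidean = np.linalg.norm(others - target, axis=1)
# Dot product: number of items both users purchased
dot = others @ target

print(euclidean)  # distances from user 0 to users 1 and 2
print(dot)        # overlap of user 0 with users 1 and 2

# Recommend items the nearest user bought that the target has not
nearest = 1 + np.argmin(euclidean)  # +1 because 'others' starts at user 1
candidates = np.where((ratings[nearest] == 1) & (target == 0))[0]
print(candidates)
```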

Our Machine Learning Courses

Explore our Machine Learning and AI courses, designed for comprehensive learning and skill development.

| Program Name | Duration |
|---|---|
| MIT No code AI and Machine Learning Course | 12 Weeks |
| MIT Data Science and Machine Learning Course | 12 Weeks |
| Data Science and Machine Learning Course | 12 Weeks |