Anomaly detection resembles the way the human brain constantly looks for anything that falls outside the “normal” or the “usual stuff.” An anomaly is simply something that does not fit the usual pattern: an abnormal growth in the data, or a pattern that differs from the rest of the data. Data science concepts and tools likewise look for anomalies that break the normal data flow.

For example, an “unusually high” number of login attempts on a particular account may point to a potential cyberattack, and an unusually large credit card transaction may indicate fraud.

In this blog we will discuss:

- What is Anomaly detection?

- Why do we need Anomaly detection?

- Types of Anomaly detection

- Benefits and applications of Anomaly detection

- Machine learning methods to do anomaly detection

- Machine Learning and Outlier Analysis

- Project with anomaly detection

What is Anomaly Detection?

Anomaly detection is a technique for identifying rare events or observations. Because these observations are statistically different from the rest of the data, they can be a sign of suspicious activity. Such observations are called anomalies, their behaviour is called “anomalous” behaviour, and they typically point to a problem such as credit card fraud, a failing machine in a server, a cyber attack, or some other serious issue.

Why do we need Anomaly Detection?

Machine learning applications are often grouped into four classes:

- classification,

- predicting the next upcoming value,

- anomaly detection,

- and discovering structure.

Of these four classes, anomaly detection finds the data points that do not fit well with, or behave differently from, the rest of the data. Its applications include fraud detection, surveillance, diagnosis, data cleanup, and predictive maintenance.

With the advent of IoT, anomaly detection now also plays a key role in IoT applications such as monitoring and predictive maintenance.

Modern businesses increasingly depend on data. They forecast sales with the help of these new technologies, and they have started to understand the importance of interconnected operations for getting the full picture of their business. At the same time, they need to respond promptly to fast-moving changes in data, especially when it comes to cybersecurity threats. Anomaly detection can be a key to catching such intrusions, because constantly growing datasets cannot be handled and analyzed manually in any effective way. In constantly changing data, “normal” behavior is redefined at every moment, and anomaly detection offers an effective, proactive approach to identifying anomalous behaviour.

So anomaly detection is needed to identify the anomalies in the data and to get the full benefit of these capabilities.

Types of Anomaly detection

Anomalies can be divided into three categories:

- Point Anomaly: An individual data instance is a Point Anomaly if it lies far away from the rest of the data.

Example: A sudden transaction of a huge amount on a credit card

- Contextual Anomaly: An observation is a Contextual Anomaly if it is anomalous only because of its context. The same value could be normal in a different context, such as another time or place.

- Collective Anomaly: A Collective Anomaly is a set of related data instances that is anomalous as a group, even if the individual instances are not. This type of anomaly has two variations.

- Events are in unexpected order

- Unexpected value combinations
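A point anomaly can be illustrated with a simple z-score check. This is only a minimal sketch: the amounts are made-up values, and the cut-off of 2.5 is an assumed choice (3 is more common, but a sample this small cannot reach it).

```python
import numpy as np

# Hypothetical transaction amounts; the last value is deliberately extreme.
amounts = np.array([25.0, 30.0, 27.5, 22.0, 31.0, 28.0, 26.5, 29.0, 24.0, 950.0])

# z-score: how many standard deviations each value lies from the mean.
z_scores = (amounts - amounts.mean()) / amounts.std()

# Assumed cut-off; tune it for real data.
threshold = 2.5
point_anomalies = amounts[np.abs(z_scores) > threshold]
print(point_anomalies)
```

Only the 950.0 transaction lies far enough from the rest of the data to be flagged.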

Benefits and application of anomaly detection:

Artificial Intelligence helps teams handle the elastic environment of cloud infrastructure, microservices and containers, and AI concepts are widely used to overcome these challenges.

Here are some examples of what AI-driven anomaly detection offers:

Automation: AI-driven anomaly detection algorithms can automatically analyze and understand datasets. They are efficient enough to dynamically fine-tune the parameters of normal behavior and identify breaches in the patterns.

Real-time analysis: AI solutions can interpret data activity in real time by checking and comparing patterns. As soon as the system sees a pattern it does not recognize, it sends a signal.

Scrupulousness: Anomaly detection provides end-to-end, gap-free monitoring that goes through the minutiae of the data. It can identify even the smallest anomalies, which are almost impossible for a human eye to find.

Accuracy: AI generally handles anomaly detection more accurately than manual monitoring. It improves accuracy by avoiding the nuisance alerts and false positives/negatives triggered by static thresholds.
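The difference between a static threshold and a dynamically adjusted one can be sketched with a rolling mean and standard deviation. Everything here is an assumption made for illustration: the synthetic series, the fixed limit of 110, the window of 20 points, and the factor of 4 standard deviations.

```python
import numpy as np
import pandas as pd

# Hypothetical metric: the normal level shifts upward halfway, plus one spike.
rng = np.random.default_rng(0)
values = np.concatenate([rng.normal(100, 2, 50), rng.normal(150, 2, 50)])
values[80] = 190.0  # injected anomaly
series = pd.Series(values)

# Static rule: one fixed limit that was chosen for the first regime.
static_alerts = series > 110

# Adaptive rule: compare each point with the mean and std of the previous 20 points.
window = 20
rolling_mean = series.rolling(window).mean().shift(1)
rolling_std = series.rolling(window).std().shift(1)
adaptive_alerts = (series - rolling_mean).abs() > 4 * rolling_std

print("static alerts:", int(static_alerts.sum()))
print("adaptive alerts:", int(adaptive_alerts.sum()))
```

The static rule keeps alerting on every point once the normal level has shifted, while the adaptive rule reacts to the shift, settles on the new level, and still catches the injected spike.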

Self-learning: Self-driving cars, such as those from Tesla, are a well-known example of an industry built on AI. AI-driven algorithms constitute the core of such self-learning systems, which learn from data patterns and deliver predictions or answers as required.

Machine learning methods to do anomaly detection:

What is Machine Learning?

Machine learning is a subset of artificial intelligence (AI) that allows a system to automatically learn and improve from experience without being explicitly programmed.

There are three types of machine learning:

- Supervised

- Unsupervised

- Reinforcement learning

What is supervised learning?

As the name suggests, supervised learning works with a supervisor or teacher. We teach or train the machine with labeled data (that is, data already tagged with some predefined class). Then we test the model with a new, unseen set of data and predict the labels for it.

What is unsupervised learning?

Unsupervised learning is a machine learning technique in which you do not supervise the model. Instead, you let the model work on its own to discover information. It mainly deals with unlabeled data.

What is Reinforcement Learning?

Reinforcement learning is about taking suitable action to maximize reward in a particular situation. It is used to define the best sequence of decisions that allow the agent to solve a problem while maximizing a long-term reward.

Machine Learning and Outlier Analysis

What is an outlier?

An outlier is any data object or point that deviates significantly from the remaining data points. In data mining, outliers are commonly treated as exceptions or simply as noise to be discarded. In anomaly detection they cannot be discarded, because they are exactly what we are looking for, hence the emphasis on outlier analysis.

One machine learning method that can be used for anomaly detection is K-means clustering.

What is K means clustering?

K-means clustering is an unsupervised machine learning algorithm. It is used for unlabeled data (i.e., data without defined categories, classes, or groups). The goal of the K-means algorithm is to find groups in the data, with the number of groups represented by the variable K. The algorithm works iteratively to assign each data point to one of the K groups based on its features, so data points are clustered by feature similarity.

The results of the K-means clustering algorithm are:

- The centroids of the K clusters, which are used to label new data

- Labels for each data point in the training data (each data point is assigned to a single cluster)
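Both results can be read directly from a fitted scikit-learn `KMeans` model. The sketch below uses synthetic two-dimensional data, and K=2 is assumed only because the data was generated with two groups.

```python
import numpy as np
from sklearn.cluster import KMeans

# Synthetic 2-D data: two well-separated blobs (illustrative values only).
rng = np.random.default_rng(42)
blob_a = rng.normal(loc=[0.0, 0.0], scale=0.5, size=(50, 2))
blob_b = rng.normal(loc=[5.0, 5.0], scale=0.5, size=(50, 2))
X = np.vstack([blob_a, blob_b])

# K is chosen by the user; here K=2 matches how the data was generated.
kmeans = KMeans(n_clusters=2, n_init=10, random_state=0).fit(X)

centroids = kmeans.cluster_centers_   # one centroid per cluster, shape (2, 2)
labels = kmeans.labels_               # cluster index of every training point

# A new point is labelled by its nearest centroid.
new_label = kmeans.predict(np.array([[4.8, 5.1]]))[0]
print(centroids.shape, labels[:5], new_label)
```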

K-means clustering can therefore be used to detect outliers based on their distance from the closest cluster.

K-means forms clusters of the data points in the dataset, each with a mean value (the centroid). Objects within a cluster are the ones closest to that cluster's mean. Any object whose distance from the nearest cluster mean is greater than a chosen threshold is identified as an outlier.

Step-by-step implementation using K-means clustering:

- First, calculate the mean value of each cluster

- Then set an initial threshold value

- During testing, determine the distance of each data point from the cluster means

- Identify the cluster that is nearest to the test data point

- If the “distance” to that nearest cluster is more than the “threshold” value, conclude that the point is an outlier.
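The steps above can be sketched with scikit-learn as follows. The data is synthetic, `is_outlier` is a hypothetical helper, and using the 99th percentile of the training distances as the threshold is an assumption; the article does not say how the threshold should be chosen.

```python
import numpy as np
from sklearn.cluster import KMeans

rng = np.random.default_rng(7)
train = np.vstack([
    rng.normal(loc=[0.0, 0.0], scale=0.5, size=(100, 2)),
    rng.normal(loc=[6.0, 6.0], scale=0.5, size=(100, 2)),
])

# Step 1: the cluster means are the fitted centroids.
kmeans = KMeans(n_clusters=2, n_init=10, random_state=0).fit(train)

# Step 2: assumed threshold, the 99th percentile of training distances
# to the nearest centroid. transform() returns distances to every centroid.
train_dist = kmeans.transform(train).min(axis=1)
threshold = np.percentile(train_dist, 99)

def is_outlier(points):
    """Steps 3-5: compare the distance to the nearest centroid with the threshold."""
    nearest_dist = kmeans.transform(points).min(axis=1)
    return nearest_dist > threshold

test_points = np.array([[0.1, -0.2], [6.2, 5.9], [3.0, 3.0]])
print(is_outlier(test_points))
```

The first two test points sit inside a cluster, while the third lies between the clusters and far from both centroids, so only the third is flagged.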

- Supervised Anomaly Detection:

As we have already seen, supervised learning needs a labeled dataset. For anomaly detection, this dataset contains both normal and anomalous samples, which are used to construct a predictive model that classifies future data points. Commonly used algorithms for this purpose include Support Vector Machines and K-Nearest Neighbors classifiers, among others.
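As a minimal sketch of the supervised approach, the example below trains a K-Nearest Neighbors classifier on synthetic labeled data. The data, the label convention (0 = normal, 1 = anomalous), and `n_neighbors=5` are all assumptions for illustration.

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier

rng = np.random.default_rng(1)
# Labelled synthetic data: label 0 = normal, label 1 = anomalous.
normal = rng.normal(loc=0.0, scale=1.0, size=(200, 2))
anomalous = rng.normal(loc=6.0, scale=1.0, size=(20, 2))
X = np.vstack([normal, anomalous])
y = np.array([0] * 200 + [1] * 20)

clf = KNeighborsClassifier(n_neighbors=5).fit(X, y)

# Classify future points with the fitted model.
future = np.array([[0.2, -0.1], [6.1, 5.8]])
predictions = clf.predict(future)
print(predictions)
```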

- Unsupervised Anomaly Detection:

We introduced unsupervised learning earlier in this tutorial.

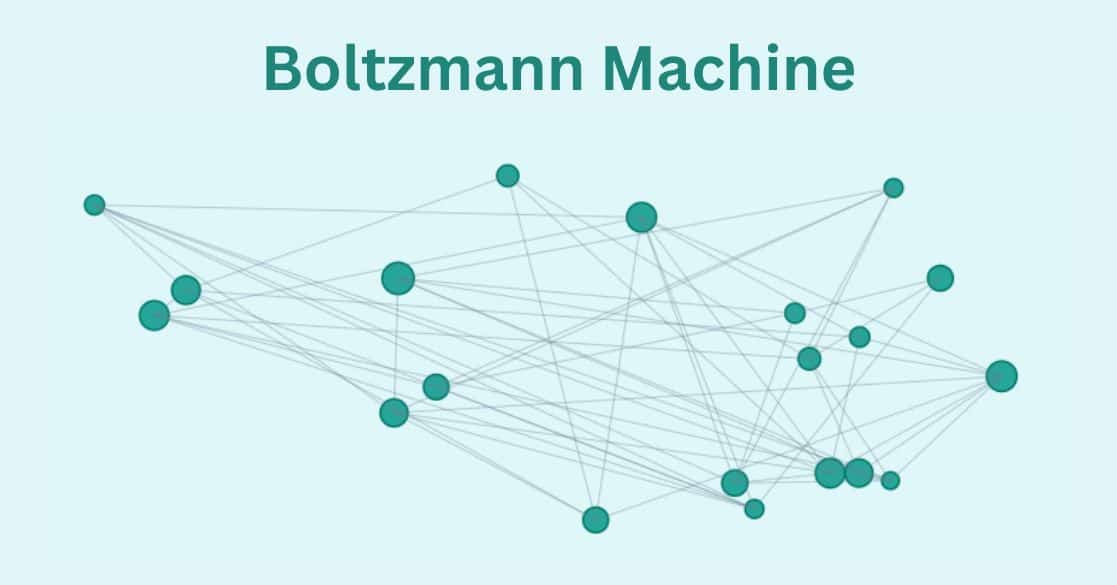

Unsupervised anomaly detection algorithms fall into groups such as:

(1) Nearest-neighbor based techniques,

(2) Clustering-based methods and

(3) Statistical algorithms.

This learning process does not require labeled training data. Instead, it assumes two things about the data:

- Only a very small percentage of the whole dataset is anomalous

- Any anomaly is statistically different from the normal samples.

Based on these two assumptions, the data is clustered using a similarity measure, and the data points that lie far away from the clusters are considered anomalies.
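A nearest-neighbor based technique of this kind is available in scikit-learn as `LocalOutlierFactor`. The sketch below is one possible illustration, not the article's own method: the data is synthetic, and the `contamination` value of 2% is an assumed way of encoding the first assumption.

```python
import numpy as np
from sklearn.neighbors import LocalOutlierFactor

rng = np.random.default_rng(3)
inliers = rng.normal(loc=0.0, scale=1.0, size=(200, 2))
outliers = np.array([[8.0, 8.0], [-9.0, 7.5], [10.0, -8.0], [-8.5, -9.0]])
X = np.vstack([inliers, outliers])   # no labels are passed to the model

# contamination encodes the first assumption: roughly 2% of the data is anomalous.
lof = LocalOutlierFactor(n_neighbors=20, contamination=0.02)
flags = lof.fit_predict(X)            # -1 = anomaly, 1 = normal

print("flagged indices:", np.where(flags == -1)[0])
```

The four injected points (indices 200 to 203) are far from every neighbor, so they are among the flagged samples. Because `contamination` fixes the share of points to flag, a borderline normal point may be flagged as well.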

Project with anomaly detection:

Credit card Fraud Analysis:

Project: Credit card Fraud Analysis using Data mining techniques

In today’s world, we are on an express train to becoming a cashless society. As per the World Payments Report, in 2016 total non-cash transactions increased by 10.1% from 2015, for a total of 482.6 billion transactions! That’s huge! It is also expected that non-cash transactions will grow steadily in future years.

This is a blessing, but it is also a curse for a cashless society because of the immense number of fraud transactions, even though EMV smart chips have been implemented.

So data scientists are trying to build models that predict fraud transactions as well as possible.

Import the libraries:

import pandas as pd

import numpy as np

import matplotlib

import matplotlib.pyplot as plt

%matplotlib inline

from sklearn.model_selection import train_test_split

from sklearn.metrics import classification_report, accuracy_score

from sklearn.metrics import confusion_matrix

from sklearn.linear_model import LogisticRegression

from sklearn import metrics

import seaborn as sns

Collect the Data

The data comes from a Kaggle dataset.

Dataset description:

- It contains about 285,000 rows of data and 31 columns.

- The most important columns are Time, Amount, and Class (fraud or not fraud)

data_df=pd.read_csv('/content/creditcard 2.csv')

data_df.head()

Data understanding:

data_df.describe() displays basic statistical details of a data frame or a series of numeric values, such as:

- percentiles,

- mean,

- std,

- etc.

For example, here we only take the Amount, Time, and Class columns.

data_df[['Amount', 'Time', 'Class']].describe()

- data_df.isna().any(): This method checks each column for null values.

data_df.isna().any()

A result of False for a column means that the column has no null values.

- Display the percentage of total null values in the dataset:

The percentage is calculated just to reconfirm that the dataset has no null values.
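The article does not show the code for this step, so here is one possible way to compute the percentage with pandas. The small `demo_df` frame is a stand-in with one deliberately missing value; with the article's data you would use `data_df` instead.

```python
import numpy as np
import pandas as pd

# Small stand-in frame; with the article's data you would use data_df instead.
demo_df = pd.DataFrame({
    "Time": [0.0, 1.0, 2.0, 3.0],
    "Amount": [149.62, np.nan, 378.66, 123.50],
    "Class": [0, 0, 1, 0],
})

# Share of missing cells per column, in percent.
null_pct_per_column = demo_df.isna().mean() * 100

# Share of missing cells in the whole frame, in percent.
total_null_pct = demo_df.isna().to_numpy().mean() * 100

print(null_pct_per_column)
print(total_null_pct)
```

For a dataset without null values, both results are 0.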

- Find out the percentage of total not fraud transactions in the dataset:

Class = 0 means not a fraud transaction; Class = 1 means a fraud transaction.

notFraud = data_df['Class'] == 0  # boolean mask: True for rows that are not fraud

nfcount = notFraud.sum()  # number of not fraud transactions

nfcount

per_nf = notFraud.mean() * 100  # the mean of a boolean mask is the fraction of True values

print('percentage of total not fraud transaction in the dataset: ', per_nf)

So about 99.83% of the records are normal transactions.

- Find out the percentage of total fraud transactions in the dataset:

Fraud = data_df['Class'] == 1  # boolean mask: True for fraud rows

fcount = Fraud.sum()  # number of fraud transactions

fcount

per_f = Fraud.mean() * 100  # the mean of a boolean mask is the fraction of True values

print('percentage of total fraud transaction in the dataset: ', per_f)

About 0.172% of the records are fraud transactions.

Data Visualization:

Now we will visualize the data with graphs to understand it more intuitively.

- Plot Fraud transaction vs genuine transaction:

plt.title("Bar plot for Fraud VS Genuine transactions")

sns.countplot(x = 'Class', data = data_df, edgecolor = 'w')  # counts the rows in each class (0 = genuine, 1 = fraud)

The graph shows that genuine transactions far outnumber fraud transactions.

- Plot Amount Vs Time:

x = data_df['Time']

y = data_df['Amount']

plt.scatter(x, y, s = 2)  # one dot per transaction; a line plot would join unrelated points

plt.xlabel('Time')

plt.ylabel('Amount')

plt.title('Amount Vs Time')

This graph plots the relation between Time and Amount.

- Amount distribution curve:

plt.figure(figsize=(10,8))

plt.title('Amount Distribution')

sns.histplot(data_df['Amount'], kde=True, color='red')  # replaces the deprecated sns.distplot

The amount distribution curve shows that high-amount transactions are very rare. That rarity makes huge transactions worth a closer look, although a large amount alone does not prove fraud.

Find the correlation between all the attributes in the Data:

correlation_metrics = data_df.corr()  # correlation matrix

fig = plt.figure(figsize = (14, 9))

sns.heatmap(correlation_metrics, vmax = .9, square = True)

plt.show()

The correlation matrix shows how strongly each pair of attributes is linearly related.

Find the outliers in the dataset:

An outlier is an observation that deviates markedly from the rest of the data, so finding outliers helps to reveal abnormal behaviour in the dataset.
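The article does not show how the outliers are found. One common option, sketched here as an assumption rather than the author's method, is the interquartile range (IQR) rule. The amounts are made up; with the article's data the series would be `data_df['Amount']`.

```python
import pandas as pd

# Hypothetical amounts; with the article's data this would be data_df['Amount'].
amount = pd.Series([12.0, 15.5, 9.99, 20.0, 18.25, 14.0, 2500.0, 11.0, 16.75, 13.5])

q1 = amount.quantile(0.25)
q3 = amount.quantile(0.75)
iqr = q3 - q1

# Tukey's rule with the conventional factor of 1.5 (an adjustable choice).
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
outliers = amount[(amount < lower) | (amount > upper)]
print(outliers)
```

Only the 2500.0 value falls outside the computed bounds in this toy series.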

Modelling:

To start modelling, we first need to split the dataset:

- 80% of the data will be used to train the model

- 20% will be used to validate the model

x = data_df.drop(['Class'], axis = 1)  # drop the target variable

y = data_df['Class']

xtrain, xtest, ytrain, ytest = train_test_split(x, y, test_size = 0.2, random_state = 42)
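Because fraud cases are so rare, a plain random split can leave the validation set with a different share of fraud rows than the training set. The `stratify` parameter of `train_test_split` keeps the class ratio equal in both parts. The sketch below demonstrates this on synthetic labels; the names `X_demo` and `y_demo` are hypothetical.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Synthetic imbalanced labels: 1,000 samples, 2% positives (illustrative only).
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(1000, 3))
y_demo = np.array([1] * 20 + [0] * 980)

# stratify=y_demo keeps the class ratio the same in both splits.
X_tr, X_te, y_tr, y_te = train_test_split(
    X_demo, y_demo, test_size=0.2, random_state=42, stratify=y_demo
)

print(y_tr.mean(), y_te.mean())
```

With the article's variables, the equivalent change would be passing `stratify = y` to the existing call.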

The first model we will try is a Linear regression model:

What is a Linear regression model?

Linear regression is a supervised algorithm that finds a linear relationship between independent and dependent variables, that is, between two or more continuous variables.

It is mostly used in forecasting and prediction. Because it models a linear relationship between the input and output variables, it is called linear regression.

Equation to solve linear regression problems:

Y = MX + C

Where, Y = dependent variable

X = independent variable

M = slope

C = intercept
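To make the equation concrete, the sketch below recovers M and C from points that lie exactly on a known line. The data is made up, and `np.polyfit` is used only as a convenient least-squares fit for a single input variable.

```python
import numpy as np

# Points generated from Y = 2X + 1, so the fitted slope should be 2 and the intercept 1.
X = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
Y = 2.0 * X + 1.0

# np.polyfit with degree 1 returns the least-squares slope M and intercept C.
M, C = np.polyfit(X, Y, 1)
print(round(M, 6), round(C, 6))
```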

With this brief introduction to Linear regression, we can start implementing and training the model.

Here we call the linear regression method from the scikit-learn library and fit the model.

from sklearn.linear_model import LinearRegression

linear = LinearRegression()

linear.fit(xtrain, ytrain)

Now comes the prediction part:

In this part we provide the test data to the model to understand its performance.

y_pred = linear.predict(xtest)

table = pd.DataFrame({"Actual": ytest, "Predicted": y_pred})

table

As per the accuracy score, the model's predictions are not good enough. This is expected, because linear regression outputs continuous values rather than the 0/1 class labels that fraud detection needs.
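The article does not show how an accuracy score is obtained from a regression model. `accuracy_score` expects class labels, so the continuous predictions have to be converted first. The sketch below does this on synthetic data with an assumed cut-off of 0.5; it is an illustration, not the author's evaluation code.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import accuracy_score

rng = np.random.default_rng(5)
# Synthetic one-feature data: class 1 has larger feature values (illustrative only).
X = np.concatenate([rng.normal(0, 1, 200), rng.normal(6, 1, 200)]).reshape(-1, 1)
y = np.array([0] * 200 + [1] * 200)

reg = LinearRegression().fit(X, y)
raw = reg.predict(X)                  # continuous values, not class labels

# Assumed cut-off of 0.5 to turn the continuous output into 0/1 labels.
labels = (raw >= 0.5).astype(int)
print("raw range:", raw.min(), raw.max())
print("accuracy after thresholding:", accuracy_score(y, labels))
```

The raw predictions extend beyond the 0 to 1 range, which is one reason a classifier such as logistic regression is usually the better fit for this kind of target.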

So we can try some other algorithms to predict the fraud transaction:

Logistic Regression:

What is Logistic Regression?

Logistic regression is also a supervised learning classification algorithm. It predicts the probability of a target variable whose nature is discrete, so here the output has only two classes.

- The dependent variable is binary in nature, so it can be either 1 (stands for success/yes) or 0 (stands for failure/no).

- Logistic regression uses the sigmoid (logistic) function to turn a linear score into a probability

- Sigmoid function = 1 / (1 + e^-value)
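The sigmoid formula can be written out in a few lines of NumPy. The input scores are arbitrary examples, and the 0.5 decision rule is the usual default, stated here as an assumption.

```python
import numpy as np

def sigmoid(value):
    """Logistic function 1 / (1 + e^-value); maps any real number into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-value))

scores = np.array([-4.0, 0.0, 4.0])
probabilities = sigmoid(scores)

# Assumed decision rule: predict class 1 when the probability is at least 0.5.
predicted_class = (probabilities >= 0.5).astype(int)
print(probabilities, predicted_class)
```

A score of 0 maps to a probability of exactly 0.5, large negative scores approach 0, and large positive scores approach 1.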

- Implement and train the model:

logisticreg = LogisticRegression()

logisticreg.fit(xtrain, ytrain)

- Predict the new data using Logistic Regression model:

y_pred = logisticreg.predict(xtest)

table= pd.DataFrame({"Actual":ytest,"Predicted":y_pred})

table

According to the accuracy score, Logistic regression works pretty well, which fits the fact that predicting fraud transactions is a classification problem.
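Accuracy alone should be read with care on data this imbalanced. The sketch below uses hypothetical labels to show that a model which never predicts fraud still reaches a very high accuracy, which is why the confusion matrix and the recall on the fraud class are worth checking as well.

```python
import numpy as np
from sklearn.metrics import accuracy_score, confusion_matrix, recall_score

# Hypothetical labels: 1,000 transactions, only 5 of them fraud.
y_true = np.array([0] * 995 + [1] * 5)

# A useless "model" that predicts "not fraud" for everything.
y_always_normal = np.zeros_like(y_true)

print("accuracy:", accuracy_score(y_true, y_always_normal))
print("fraud recall:", recall_score(y_true, y_always_normal))
print(confusion_matrix(y_true, y_always_normal))
```

With the article's model, the same functions can be called with `ytest` and `y_pred` in place of the hypothetical arrays.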

So, this is one method to predict fraud transactions, but there are many other methods and algorithms to solve this problem.

In this article, we covered what anomaly detection is and how we can use machine learning to perform such tasks.
